OTLP Ingestion by the Datadog Agent


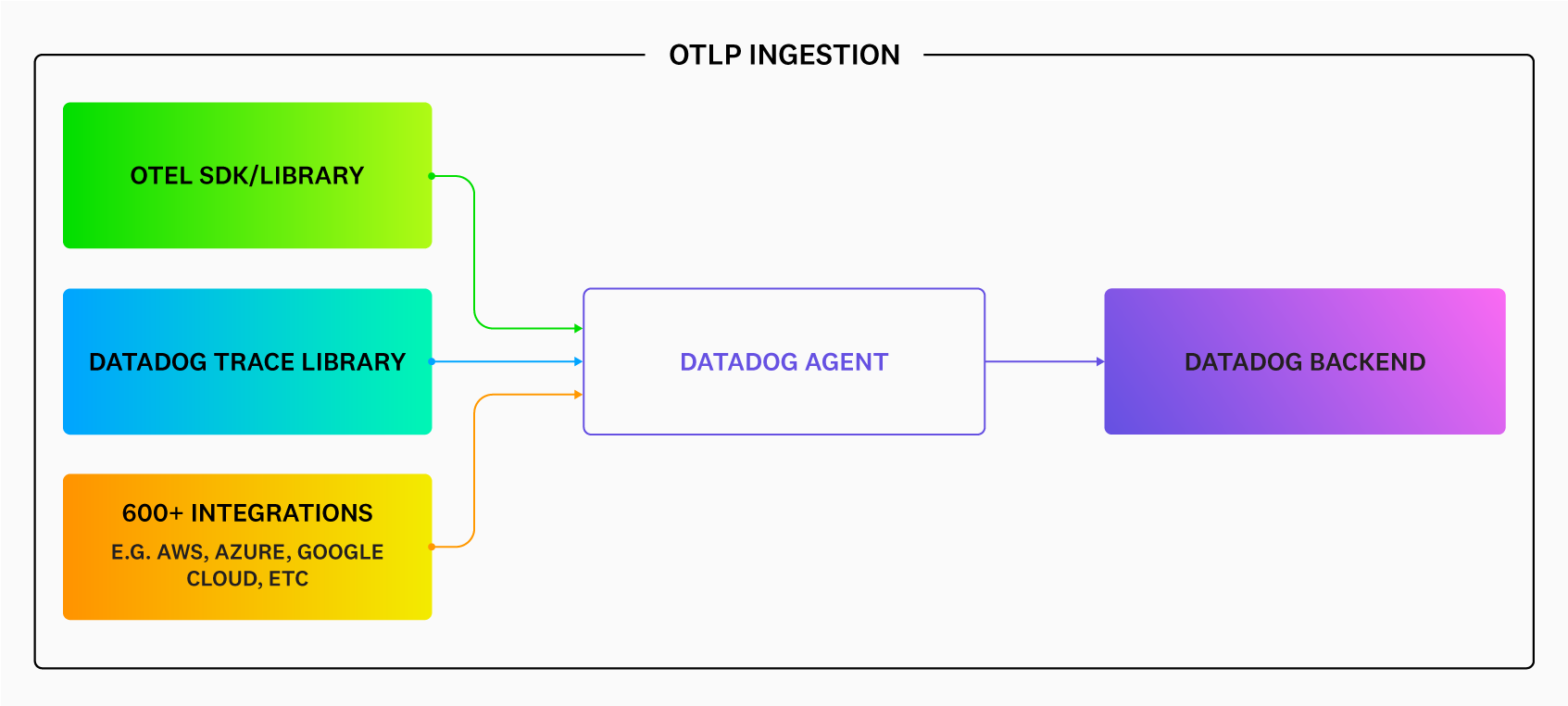

OTLP Ingest in the Agent is a way to send telemetry data directly from applications instrumented with OpenTelemetry SDKs to the Datadog Agent. Since versions 6.32.0 and 7.32.0, the Datadog Agent can ingest OTLP traces and OTLP metrics through gRPC or HTTP. Since versions 6.48.0 and 7.48.0, the Datadog Agent can also ingest OTLP logs through gRPC or HTTP.

OTLP Ingest in the Agent allows you to use observability features in the Datadog Agent. Data from applications instrumented with OpenTelemetry SDKs cannot be used in some Datadog proprietary products, such as Application Security Management, Continuous Profiler, and Ingestion Rules. OpenTelemetry Runtime Metrics are supported for some languages.

To get started, you first instrument your application with OpenTelemetry SDKs. Then, export the telemetry data in OTLP format to the Datadog Agent. Configuring this varies depending on the kind of infrastructure your service is deployed on, as described on the page below. Although the aim is to be compatible with the latest OTLP version, the OTLP Ingest in the Agent is not compatible with all OTLP versions. The versions of OTLP that are compatible with the Datadog Agent are those that are also supported by the OTLP receiver in the OpenTelemetry Collector. To verify the exact versions supported, check the go.opentelemetry.io/collector version in the Agent go.mod file.

Read the OpenTelemetry instrumentation documentation to understand how to point your instrumentation to the Agent. The receiver section described below follows the OpenTelemetry Collector OTLP receiver configuration schema.

Note: The supported setup is an ingesting Agent deployed on every host that generates OpenTelemetry data. You cannot send OpenTelemetry telemetry from collectors or instrumented apps running on one host to an Agent on a different host. However, provided the Agent is local to the collector or SDK-instrumented app, you can set up multiple pipelines.

Enabling OTLP Ingestion on the Datadog Agent

OTLP ingestion is off by default, and you can turn it on by updating your datadog.yaml file configuration or by setting environment variables. The following datadog.yaml configurations enable endpoints on the default ports.

The following examples use 0.0.0.0 as the endpoint address for convenience. This allows connections from any network interface. For enhanced security, especially in local deployments, consider using localhost instead. For more information on secure endpoint configuration, see the OpenTelemetry security documentation.

For gRPC, default port 4317:

    otlp_config:
      receiver:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317

For HTTP, default port 4318:

    otlp_config:
      receiver:
        protocols:
          http:
            endpoint: 0.0.0.0:4318
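If an application needs both protocols, the two receiver entries can sit under the same protocols key. The following is a minimal sketch of a datadog.yaml, assuming a host install where the instrumented application runs on the same machine, so the endpoints are bound to localhost rather than 0.0.0.0 as the security note above suggests.

```yaml
# datadog.yaml (sketch): enable both OTLP receivers on their default ports.
# Binding to localhost is an assumption for a single-host setup; use 0.0.0.0
# if the Agent must accept connections on non-local interfaces.
otlp_config:
  receiver:
    protocols:
      grpc:
        endpoint: localhost:4317
      http:
        endpoint: localhost:4318
```

Restart the Agent after editing the file so that the new receiver configuration is loaded.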

Alternatively, configure the endpoints by providing the port through the environment variables:

- For gRPC (localhost:4317): DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT
- For HTTP (localhost:4318): DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_HTTP_ENDPOINT

These must be passed to both the core Agent and trace Agent processes. If running in a containerized environment, use 0.0.0.0 instead of localhost to ensure the server is available on non-local interfaces.

Configure either gRPC or HTTP for this feature. Here is an example application that shows configuration for both.

OTLP logs ingestion on the Datadog Agent is disabled by default so that you don't have unexpected logs product usage that may impact billing. To enable OTLP logs ingestion:

Explicitly enable log collection as a whole by following Host Agent Log collection setup:

    logs_enabled: true

Set otlp_config.logs.enabled to true:

    otlp_config:
      logs:
        enabled: true
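Put together with a receiver, the two log settings above might look like the following in a single datadog.yaml. This is a sketch only: the choice of the gRPC receiver and the localhost binding are assumptions for illustration, and any other settings already in your file stay as they are.

```yaml
# datadog.yaml (sketch): OTLP logs ingestion needs both log collection as a
# whole and the OTLP logs switch, in addition to at least one receiver.
logs_enabled: true

otlp_config:
  receiver:
    protocols:
      grpc:
        endpoint: localhost:4317   # assumed: gRPC only, local connections
  logs:
    enabled: true
```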

Follow the Datadog Docker Agent setup.

For the Datadog Agent container, set the following endpoint environment variables and expose the corresponding port:

- For gRPC: Set DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT to 0.0.0.0:4317 and expose port 4317.
- For HTTP: Set DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_HTTP_ENDPOINT to 0.0.0.0:4318 and expose port 4318.

If you want to enable OTLP logs ingestion, set the following environment variables in the Datadog Agent container:

- Set DD_LOGS_ENABLED to true.
- Set DD_OTLP_CONFIG_LOGS_ENABLED to true.
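For Docker Compose users, the container settings above could be expressed roughly as follows. This is a sketch under assumptions: the service name datadog-agent, the image tag, and the DD_API_KEY variable are not taken from this page, and the volume mounts required by the Docker Agent setup are omitted for brevity.

```yaml
# docker-compose.yaml (sketch): Agent container with OTLP ingestion enabled.
services:
  datadog-agent:                       # assumed service name
    image: gcr.io/datadoghq/agent:7    # assumed image tag
    environment:
      - DD_API_KEY=${DD_API_KEY}       # assumed to be set in your shell or .env
      - DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT=0.0.0.0:4317
      - DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_HTTP_ENDPOINT=0.0.0.0:4318
      # Optional: only needed for OTLP logs ingestion
      - DD_LOGS_ENABLED=true
      - DD_OTLP_CONFIG_LOGS_ENABLED=true
    ports:
      - "4317:4317"   # OTLP/gRPC
      - "4318:4318"   # OTLP/HTTP
```

If only one protocol is needed, drop the other endpoint variable and its port mapping.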

Follow the Kubernetes Agent setup.

Configure the following environment variables in both the trace Agent container and the core Agent container:

For gRPC:

    name: DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT # enables gRPC receiver on port 4317
    value: "0.0.0.0:4317"

For HTTP:

    name: DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_HTTP_ENDPOINT # enables HTTP receiver on port 4318
    value: "0.0.0.0:4318"

Map the container ports 4317 or 4318 to the host port for the core Agent container:

For gRPC:

    ports:
      - containerPort: 4317
        hostPort: 4317
        name: traceportgrpc
        protocol: TCP

For HTTP:

    ports:
      - containerPort: 4318
        hostPort: 4318
        name: traceporthttp
        protocol: TCP

If you want to enable OTLP logs ingestion, set the following environment variables in the core Agent container:

Enable log collection with your DaemonSet:

    name: DD_LOGS_ENABLED
    value: "true"

And enable OTLP logs ingestion:

    name: DD_OTLP_CONFIG_LOGS_ENABLED
    value: "true"
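To show where these pieces sit relative to each other, here is a sketch of the containers section of an Agent DaemonSet using the gRPC variant. The container names agent and trace-agent are assumptions, and the fragment is meant to be merged into an existing manifest rather than applied on its own (images, volumes, and other settings are left out).

```yaml
# Fragment of a DaemonSet pod spec (sketch, gRPC variant).
containers:
  - name: agent                 # assumed name of the core Agent container
    env:
      - name: DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT
        value: "0.0.0.0:4317"
      # Optional: only needed for OTLP logs ingestion
      - name: DD_LOGS_ENABLED
        value: "true"
      - name: DD_OTLP_CONFIG_LOGS_ENABLED
        value: "true"
    ports:
      - containerPort: 4317
        hostPort: 4317
        name: traceportgrpc
        protocol: TCP
  - name: trace-agent           # assumed name of the trace Agent container
    env:
      # The endpoint variable must be set in both containers
      - name: DD_OTLP_CONFIG_RECEIVER_PROTOCOLS_GRPC_ENDPOINT
        value: "0.0.0.0:4317"
```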

Follow the Kubernetes Agent setup.

Enable the OTLP endpoints in the Agent by editing the datadog.otlp section of the values.yaml file:

For gRPC:

    otlp:
      receiver:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
            enabled: true

For HTTP:

    otlp:
      receiver:
        protocols:
          http:
            endpoint: 0.0.0.0:4318
            enabled: true

This enables each protocol on the default port (4317 for OTLP/gRPC and 4318 for OTLP/HTTP).
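Because the snippets above show only the otlp section, it can help to see them nested under the datadog key that the text refers to. The following sketch enables both protocols at once, which is an assumption for illustration; enable only the one you need.

```yaml
# values.yaml (sketch): the otlp block lives under the top-level datadog key.
datadog:
  otlp:
    receiver:
      protocols:
        grpc:
          endpoint: 0.0.0.0:4317
          enabled: true
        http:
          endpoint: 0.0.0.0:4318
          enabled: true
```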

Follow the Kubernetes Agent setup.

Enable the preferred protocol:

For gRPC:

    --set "datadog.otlp.receiver.protocols.grpc.enabled=true"

For HTTP:

    --set "datadog.otlp.receiver.protocols.http.enabled=true"

This enables each protocol on the default port (4317 for OTLP/gRPC and 4318 for OTLP/HTTP).

For detailed instructions on using OpenTelemetry with AWS Lambda and Datadog, see the Serverless documentation for AWS Lambda and OpenTelemetry. It covers:

- Instrumenting your Lambda functions with OpenTelemetry

- Using OpenTelemetry API support within Datadog tracers

- Sending OpenTelemetry traces to the Datadog Lambda Extension

There are many other environment variables and settings supported in the Datadog Agent. To get an overview of them all, see the configuration template.

Sending OpenTelemetry traces, metrics, and logs to Datadog Agent

For the application container, set the OTEL_EXPORTER_OTLP_ENDPOINT environment variable to point to the Datadog Agent container. For example:

    OTEL_EXPORTER_OTLP_ENDPOINT=http://<datadog-agent>:4318

Both containers must be defined in the same bridge network, which is handled automatically if you use Docker Compose. Otherwise, follow the Docker example in Tracing Docker Applications to set up a bridge network with the correct ports.
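In a Docker Compose file, the application side of this setup might look like the sketch below. The service name app, the image my-otel-app, and the Agent service name datadog-agent are all hypothetical; the Agent service itself is assumed to be defined elsewhere in the same Compose file with the HTTP receiver enabled.

```yaml
# docker-compose.yaml (sketch): application container pointing at the Agent.
services:
  app:                                  # hypothetical service name
    image: my-otel-app:latest           # hypothetical application image
    environment:
      # "datadog-agent" is the assumed Compose service name of the Agent;
      # Compose resolves it on the shared default network.
      - OTEL_EXPORTER_OTLP_ENDPOINT=http://datadog-agent:4318
      # Standard OpenTelemetry setting; port 4318 expects OTLP over HTTP.
      - OTEL_EXPORTER_OTLP_PROTOCOL=http/protobuf
```

Setting the protocol explicitly avoids relying on the SDK default, which differs between languages.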

In the application deployment file, configure the endpoint that the OpenTelemetry client sends traces to with the OTEL_EXPORTER_OTLP_ENDPOINT environment variable.

For gRPC:

    env:
      - name: HOST_IP
        valueFrom:
          fieldRef:
            fieldPath: status.hostIP
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: "http://$(HOST_IP):4317" # sends to gRPC receiver on port 4317

For HTTP:

    env:
      - name: HOST_IP
        valueFrom:
          fieldRef:
            fieldPath: status.hostIP
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: "http://$(HOST_IP):4318" # sends to HTTP receiver on port 4318
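For context, the env entries above belong in the container spec of the application workload. The following minimal Deployment is a sketch that uses the HTTP variant; the names my-otel-app and app and the image are hypothetical placeholders.

```yaml
# Minimal Deployment (sketch) showing where the env entries go.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-otel-app                     # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-otel-app
  template:
    metadata:
      labels:
        app: my-otel-app
    spec:
      containers:
        - name: app                     # hypothetical container name
          image: my-otel-app:latest     # hypothetical application image
          env:
            # HOST_IP must be declared before the variable that references it
            - name: HOST_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
            - name: OTEL_EXPORTER_OTLP_ENDPOINT
              value: "http://$(HOST_IP):4318"   # Agent on the same node
```

Kubernetes expands $(HOST_IP) only for variables defined earlier in the same env list, so the order of the two entries matters.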

Note: To enrich container tags for custom metrics, set the appropriate resource attributes in the application code where your OTLP metrics are generated. For example, set the container.id resource attribute to the pod’s UID.
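The note above describes setting the attribute in application code. As an alternative sketch, and assuming your OpenTelemetry SDK reads the standard OTEL_RESOURCE_ATTRIBUTES environment variable, the pod UID can be injected through the Kubernetes downward API instead. The variable name POD_UID is an arbitrary choice for illustration.

```yaml
# Container env fragment (sketch): pass the pod UID as a resource attribute.
env:
  - name: POD_UID                       # arbitrary helper variable name
    valueFrom:
      fieldRef:
        fieldPath: metadata.uid
  - name: OTEL_RESOURCE_ATTRIBUTES
    value: "container.id=$(POD_UID)"    # sets container.id to the pod's UID
```

If the application already sets OTEL_RESOURCE_ATTRIBUTES, append the new key to the existing comma-separated list rather than defining the variable twice.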

When configuring the endpoint for sending traces, ensure you use the correct path required by your OTLP library. Some libraries expect traces to be sent to the /v1/traces path, while others use the root path /.

Further reading

Additional helpful documentation, links, and articles: