Setup Data Streams Monitoring for .NET

Prerequisites

Supported libraries

| Technology | Library | Minimal tracer version | Recommended tracer version |
|---|---|---|---|
| Kafka | Confluent.Kafka | 2.28.0 | 2.41.0 or later |
| RabbitMQ | RabbitMQ.Client | 2.28.0 | 2.37.0 or later |
| Amazon SQS | Amazon SQS SDK | 2.48.0 | 2.48.0 or later |
| Amazon SNS | Amazon SNS SDK | 3.6.0 | 3.6.0 or later |
| Amazon Kinesis | Amazon Kinesis SDK | 3.7.0 | 3.7.0 or later |
| IBM MQ | IBMMQDotnetClient | 2.49.0 | 2.49.0 or later |
| Azure Service Bus (requires additional setup) | Azure.Messaging.ServiceBus | 2.53.0 | 2.53.0 or later |

Installation

.NET uses auto-instrumentation to inject and extract the additional metadata that Data Streams Monitoring needs to measure end-to-end latencies and to map the relationships between queues and services.

To enable Data Streams Monitoring, set the DD_DATA_STREAMS_ENABLED environment variable to true on every service that sends messages to, or consumes messages from, a supported technology such as Kafka or RabbitMQ.

For example:

    environment:
      - DD_DATA_STREAMS_ENABLED: "true"
      - DD_TRACE_REMOVE_INTEGRATION_SERVICE_NAMES_ENABLED: "true"

Monitoring Kafka pipelines

Data Streams Monitoring uses message headers to propagate context through Kafka streams. If log.message.format.version is set in the Kafka broker configuration, it must be set to 0.11.0.0 or higher. Data Streams Monitoring is not supported for versions lower than this.

Monitoring SQS pipelines

Data Streams Monitoring uses one message attribute to track a message’s path through an SQS queue. As Amazon SQS has a maximum limit of 10 message attributes allowed per message, all messages streamed through the data pipelines must have 9 or fewer message attributes set, allowing the remaining attribute for Data Streams Monitoring.

Monitoring RabbitMQ pipelines

The RabbitMQ integration can provide detailed monitoring and metrics of your RabbitMQ deployments. For full compatibility with Data Streams Monitoring, Datadog recommends configuring the integration as follows:

    instances:
      - prometheus_plugin:
          url: http://<HOST>:15692
          unaggregated_endpoint: detailed?family=queue_coarse_metrics&family=queue_consumer_count&family=channel_exchange_metrics&family=channel_queue_exchange_metrics&family=node_coarse_metrics

This ensures that all RabbitMQ graphs populate, and that you see detailed metrics for individual exchanges as well as queues.

Monitoring SNS-to-SQS pipelines

To monitor a data pipeline where Amazon SNS talks directly to Amazon SQS, you must enable Amazon SNS raw message delivery.
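As an illustration, raw message delivery is a per-subscription setting. The sketch below assumes the subscription is managed with AWS CloudFormation, where the AWS::SNS::Subscription resource exposes a RawMessageDelivery property. The resource names (OrdersTopic, OrdersQueue, OrdersSubscription) are hypothetical, and the queue policy that allows the topic to send to the queue is omitted for brevity.

```yaml
# Minimal sketch, not a complete template: resource names are hypothetical,
# and the SQS queue policy granting the topic permission to send is omitted.
Resources:
  OrdersTopic:
    Type: AWS::SNS::Topic

  OrdersQueue:
    Type: AWS::SQS::Queue

  OrdersSubscription:
    Type: AWS::SNS::Subscription
    Properties:
      TopicArn: !Ref OrdersTopic          # Ref on a topic returns its ARN
      Protocol: sqs
      Endpoint: !GetAtt OrdersQueue.Arn   # SQS endpoints are given as the queue ARN
      RawMessageDelivery: true            # deliver the message without the SNS JSON envelope
```

If the subscription already exists and is managed another way, the same setting can be changed by setting the RawMessageDelivery subscription attribute to true.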

Monitoring Azure Service Bus

Setting up Data Streams Monitoring for Azure Service Bus applications requires additional configuration for the instrumented application.

- Either set the environment variable AZURE_EXPERIMENTAL_ENABLE_ACTIVITY_SOURCE to true, or in your application code set the Azure.Experimental.EnableActivitySource context switch to true. This instructs the Azure Service Bus library to generate tracing information. See Azure SDK documentation for more details.

- Set the DD_TRACE_OTEL_ENABLED environment variable to true. This instructs the .NET auto-instrumentation to listen to the tracing information generated by the Azure Service Bus library and enables the inject and extract operations required for Data Streams Monitoring.
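Taken together with the installation step, an Azure Service Bus service ends up with three environment variables set. The following is a minimal sketch that assumes a Docker Compose-style service definition and uses the environment-variable option rather than the in-code context switch. The service name and image are hypothetical; only the three variable names come from this page.

```yaml
# Minimal sketch, assuming a Docker Compose-style deployment.
# "orders-consumer" and its image are hypothetical placeholders.
services:
  orders-consumer:
    image: example/orders-consumer:latest
    environment:
      # Enables Data Streams Monitoring in the .NET tracer
      DD_DATA_STREAMS_ENABLED: "true"
      # Tells the Azure Service Bus library to generate tracing information
      AZURE_EXPERIMENTAL_ENABLE_ACTIVITY_SOURCE: "true"
      # Tells the .NET auto-instrumentation to listen to that tracing information
      DD_TRACE_OTEL_ENABLED: "true"
```

The values are quoted so that they are passed to the process as the string true rather than being interpreted as YAML booleans.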

Monitoring connectors

Confluent Cloud connectors

Data Streams Monitoring can automatically discover your Confluent Cloud connectors and visualize them within the context of your end-to-end streaming data pipeline.

Setup

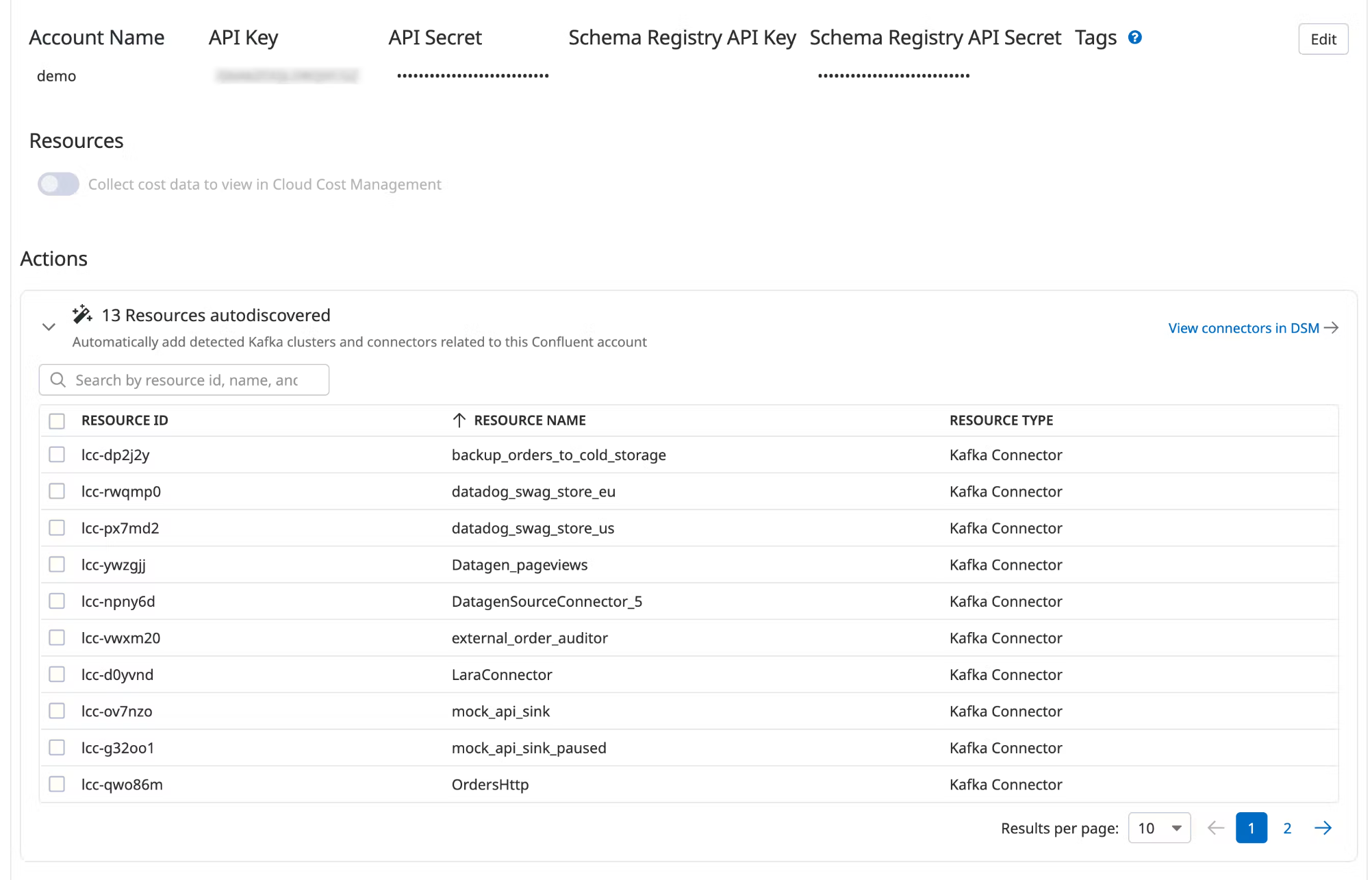

1. Install and configure the Datadog-Confluent Cloud integration.

2. In Datadog, open the Confluent Cloud integration tile.

3. Under Actions, a list of resources populates with detected clusters and connectors. Datadog attempts to discover new connectors every time you view this integration tile.

4. Select the resources you want to add.

5. Click Add Resources.

6. Navigate to Data Streams Monitoring to visualize the connectors and track connector status and throughput.
