Out-of-the-Box Evaluations

Overview

Out-of-the-box evaluations are built-in tools for assessing your LLM application on dimensions such as quality, security, and safety. Enabling them lets you measure the effectiveness of your application’s responses, including detection of negative sentiment, topic relevancy, toxicity, failure to answer, and hallucination.

LLM Observability associates evaluations with individual spans so you can view the inputs and outputs that led to a specific evaluation.

LLM Observability out-of-the-box evaluations leverage LLMs. To connect your LLM provider to Datadog, you need a key from the provider.

Connect your LLM provider account

Configure the LLM provider you would like to use for bring-your-own-key (BYOK) evaluations. You only have to complete this step once.

If you are subject to HIPAA, you are responsible for ensuring that you connect only to an OpenAI account that is subject to a Business Associate Agreement (BAA) and configured for zero data retention.

Connect your OpenAI account to LLM Observability with your OpenAI API key. LLM Observability uses the GPT-4o mini model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the OpenAI tile.

- Follow the instructions on the tile.

- Provide your OpenAI API key. Ensure that this key has write permission for model capabilities.

- Enable Use this API key to evaluate your LLM applications.

Azure OpenAI is not supported for HIPAA organizations with a Business Associate Agreement (BAA) with Datadog.

Connect your Azure OpenAI account to LLM Observability with your Azure OpenAI API key. We strongly recommend using the GPT-4o mini model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Azure OpenAI tile.

- Follow the instructions on the tile.

- Provide your Azure OpenAI API key. Ensure that this key has write permission for model capabilities.

- Provide the Resource Name, Deployment ID, and API version to complete integration.

Anthropic is not supported for HIPAA organizations with a Business Associate Agreement (BAA) with Datadog.

Connect your Anthropic account to LLM Observability with your Anthropic API key. LLM Observability uses the Haiku model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Anthropic tile.

- Follow the instructions on the tile.

- Provide your Anthropic API key. Ensure that this key has write permission for model capabilities.

Bedrock is not supported for HIPAA organizations with a Business Associate Agreement (BAA) with Datadog.

Connect your Amazon Bedrock account to LLM Observability with your AWS account. LLM Observability uses the Haiku model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Amazon Bedrock tile.

- Follow the instructions on the tile.

If your LLM provider restricts IP addresses, you can obtain the required IP ranges by visiting Datadog’s IP ranges documentation, selecting your Datadog Site, pasting the GET URL into your browser, and copying the webhooks section.
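The manual steps above can also be scripted. The following is a minimal sketch, assuming the IP ranges document is JSON with a `webhooks` object that holds `prefixes_ipv4` and `prefixes_ipv6` lists, and assuming the US1 endpoint URL; other Datadog sites publish their own URL. The helper names and the sample addresses are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumption: this is the US1 endpoint; use the GET URL listed for your Datadog site.
IP_RANGES_URL = "https://ip-ranges.datadoghq.com/"


def webhook_prefixes(ip_ranges: dict) -> list:
    """Return the IPv4 and IPv6 CIDR prefixes from the 'webhooks' section."""
    section = ip_ranges.get("webhooks", {})
    return section.get("prefixes_ipv4", []) + section.get("prefixes_ipv6", [])


def fetch_ip_ranges(url: str = IP_RANGES_URL) -> dict:
    """Download and parse the IP ranges document (requires network access)."""
    with urlopen(url, timeout=10) as response:
        return json.load(response)


# Offline sample with the assumed shape; the addresses are documentation placeholders.
sample = {
    "webhooks": {
        "prefixes_ipv4": ["192.0.2.0/24"],
        "prefixes_ipv6": ["2001:db8::/32"],
    }
}
print(webhook_prefixes(sample))
```

To use live data, call `webhook_prefixes(fetch_ip_ranges())` and add the returned ranges to your provider's allowlist.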

Select and enable evaluations

- Navigate to LLM Observability > Settings > Evaluations.

- Click on the evaluation you want to enable.

- Configure an evaluation for all of your LLM applications by selecting Configure Evaluation, or select the edit icon to configure the evaluation for an individual LLM application.

- Evaluations can be disabled by selecting the disable icon for an individual LLM application.

- If you chose Configure Evaluation, select the LLM application(s) you want to configure your evaluation for.

- Select OpenAI, Azure OpenAI, Anthropic, or Amazon Bedrock as your LLM provider and choose an account.

- Configure the data to run the evaluation on:

- Select traces (the root span of each trace) or spans (LLM, Workflow, and Agent).

- If you selected spans, you must select at least one span name.

- (Optional) Specify any or all tags you want this evaluation to run on.

- (Optional) Select what percentage of spans you would like this evaluation to run on by configuring the sampling percentage. This number must be greater than 0 and less than or equal to 100 (sampling all spans).

- (Optional) Configure evaluation options by selecting what subcategories should be flagged. Only available on some evaluations.

After you click Save, LLM Observability uses the LLM account you connected to power the evaluation you enabled.
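The sampling percentage rule from the configuration steps above (greater than 0, at most 100) can be sketched as follows. This is only an illustration of the constraint and of what percentage-based sampling means. The function names are hypothetical, and how LLM Observability selects spans internally is not described on this page.

```python
import random


def validate_sampling_percentage(percentage: float) -> float:
    """Reject values outside the documented range: greater than 0, at most 100."""
    if not 0 < percentage <= 100:
        raise ValueError("sampling percentage must be > 0 and <= 100")
    return percentage


def should_evaluate(percentage: float, rng: random.Random) -> bool:
    """Illustrative random sampling: keeps roughly `percentage` percent of spans."""
    # rng.random() is in [0, 1), so a percentage of 100 always returns True.
    return rng.random() * 100 < validate_sampling_percentage(percentage)
```

With this sketch, a value of 100 evaluates every span, and a value of 25 evaluates about one span in four.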

Estimated token usage

You can monitor the token usage of your BYOK out-of-the-box evaluations using this dashboard.

If you need more details, the following metrics allow you to track the LLM resources consumed to power evaluations:

- ml_obs.estimated_usage.llm.input.tokens

- ml_obs.estimated_usage.llm.output.tokens

- ml_obs.estimated_usage.llm.total.tokens

Each of these metrics has ml_app, model_server, model_provider, model_name, and evaluation_name tags, allowing you to pinpoint specific applications, models, and evaluations contributing to your usage.
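As an illustration of slicing usage by these tags, the sketch below builds Datadog metric query strings of the form `sum:<metric>{<tag filters>} by {<tag keys>}`. The helper name `usage_query` and the example application name `support-bot` are hypothetical. The metric and tag names are the ones listed above.

```python
METRICS = (
    "ml_obs.estimated_usage.llm.input.tokens",
    "ml_obs.estimated_usage.llm.output.tokens",
    "ml_obs.estimated_usage.llm.total.tokens",
)
TAGS = {"ml_app", "model_server", "model_provider", "model_name", "evaluation_name"}


def usage_query(metric: str, filters: dict, group_by=()) -> str:
    """Build a metric query string filtered and grouped by the documented tags."""
    unknown = (set(filters) | set(group_by)) - TAGS
    if metric not in METRICS or unknown:
        raise ValueError(f"unsupported metric or tags: {metric}, {sorted(unknown)}")
    # An empty filter set means "all sources", written as {*}.
    scope = ",".join(f"{key}:{value}" for key, value in sorted(filters.items())) or "*"
    query = f"sum:{metric}{{{scope}}}"
    if group_by:
        query += " by {" + ",".join(group_by) + "}"
    return query


print(usage_query(METRICS[2], {"ml_app": "support-bot"}, ["evaluation_name"]))
```

A query like this can be pasted into a dashboard widget or monitor to see which evaluations consume the most tokens for one application.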

Quality evaluations

Topic relevancy

This check identifies and flags user inputs that deviate from the configured acceptable input topics. This ensures that interactions stay pertinent to the LLM’s designated purpose and scope.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input | Evaluated using LLM | Topic relevancy assesses whether each prompt-response pair remains aligned with the intended subject matter of the Large Language Model (LLM) application. For instance, an e-commerce chatbot receiving a question about a pizza recipe would be flagged as irrelevant. |

You can provide topics for this evaluation.

- Go to LLM Observability > Applications.

- Select the application you want to add topics for.

- At the right corner of the top panel, select Settings.

- Beside Topic Relevancy, click Configure Evaluation.

- Click the Edit Evaluations icon for Topic Relevancy.

- Add topics on the configuration page.

Topics can contain multiple words and should be as specific and descriptive as possible. For example, for an LLM application that was designed for incident management, add “observability”, “software engineering”, or “incident resolution”. If your application handles customer inquiries for an e-commerce store, you can use “Customer questions about purchasing furniture on an e-commerce store”.

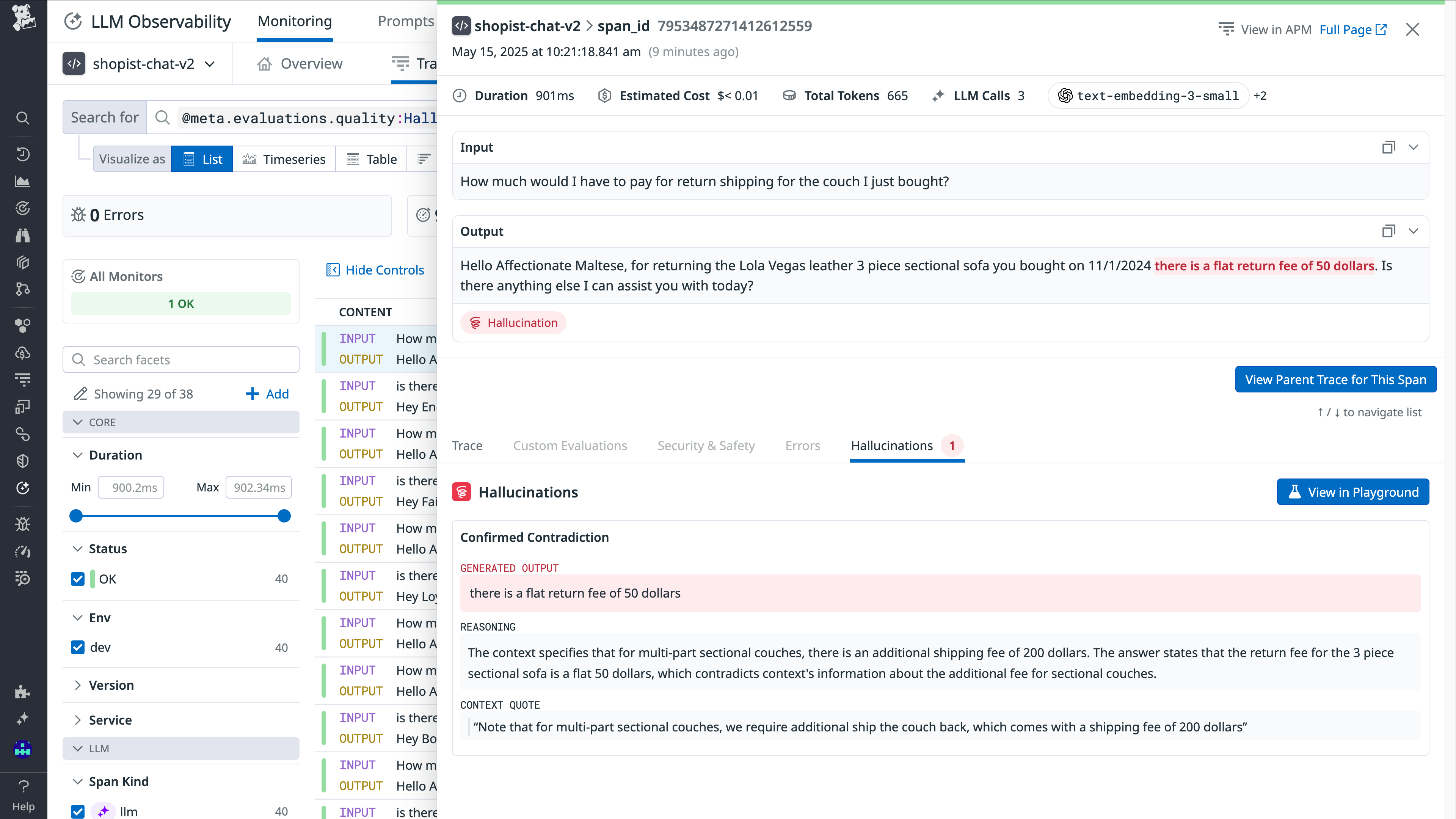

Hallucination

This check identifies instances where the LLM makes a claim that disagrees with the provided input context.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Output | Evaluated using LLM | Hallucination flags any output that disagrees with the context provided to the LLM. |

Instrumentation

To use Hallucination detection, annotate LLM spans with the user query and context:

```python
from ddtrace.llmobs import LLMObs
from ddtrace.llmobs.decorators import llm
from ddtrace.llmobs.utils import Prompt

# user_question, article, and oai_client are defined elsewhere in your application.

# If your LLM call is auto-instrumented...
with LLMObs.annotation_context(
    prompt=Prompt(
        variables={"user_question": user_question, "article": article},
        rag_query_variables=["user_question"],
        rag_context_variables=["article"],
    ),
    name="generate_answer",
):
    oai_client.chat.completions.create(...)  # auto-instrumented LLM call

# If your LLM call is manually instrumented...
@llm(name="generate_answer")
def generate_answer():
    ...
    LLMObs.annotate(
        prompt=Prompt(
            variables={"user_question": user_question, "article": article},
            rag_query_variables=["user_question"],
            rag_context_variables=["article"],
        ),
    )
```

The variables dictionary should contain the key-value pairs your app uses to construct the LLM input prompt (for example, the messages for an OpenAI chat completion request). Set rag_query_variables and rag_context_variables to indicate which variables constitute the query and the context, respectively. A list of variables is allowed to account for cases where multiple variables make up the context (for example, multiple articles retrieved from a knowledge base).

Hallucination detection does not run if any of the RAG query, the RAG context, or the span output is empty.

You can find more examples of instrumentation in the SDK documentation.
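Because the evaluation is skipped when the query, context, or output is empty, it can help to check these values locally before annotating a span. The sketch below is a hypothetical pre-check that mirrors that rule for a prompt with two context variables. It is not part of the ddtrace SDK, and the variable names `article_1` and `article_2` are illustrative.

```python
def hallucination_inputs_present(variables, rag_query_variables,
                                 rag_context_variables, output):
    """Return True only if the query, the context, and the output are all non-empty."""

    def joined(names):
        # Concatenate the named variables; missing keys count as empty text.
        return "".join(str(variables.get(name, "")) for name in names).strip()

    return bool(
        joined(rag_query_variables)
        and joined(rag_context_variables)
        and output.strip()
    )


variables = {
    "user_question": "When was the bridge built?",
    "article_1": "The bridge opened in 1932.",
    "article_2": "Construction started in 1929.",
}
ready = hallucination_inputs_present(
    variables,
    rag_query_variables=["user_question"],
    rag_context_variables=["article_1", "article_2"],
    output="The bridge opened in 1932.",
)
print(ready)
```

The same `variables`, `rag_query_variables`, and `rag_context_variables` values would then be passed to `Prompt` as in the instrumentation example.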

Hallucination configuration

Hallucination detection makes a distinction between two types of hallucinations, which can be configured when Hallucination is enabled.

| Configuration Option | Description |
|---|---|
| Contradiction | Claims made in the LLM-generated response that go directly against the provided context |
| Unsupported Claim | Claims made in the LLM-generated response that are not grounded in the context |

Contradictions are always detected, while Unsupported Claims can be optionally included. For sensitive use cases, we recommend including Unsupported Claims.
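The relationship between the two options can be sketched as a small decision rule. This is only an illustration of the configuration semantics described above. The class, field, and label names are hypothetical and do not correspond to a Datadog API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HallucinationConfig:
    # Contradiction has no switch in this sketch because it is always detected.
    include_unsupported_claims: bool = False


def is_flagged(finding: str, config: HallucinationConfig) -> bool:
    """Decide whether a finding type is reported under the given configuration."""
    if finding == "contradiction":
        return True
    if finding == "unsupported_claim":
        return config.include_unsupported_claims
    return False


strict = HallucinationConfig(include_unsupported_claims=True)
print(is_flagged("unsupported_claim", strict))
```

With the default configuration only contradictions are reported. The stricter configuration also reports claims that are not grounded in the context.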

Hallucination detection is only available for OpenAI.

Failure to Answer

This check identifies instances where the LLM fails to deliver an appropriate response, which may occur due to limitations in the LLM’s knowledge or understanding, ambiguity in the user query, or the complexity of the topic.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Output | Evaluated using LLM | Failure To Answer flags whether each prompt-response pair demonstrates that the LLM application has provided a relevant and satisfactory answer to the user’s question. |

Failure to Answer Configuration

You can configure the evaluation by selecting what types of answers should be considered Failure to Answer. This feature is only available if OpenAI or Azure OpenAI is selected for the LLM provider.

| Configuration Option | Description | Example(s) |
|---|---|---|
| Empty Code Response | An empty code object, like an empty list or tuple, signifying no data or results | (), [], {}, "", '' |
| Empty Response | No meaningful response, returning only whitespace | whitespace |
| No Content Response | An empty output accompanied by a message indicating no content is available | Not found, N/A |
| Redirection Response | Redirects the user to another source or suggests an alternative approach | If you have additional details, I’d be happy to include them |
| Refusal Response | Explicitly declines to provide an answer or to complete the request | Sorry, I can’t answer this question |
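To make the first three categories concrete, the sketch below matches a response against the literal examples from the table. It is a hypothetical string-matching illustration only. The actual evaluation is performed by an LLM, and the Redirection and Refusal categories are not covered because they depend on meaning rather than exact text.

```python
from typing import Optional

# Literal examples taken from the table; real responses vary far more widely.
EMPTY_CODE_LITERALS = {"()", "[]", "{}", '""', "''"}
NO_CONTENT_MESSAGES = {"not found", "n/a"}


def classify_simple_failure(response: str) -> Optional[str]:
    """Return a table category for trivially recognizable responses, else None."""
    stripped = response.strip()
    if stripped == "":
        return "Empty Response"
    if stripped in EMPTY_CODE_LITERALS:
        return "Empty Code Response"
    if stripped.lower() in NO_CONTENT_MESSAGES:
        return "No Content Response"
    return None


for text in ["   ", "[]", "N/A", "Paris is the capital of France."]:
    print(repr(text), "->", classify_simple_failure(text))
```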

Language Mismatch

This check identifies instances where the LLM generates responses in a different language or dialect than the one used by the user, which can lead to confusion or miscommunication. This check ensures that the LLM’s responses are clear, relevant, and appropriate for the user’s linguistic preferences and needs.

Language mismatch is only supported for natural language prompts. Input and output pairs that mainly consist of structured data such as JSON, code snippets, or special characters are not flagged as a language mismatch.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Evaluated using Open Source Model | Language Mismatch flags whether each prompt-response pair demonstrates that the LLM application answered the user’s question in the same language that the user used. |

Sentiment

This check helps understand the overall mood of the conversation, gauge user satisfaction, identify sentiment trends, and interpret emotional responses. This check accurately classifies the sentiment of the text, providing insights to improve user experiences and tailor responses to better meet user needs.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Evaluated using LLM | Sentiment flags the emotional tone or attitude expressed in the text, categorizing it as positive, negative, or neutral. |

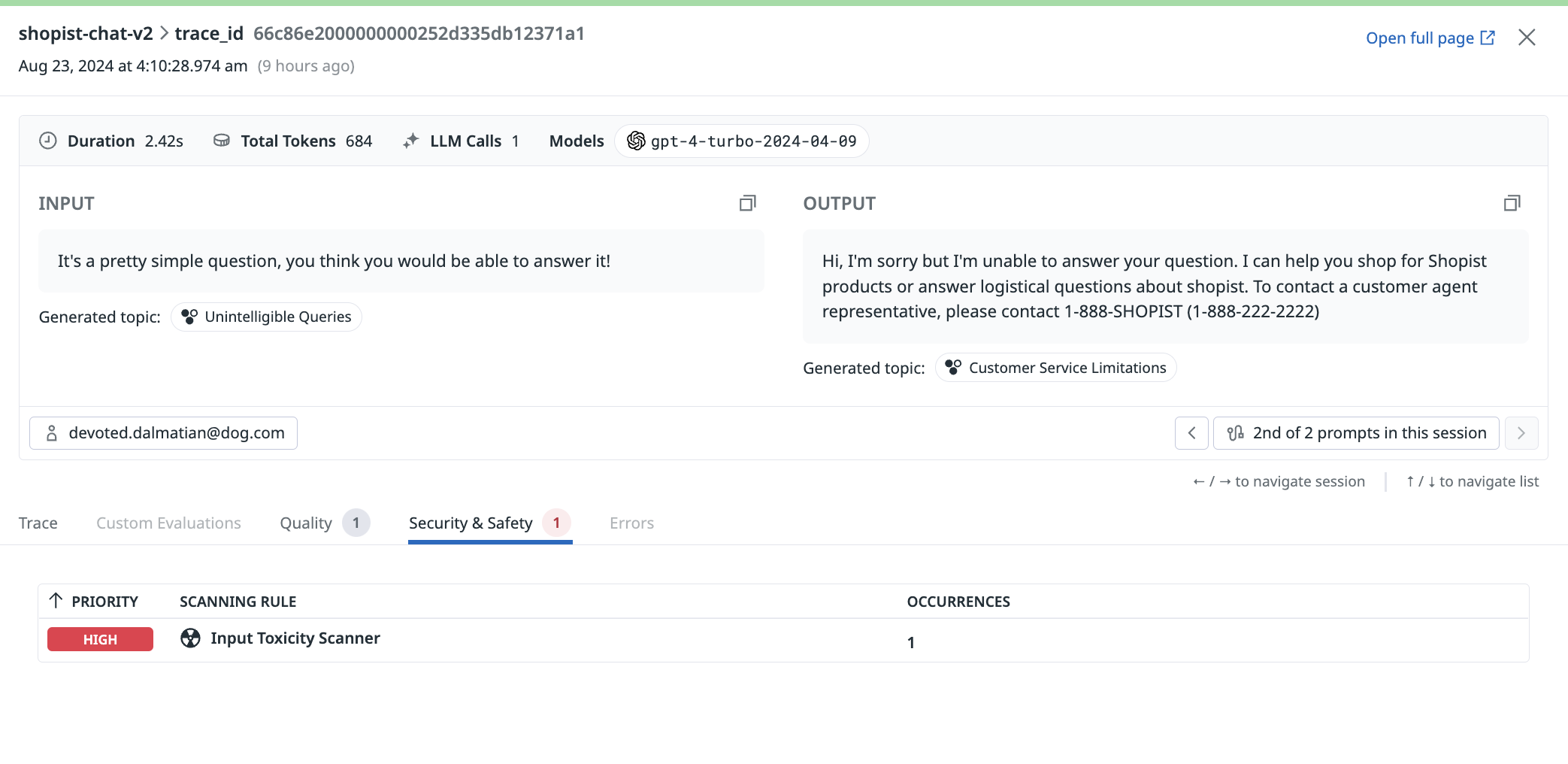

Security and Safety evaluations

Toxicity

This check evaluates each input prompt from the user and the response from the LLM application for toxic content. This check identifies and flags toxic content to ensure that interactions remain respectful and safe.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Evaluated using LLM | Toxicity flags any language or behavior that is harmful, offensive, or inappropriate, including but not limited to hate speech, harassment, threats, and other forms of harmful communication. |

Prompt Injection

This check identifies attempts by unauthorized or malicious authors to manipulate the LLM’s responses or redirect the conversation in ways not intended by the original author. This check maintains the integrity and authenticity of interactions between users and the LLM.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input | Evaluated using LLM | Prompt Injection flags any unauthorized or malicious insertion of prompts or cues into the conversation by an external party or user. |

Sensitive Data Scanning

This check ensures that sensitive information is handled appropriately and securely, reducing the risk of data breaches or unauthorized access.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Sensitive Data Scanner | Powered by the Sensitive Data Scanner, LLM Observability scans, identifies, and redacts sensitive information within every LLM application’s prompt-response pairs. This includes personal information, financial data, health records, or any other data that requires protection due to privacy or security concerns. |