Set Up Pipelines

Overview

The pipelines and processors outlined in this documentation are specific to on-premises logging environments. To aggregate, process, and route cloud-based logs, see Log Management Pipelines.

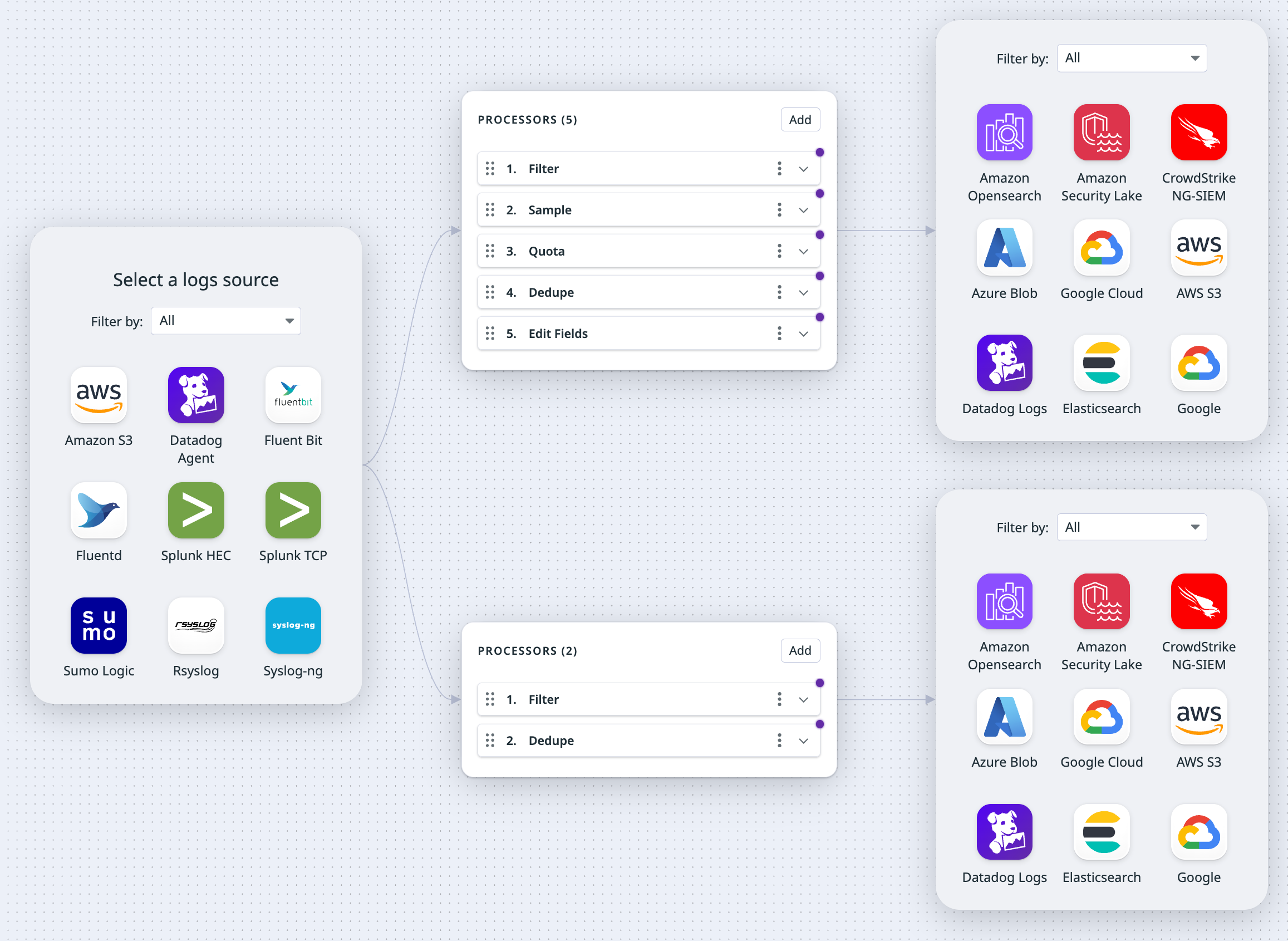

In Observability Pipelines, a pipeline is a sequential path with three types of components: source, processors, and destinations. The Observability Pipeline source receives logs from your log source (for example, the Datadog Agent). The processors enrich and transform your data, and the destination is where your processed logs are sent.
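The source, processors, and destinations flow described above can be pictured with a small conceptual model. This is only a sketch for illustration, not how the Observability Pipelines Worker is implemented: the `Pipeline` class, the dictionary-shaped log, and the two processor functions are hypothetical names that do not come from the product.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

Log = dict  # assumption: a log event modeled as a plain dictionary
Processor = Callable[[Log], Log]


@dataclass
class Pipeline:
    """Toy model: one source, ordered processors, one or more destinations."""
    source: Iterable[Log]
    processors: list[Processor] = field(default_factory=list)
    destinations: list[list[Log]] = field(default_factory=list)

    def run(self) -> None:
        for log in self.source:
            # Processors run in order; each receives the previous one's output.
            for process in self.processors:
                log = process(log)
            # Every destination receives its own copy of the processed log.
            for destination in self.destinations:
                destination.append(dict(log))


def add_env_field(log: Log) -> Log:
    """Hypothetical enrichment step: attach an environment field."""
    return {**log, "env": "prod"}


def uppercase_status(log: Log) -> Log:
    """Hypothetical transform step: normalize the status field."""
    return {**log, "status": log.get("status", "info").upper()}


archive: list[Log] = []  # stands in for a destination
pipeline = Pipeline(
    source=[{"message": "disk full", "status": "error"}],
    processors=[add_env_field, uppercase_status],
    destinations=[archive],
)
pipeline.run()
print(archive)
```

The point of the model is the ordering: logs enter once through the source, pass through every processor in sequence, and only the processed result reaches the destinations.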

Set up a pipeline

Set up your pipeline and its source, processors, and destinations in the Observability Pipelines UI.

- Navigate to Observability Pipelines.

- Select a template.

- Select and set up your source.

- Select and set up your destinations.

- Set up your processors.

- To add another set of processors and destinations to the source, click the plus sign (+) to the left of the processor group.

- To delete a processor group, you need to delete all destinations linked to that processor group. When the last destination is deleted, the processor group is removed with it.

- To add another destination to a processor group, click the plus sign (+) to the right of the processor group.

- To delete a destination, click the pencil icon at the top right of the destination, and select Delete destination. If you delete a destination from a processor group that has multiple destinations, only the deleted destination is removed. If you delete a destination from a processor group that only has one destination, both the destination and the processor group are removed.

- Notes:

- A pipeline must have at least one destination. If a processor group only has one destination, that destination cannot be deleted.

- You can add a total of three destinations for a pipeline.

- A specific destination can only be added once. For example, you cannot add multiple Splunk HEC destinations.

- Click Next: Install.

- Select the platform on which you want to install the Worker.

- Enter the environment variables for your sources and destinations, if applicable.

- Follow the instructions on installing the Worker for your platform. The command provided in the UI to install the Worker has the relevant environment variables populated. See Install the Observability Pipelines Worker for more information.

- Note: If you are using a proxy, see the proxy option in Bootstrap options.

- Enable out-of-the-box monitors for your pipeline.

- Navigate to the Pipelines page and find your pipelines.

- Click Enable monitors in the Monitors column for your pipeline.

- Click Start to set up a monitor for one of the suggested use cases.

The new metric monitor page is configured based on the use case you selected. You can update the configuration to further customize it. See the Metric monitor documentation for more information.

After you have set up your pipeline, see Update Existing Pipelines if you want to make any changes to it.
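The destination rules listed in the notes of the setup steps can be summarized as a small validation sketch. It is an illustration of the rules as stated, not Datadog code, and it rests on two assumptions: that the "added once" rule applies across the whole pipeline, and that the last remaining destination of a pipeline is the one that cannot be deleted. The `PipelineDraft` class, the group names, and the destination labels are hypothetical.

```python
MAX_DESTINATIONS = 3  # "a total of three destinations for a pipeline"


class PipelineDraft:
    """Toy model of processor groups and their destinations."""

    def __init__(self) -> None:
        # Maps a processor group name to the destination types attached to it.
        self.groups: dict[str, list[str]] = {}

    def all_destinations(self) -> list[str]:
        return [d for dests in self.groups.values() for d in dests]

    def add_destination(self, group: str, destination: str) -> None:
        existing = self.all_destinations()
        if len(existing) >= MAX_DESTINATIONS:
            raise ValueError("a pipeline can have at most three destinations")
        if destination in existing:
            raise ValueError(f"{destination} is already in this pipeline")
        self.groups.setdefault(group, []).append(destination)

    def delete_destination(self, group: str, destination: str) -> None:
        if len(self.all_destinations()) == 1:
            raise ValueError("a pipeline must have at least one destination")
        self.groups[group].remove(destination)
        # Deleting a group's last destination removes the group with it.
        if not self.groups[group]:
            del self.groups[group]


draft = PipelineDraft()
draft.add_destination("group-a", "splunk_hec")
draft.add_destination("group-a", "datadog_logs")
draft.add_destination("group-b", "amazon_s3")
draft.delete_destination("group-b", "amazon_s3")  # also removes group-b
print(draft.groups)
```

In this model, adding a second `splunk_hec` destination or a fourth destination raises a `ValueError`, which mirrors the limits the UI enforces.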

Creating pipelines using the Datadog API is in Preview. Fill out the form to request access.

You can use the Observability Pipelines API to create a pipeline. After the pipeline has been created, install the Worker to start sending logs through the pipeline.

Note: Pipelines created using the API are read-only in the UI. Use the update a pipeline endpoint to make any changes to an existing pipeline.

Creating pipelines using Terraform is in Preview. Fill out the form to request access.

You can use the datadog_observability_pipeline module to create a pipeline using Terraform. After the pipeline has been created, install the Worker to start sending logs through the pipeline.

Pipelines created using Terraform are read-only in the UI. Use the datadog_observability_pipeline module to make any changes to an existing pipeline.

See Advanced Configurations for bootstrapping options.

Index your Worker logs

Make sure your Worker logs are indexed in Log Management for optimal functionality. The logs provide the deployment information shown in the UI, such as Worker status, version, and any errors. The logs are also helpful for troubleshooting Worker or pipeline issues. All Worker logs have the tag source:op_worker.
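As a rough illustration of how the source:op_worker tag helps with troubleshooting, the sketch below picks Worker errors out of a mixed batch of log records. The record shape (a `tags` list plus `status` and `message` fields) and the sample messages are assumptions made for this example, not the format of any Datadog export.

```python
WORKER_TAG = "source:op_worker"  # tag carried by all Worker logs


def worker_errors(logs: list[dict]) -> list[dict]:
    """Return the Worker logs whose status is "error"."""
    return [
        log for log in logs
        if WORKER_TAG in log.get("tags", []) and log.get("status") == "error"
    ]


# Hypothetical sample records for demonstration only.
sample = [
    {"tags": ["source:op_worker"], "status": "info", "message": "Worker started"},
    {"tags": ["source:op_worker"], "status": "error", "message": "Sink unreachable"},
    {"tags": ["source:nginx"], "status": "error", "message": "502 upstream"},
]
for log in worker_errors(sample):
    print(log["message"])
```

The same filter idea applies when searching in Log Management: narrowing to the Worker tag first keeps unrelated application errors out of the results.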

Clone a pipeline

- Navigate to Observability Pipelines.

- Select the pipeline you want to clone.

- Click the cog at the top right side of the page, then select Clone.

Delete a pipeline

- Navigate to Observability Pipelines.

- Select the pipeline you want to delete.

- Click the cog at the top right side of the page, then select Delete.

Note: You cannot delete an active pipeline. You must stop all Workers for a pipeline before you can delete it.
