
Set up LLM Observability


Overview

To start sending data to LLM Observability, instrument your application with the LLM Observability SDK for Python or by calling the LLM Observability API.

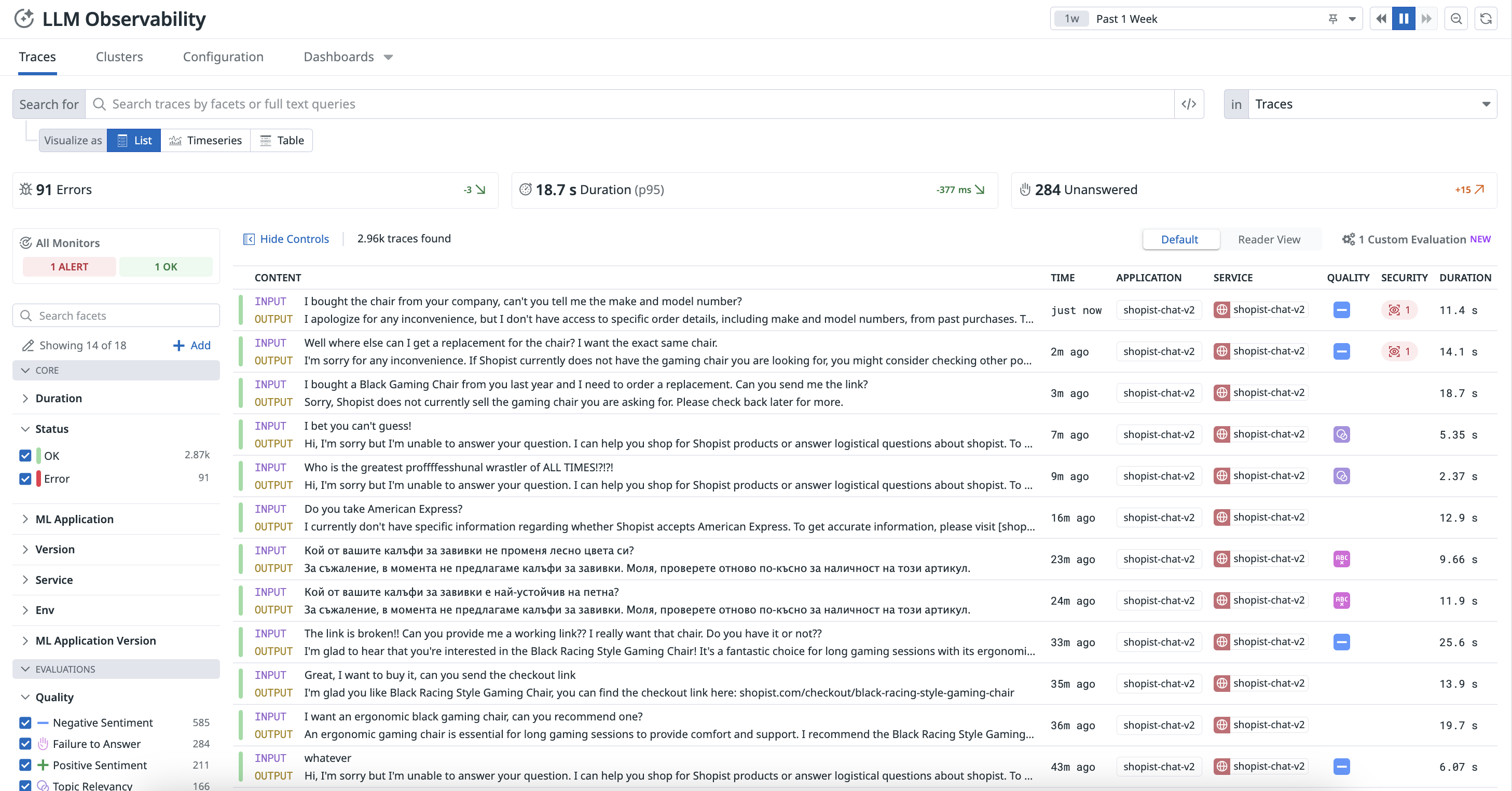

You can visualize the interactions and performance data of your LLM applications on the LLM Observability Traces page, where each request fulfilled by your application is represented as a trace.

For more information about traces, see Terms and Concepts, then decide which instrumentation option best suits your application’s needs.

Instrument an LLM application

Datadog provides auto-instrumentation to capture LLM calls for specific LLM provider libraries. However, manually instrumenting your LLM application using the LLM Observability SDK for Python enables access to additional LLM Observability features.

These instructions use the LLM Observability SDK for Python. If your application is running in a serverless environment, follow the serverless setup instructions. If your application is not written in Python, you can complete the steps below with API requests instead of SDK function calls.

To instrument an LLM application:

- Install the LLM Observability SDK for Python.

- Configure the SDK by providing the required environment variables in your application startup command, or programmatically in code. Ensure you have configured your Datadog API key, Datadog site, and machine learning (ML) app name.
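As a minimal sketch of the configuration step, the helper below checks that the three required settings are present before the application starts. It uses only the standard library and does not call the SDK. The environment variable names (DD_API_KEY, DD_SITE, DD_LLMOBS_ML_APP) are assumptions based on the SDK's command-line setup, and the function name is hypothetical; confirm the names against the SDK documentation for your ddtrace version.

```python
import os
from typing import List, Mapping

# Assumed variable names for the API key, Datadog site, and ML app name.
# Verify them against the SDK documentation before relying on this check.
REQUIRED_SETTINGS = ("DD_API_KEY", "DD_SITE", "DD_LLMOBS_ML_APP")


def missing_llmobs_settings(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required settings that are unset or blank in env."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]
```

Calling missing_llmobs_settings() at startup and failing fast when the list is non-empty surfaces a misconfiguration before any traces are silently dropped.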

Trace an LLM application

To trace an LLM application:

Create spans in your LLM application code to represent your application’s operations. For more information about spans, see Terms and Concepts.

You can nest spans to create more useful traces. For additional examples and detailed usage, see Trace an LLM Application and the SDK documentation.

Annotate your spans with input data, output data, metadata (such as temperature), metrics (such as input_tokens), and key-value tags (such as version:1.0.0).

Optionally, add advanced tracing features, such as user sessions.

Run your LLM application.

- If you used the command-line setup method, the command to run your application should use ddtrace-run, as described in those instructions.

- If you used the in-code setup method, run your application as you normally would.

You can access the resulting traces in the Traces tab on the LLM Observability Traces page and the resulting metrics in the out-of-the-box LLM Observability Overview dashboard.

Creating spans

To create a span, the LLM Observability SDK provides two options: using a function decorator or using a context manager inline.

Using a function decorator is the preferred method. Using a context manager is more advanced and allows more fine-grained control over tracing.

- Decorators: Use ddtrace.llmobs.decorators.<SPAN_KIND>() as a decorator on the function you’d like to trace, replacing <SPAN_KIND> with the desired span kind.

- Inline: Use ddtrace.llmobs.LLMObs.<SPAN_KIND>() as a context manager to trace any inline code, replacing <SPAN_KIND> with the desired span kind.
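To illustrate the difference in granularity between the two options, here is a standard-library sketch that does not use the SDK. The span helper and the recorded list are hypothetical stand-ins: the decorator form wraps an entire function call, while the inline form wraps only the block you choose.

```python
from contextlib import contextmanager

recorded = []  # (event, span name) tuples in order; stands in for a trace backend


@contextmanager
def span(name):
    """Hypothetical stand-in for a span: records when it starts and ends."""
    recorded.append(("start", name))
    try:
        yield
    finally:
        recorded.append(("end", name))


@span("extract_data")  # decorator form: the whole function call is one span
def extract_data(document):
    return document.upper()


def summarize(document):
    prefix = document[:5]  # runs outside the span
    with span("summarize"):  # inline form: only this block is inside the span
        return prefix + "..."
```

The decorator form keeps tracing out of the function body, which is one reason it is the simpler default. The inline form is useful when only part of a function should be measured.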

The examples below create a workflow span.

Decorators:

    from ddtrace.llmobs.decorators import workflow

    @workflow
    def extract_data(document):
        ... # LLM-powered workflow that extracts structured data from a document
        return

Inline:

    from ddtrace.llmobs import LLMObs

    def extract_data(document):
        with LLMObs.workflow(name="extract_data") as span:
            ... # LLM-powered workflow that extracts structured data from a document
        return

Annotating spans

To add extra information to a span such as inputs, outputs, metadata, metrics, or tags, use the LLM Observability SDK’s LLMObs.annotate() method.

The examples below annotate the workflow span created in the example above:

Decorators:

    from ddtrace.llmobs import LLMObs
    from ddtrace.llmobs.decorators import workflow

    @workflow
    def extract_data(document: str, generate_summary: bool):
        extracted_data = ... # user application logic
        LLMObs.annotate(
            input_data=document,
            output_data=extracted_data,
            metadata={"generate_summary": generate_summary},
            tags={"env": "dev"},
        )
        return extracted_data

Inline:

    from ddtrace.llmobs import LLMObs

    def extract_data(document: str, generate_summary: bool):
        with LLMObs.workflow(name="extract_data") as span:
            ... # user application logic
            extracted_data = ... # user application logic
            LLMObs.annotate(
                input_data=document,
                output_data=extracted_data,
                metadata={"generate_summary": generate_summary},
                tags={"env": "dev"},
            )
            return extracted_data

Nesting spans

Starting a new span before the current span is finished automatically traces a parent-child relationship between the two spans. The parent span represents the larger operation, while the child span represents a smaller nested sub-operation within it.

The examples below create a trace with two spans.

Decorators:

    from ddtrace.llmobs.decorators import task, workflow

    @workflow
    def extract_data(document):
        preprocess_document(document)
        ... # performs data extraction on the document
        return

    @task
    def preprocess_document(document):
        ... # preprocesses a document for data extraction
        return

Inline:

    from ddtrace.llmobs import LLMObs

    def extract_data():
        with LLMObs.workflow(name="extract_data") as workflow_span:
            with LLMObs.task(name="preprocess_document") as task_span:
                ... # preprocesses a document for data extraction
            ... # performs data extraction on the document
        return

For more information on alternative tracing methods and tracing features, see the SDK documentation.

Advanced tracing

Depending on the complexity of your LLM application, you can also:

- Track user sessions by specifying a session_id.

- Persist a span between contexts or scopes by manually starting and stopping it.

- Track multiple LLM applications when starting a new trace, which can be useful for differentiating between services or running multiple experiments.

- Submit custom evaluations, such as feedback from the users of your LLM application (for example, a rating from 1 to 5), with the SDK or the API.
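Before submitting user feedback as a custom evaluation, it can help to validate it locally. The sketch below checks a rating from 1 to 5 and wraps it in a plain dictionary. The function name, the default label, and the record's field names are hypothetical and do not represent the SDK's or the API's payload format; consult the SDK or API documentation for the actual submission call.

```python
def rating_to_evaluation(rating, label="user_rating"):
    """Validate a 1-5 user rating and wrap it in an illustrative record."""
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(rating, bool) or not isinstance(rating, int):
        raise TypeError("rating must be an integer")
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    # Hypothetical record shape, used here only for illustration.
    return {"label": label, "metric_type": "score", "value": rating}
```

Rejecting out-of-range values at the edge of your application keeps malformed feedback out of the evaluation data.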

Permissions

By default, only users with the Datadog Read role can view LLM Observability. For more information, see the Permissions documentation.