
Send Amazon EKS Fargate Logs with Amazon Data Firehose

Overview

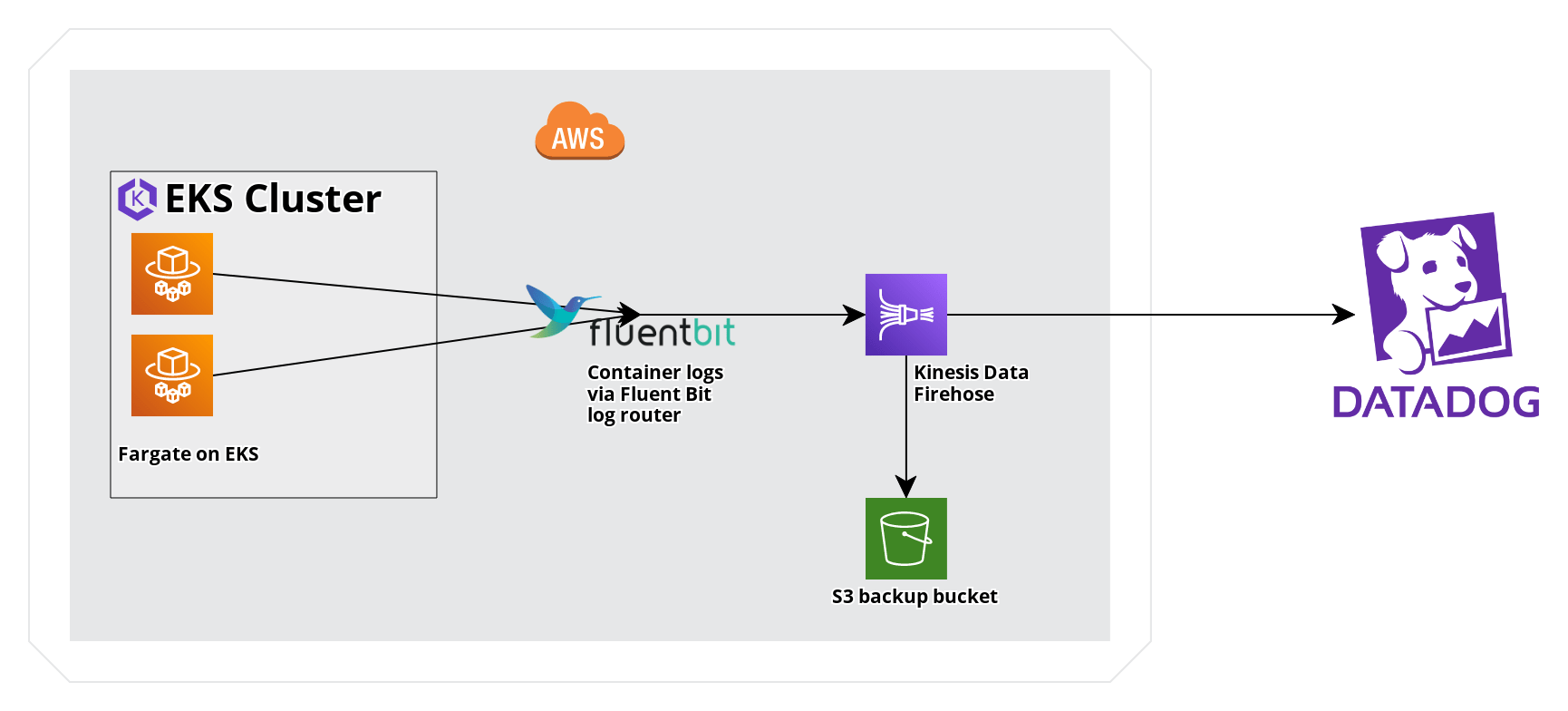

AWS Fargate on EKS provides a fully managed experience for running Kubernetes workloads. You can use Amazon Data Firehose with the EKS Fluent Bit log router to send logs to Datadog. This guide compares log forwarding through Amazon Data Firehose with forwarding through CloudWatch Logs, and walks through a sample EKS Fargate application that sends logs to Datadog through Amazon Data Firehose.

Amazon Data Firehose and CloudWatch log forwarding

The following are key differences between using Amazon Data Firehose and CloudWatch log forwarding.

- Metadata and tagging: Metadata, such as the Kubernetes namespace and container ID, is accessible as structured attributes when you send logs with Amazon Data Firehose.

- AWS costs: AWS costs vary by use case, but Amazon Data Firehose ingestion is generally less expensive than comparable CloudWatch Logs ingestion.

Requirements

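- The `kubectl` and `aws` command line tools.

- An EKS cluster with a Fargate profile and a Fargate pod execution role. This guide assumes the cluster is named `fargate-cluster` and has a Fargate profile named `fargate-profile` applied to the `fargate-namespace` namespace. If you don't have these resources yet, create the cluster by following Getting started with Amazon EKS, then create the Fargate profile and pod execution role by following Getting started with AWS Fargate using Amazon EKS.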
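Before you begin, you can confirm that both tools are installed. The following is a minimal POSIX shell sketch; the `require_tools` helper is hypothetical and only checks that each command is on your `PATH`, not that it is configured with credentials or a cluster context.

```shell
#!/bin/sh
# Hypothetical helper: report which of the given command line tools are
# missing from PATH. Returns 0 when every tool is found, 1 otherwise.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check the two tools this guide relies on.
# require_tools kubectl aws && echo "kubectl and aws are installed"
```

Running `require_tools kubectl aws` prints each missing tool to standard error and returns a non-zero status if any of them is absent.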

Setup

The following steps outline the process for sending logs from a sample application deployed on an EKS cluster through Fluent Bit and an Amazon Data Firehose delivery stream to Datadog. To maximize consistency with standard Kubernetes tags in Datadog, instructions are included to remap selected attributes to tag keys.

- Create an Amazon Data Firehose delivery stream that delivers logs to Datadog, along with an S3 backup for any failed log deliveries.

- Configure Fluent Bit for Firehose on EKS Fargate.

- Deploy a sample application.

- Apply remapper processors for correlation through Kubernetes tags and the `container_id` tag.

Create an Amazon Data Firehose delivery stream

See the Send AWS Services Logs with the Datadog Amazon Data Firehose Destination guide to set up an Amazon Data Firehose delivery stream.

Note: Set the Source as Direct PUT.
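The delivery stream's ARN is needed later, both in the IAM policy and in the Log Explorer search. A minimal shell sketch that assembles it from its parts, assuming the `arn:aws:firehose:<REGION>:<ACCOUNTID>:deliverystream/<DELIVERY-STREAM-NAME>` format used in this guide and the standard `aws` partition; the `firehose_arn` helper and the example values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build an Amazon Data Firehose delivery stream ARN.
# Assumes the standard "aws" partition (not GovCloud or China regions).
#   $1 = region, $2 = AWS account ID, $3 = delivery stream name
firehose_arn() {
  region=$1
  account_id=$2
  stream_name=$3
  printf 'arn:aws:firehose:%s:%s:deliverystream/%s\n' \
    "$region" "$account_id" "$stream_name"
}

# Example with placeholder values:
# firehose_arn us-east-1 123456789012 my-datadog-stream
# prints arn:aws:firehose:us-east-1:123456789012:deliverystream/my-datadog-stream
```

For a stream that already exists, you can also read the ARN back with `aws firehose describe-delivery-stream --delivery-stream-name <NAME> --query 'DeliveryStreamDescription.DeliveryStreamARN' --output text`.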

Configure Fluent Bit for Firehose on an EKS Fargate cluster

- Create the `aws-observability` namespace.

```shell
kubectl create namespace aws-observability
```

- Create the following Kubernetes ConfigMap for Fluent Bit as `aws-logging-configmap.yaml`. Substitute your region and the name of your delivery stream.

For the new higher performance Kinesis Firehose plugin, use the plugin name `kinesis_firehose` instead of `amazon_data_firehose`.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-logging
  namespace: aws-observability
data:
  # Enrich container log records with Kubernetes metadata (pod, namespace, container).
  filters.conf: |
    [FILTER]
        Name                kubernetes
        Match               kube.*
        Merge_Log           On
        Buffer_Size         0
        Kube_Meta_Cache_TTL 300s
  flb_log_cw: 'true'  # Also ship Fluent Bit's own process logs to CloudWatch
  # Send the records to your Amazon Data Firehose delivery stream.
  output.conf: |
    [OUTPUT]
        Name            kinesis_firehose
        Match           kube.*
        region          <REGION>
        delivery_stream <YOUR-DELIVERY-STREAM-NAME>
```

- Use `kubectl` to apply the ConfigMap manifest.

```shell
kubectl apply -f aws-logging-configmap.yaml
```

- Create an IAM policy and attach it to the pod execution role so that the log router running on AWS Fargate can write to the Amazon Data Firehose delivery stream. You can use the example below, replacing the ARN in the `Resource` field with the ARN of your delivery stream and specifying your region and account ID.

allow_firehose_put_permission.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "firehose:PutRecord",
        "firehose:PutRecordBatch"
      ],
      "Resource": "arn:aws:firehose:<REGION>:<ACCOUNTID>:deliverystream/<DELIVERY-STREAM-NAME>"
    }
  ]
}
```

a. Create the policy.

```shell
aws iam create-policy \
  --policy-name FluentBitEKSFargate \
  --policy-document file://allow_firehose_put_permission.json
```

b. Retrieve the Fargate pod execution role and attach the IAM policy.

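```shell
# Look up the pod execution role ARN and keep the part after the first "/"
# (the role name, for a role created without a path).
POD_EXEC_ROLE=$(aws eks describe-fargate-profile \
  --cluster-name fargate-cluster \
  --fargate-profile-name fargate-profile \
  --query 'fargateProfile.podExecutionRoleArn' --output text | cut -d '/' -f 2)

# Attach the Firehose write policy created in step a to that role.
aws iam attach-role-policy \
  --policy-arn arn:aws:iam::<ACCOUNTID>:policy/FluentBitEKSFargate \
  --role-name "$POD_EXEC_ROLE"
```

Deploy the sample application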

To generate logs and test the Amazon Data Firehose delivery stream, deploy a sample workload to your EKS Fargate cluster.

- Create the deployment manifest `sample-deployment.yaml`.

sample-deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  namespace: fargate-namespace
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
```

- Create the `fargate-namespace` namespace.

```shell
kubectl create namespace fargate-namespace
```

- Use `kubectl` to apply the deployment manifest.

```shell
kubectl apply -f sample-deployment.yaml
```

Verification

- Verify that the `sample-app` pods are running in the `fargate-namespace` namespace.

```shell
kubectl get pods -n fargate-namespace
```

Expected output (the number of pods matches the `replicas` value in `sample-deployment.yaml`):

```
NAME                          READY   STATUS    RESTARTS   AGE
sample-app-6c8b449b8f-kq2qz   1/1     Running   0          3m56s
sample-app-6c8b449b8f-nn2w7   1/1     Running   0          3m56s
sample-app-6c8b449b8f-wzsjj   1/1     Running   0          3m56s
```

- Use `kubectl describe pod` to confirm that Fargate logging is enabled.

```shell
kubectl describe pod <POD-NAME> -n fargate-namespace | grep Logging
```

Expected output:

```
Logging: LoggingEnabled
Normal LoggingEnabled 5m fargate-scheduler Successfully enabled logging for pod
```

- Inspect the deployment logs.

```shell
kubectl logs -l app=nginx -n fargate-namespace
```

Expected output:

```
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2023/01/27 16:53:42 [notice] 1#1: using the "epoll" event method
2023/01/27 16:53:42 [notice] 1#1: nginx/1.23.3
2023/01/27 16:53:42 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)
2023/01/27 16:53:42 [notice] 1#1: OS: Linux 4.14.294-220.533.amzn2.x86_64
2023/01/27 16:53:42 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1024:65535
2023/01/27 16:53:42 [notice] 1#1: start worker processes
...
```

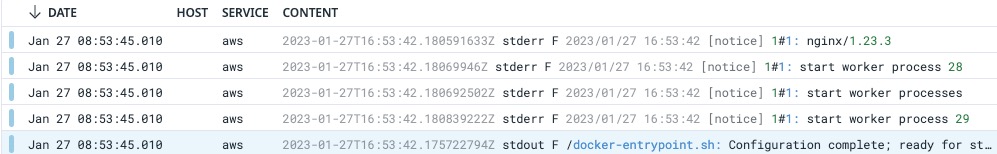

- Verify the logs are in Datadog. In the Datadog Log Explorer, search for `@aws.firehose.arn:"<ARN>"`, replacing `<ARN>` with your Amazon Data Firehose ARN, to filter for logs from the Amazon Data Firehose.
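If you want to script the pod checks instead of reading the output by eye, the sketch below parses the command output shown in the verification steps. It is a minimal POSIX shell example that assumes `kubectl get pods` prints its default table (a header row followed by `NAME READY STATUS RESTARTS AGE` columns); the helper names `all_pods_running` and `logging_enabled` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get pods" output on stdin and succeed only
# if there is at least one pod and every pod row is Running with READY n/n.
# Assumes the default header row is present.
all_pods_running() {
  awk '
    NR == 1 { next }                 # skip the header row
    NF == 0 { next }                 # ignore blank lines
    {
      rows++
      split($2, ready, "/")          # READY column, for example 1/1
      if ($3 != "Running" || ready[1] != ready[2]) bad++
    }
    END { exit (rows == 0 || bad > 0) }
  '
}

# Hypothetical helper: read "kubectl describe pod" output on stdin and succeed
# if it contains the Fargate logging annotation.
logging_enabled() {
  grep -q 'Logging: *LoggingEnabled'
}

# Usage against a live cluster:
# kubectl get pods -n fargate-namespace | all_pods_running && echo "pods OK"
# kubectl describe pod <POD-NAME> -n fargate-namespace | logging_enabled && echo "logging OK"
```

Parsing by whitespace-separated columns keeps the check independent of how `kubectl` pads the table.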

Remap attributes for log correlation

To maximize consistency with the standard Kubernetes tags in Datadog, logs from this configuration need some of their attributes remapped.

- Navigate to the Datadog Log Pipelines page.

- Create a new pipeline with the Name `EKS Fargate Log Pipeline` and the Filter `service:aws source:aws`.

- Create four Remapper processors to remap the following attributes to tag keys:

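| Attribute to remap | Target tag key |
|---|---|
| `kubernetes.container_name` | `kube_container_name` |
| `kubernetes.namespace_name` | `kube_namespace` |
| `kubernetes.pod_name` | `pod_name` |
| `kubernetes.docker_id` | `container_id` |

After you create this pipeline, logs emitted by the sample app are tagged with their log attributes remapped to Kubernetes tags, as in this example.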
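If you prefer to script the pipeline instead of creating it in the UI, the following is a hedged sketch against the Datadog Logs Pipelines API (`POST /api/v1/logs/config/pipelines`). It assumes the `attribute-remapper` processor fields `sources`, `source_type`, `target`, `target_type`, `preserve_source`, and `override_on_conflict`; verify them against the API reference for your Datadog site before use. The helper names `remapper`, `pipeline_payload`, and `create_pipeline`, and the `DD_SITE`, `DD_API_KEY`, and `DD_APP_KEY` variables, are hypothetical, and `preserve_source` is set to `true` as an assumption so that the original attribute is kept.

```shell
#!/bin/sh
# Hypothetical helper: emit one attribute-remapper processor (JSON) that copies
# a log attribute into a tag.  $1 = source attribute, $2 = target tag key.
remapper() {
  printf '{"type":"attribute-remapper","name":"Map %s to %s","is_enabled":true,' "$1" "$2"
  printf '"sources":["%s"],"source_type":"attribute",' "$1"
  printf '"target":"%s","target_type":"tag","preserve_source":true,"override_on_conflict":false}' "$2"
}

# Hypothetical helper: emit the pipeline body with the name and filter from this
# guide plus the four attribute-to-tag remappings from the table.
pipeline_payload() {
  printf '{"name":"EKS Fargate Log Pipeline","is_enabled":true,'
  printf '"filter":{"query":"service:aws source:aws"},"processors":['
  remapper kubernetes.container_name kube_container_name; printf ','
  remapper kubernetes.namespace_name kube_namespace; printf ','
  remapper kubernetes.pod_name pod_name; printf ','
  remapper kubernetes.docker_id container_id
  printf ']}\n'
}

# Hypothetical helper: POST the payload. Requires DD_API_KEY and DD_APP_KEY;
# DD_SITE defaults to datadoghq.com (change it for other Datadog sites).
create_pipeline() {
  pipeline_payload | curl -sS -X POST \
    "https://api.${DD_SITE:-datadoghq.com}/api/v1/logs/config/pipelines" \
    -H "Content-Type: application/json" \
    -H "DD-API-KEY: ${DD_API_KEY:?set DD_API_KEY}" \
    -H "DD-APPLICATION-KEY: ${DD_APP_KEY:?set DD_APP_KEY}" \
    --data-binary @-
}

# Usage: inspect the payload first, then create the pipeline.
# pipeline_payload
# create_pipeline
```

Building the payload in a separate function lets you review the exact JSON before anything is sent to Datadog.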

Further reading

Additional helpful documentation, links, and articles: