Tutorial - Enabling Tracing for a Python Application in a Container and an Agent on a Host

Overview

This tutorial walks you through the steps for enabling tracing on a sample Python application installed in a container. In this scenario, the Datadog Agent is installed on a host.

For other scenarios, including the application and Agent on a host, the application and the Agent in containers, and applications written in different languages, see the other Enabling Tracing tutorials.

See Tracing Python Applications for comprehensive tracing setup documentation for Python.

Prerequisites

- A Datadog account and organization API key

- Git

- Python that meets the tracing library requirements

Install the Agent

If you haven’t installed a Datadog Agent on your machine, go to Integrations > Agent and select your operating system. For example, on most Linux platforms, you can install the Agent by running the following script, replacing <YOUR_API_KEY> with your Datadog API key:

DD_AGENT_MAJOR_VERSION=7 DD_API_KEY=<YOUR_API_KEY> DD_SITE="datadoghq.com" bash -c "$(curl -L https://install.datadoghq.com/scripts/install_script_agent7.sh)"

To send data to a Datadog site other than datadoghq.com, set the DD_SITE environment variable to your Datadog site.

Ensure your Agent is configured to receive trace data from containers. Open its configuration file and ensure apm_config: is uncommented, and apm_non_local_traffic is uncommented and set to true.

If you have an Agent already installed on the host, ensure it is at least version 7.28. The minimum version of Datadog Agent required to use ddtrace to trace Python applications is documented in the tracing library developer docs.

Install the sample Dockerized Python application

The code sample for this tutorial is on GitHub, at github.com/Datadog/apm-tutorial-python. To get started, clone the repository:

git clone https://github.com/DataDog/apm-tutorial-python.git

The repository contains a multi-service Python application pre-configured to be run within Docker containers. The sample app is a basic notes app with a REST API to add and change data.

Starting and exercising the sample application

Build the application’s container by running:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml build notes_app

Start the container:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml up db notes_app

The application is ready to use when you see the following output in the terminal:

    notes | * Debug mode: on
    notes | INFO:werkzeug:WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
    notes | * Running on all addresses (0.0.0.0)
    notes | * Running on http://127.0.0.1:8080
    notes | * Running on http://192.168.32.3:8080
    notes | INFO:werkzeug:Press CTRL+C to quit
    notes | INFO:werkzeug: * Restarting with stat
    notes | WARNING:werkzeug: * Debugger is active!
    notes | INFO:werkzeug: * Debugger PIN: 143-375-699

You can also verify that it’s running by viewing the containers with the docker ps command.

Open up another terminal and send API requests to exercise the app. The notes application is a REST API that stores data in a Postgres database running in another container. Send it a few commands:

    curl -X GET 'localhost:8080/notes'
    {}

    curl -X POST 'localhost:8080/notes?desc=hello'
    (1, hello)

    curl -X GET 'localhost:8080/notes?id=1'
    (1, hello)

    curl -X GET 'localhost:8080/notes'
    {"1", "hello"}

    curl -X PUT 'localhost:8080/notes?id=1&desc=UpdatedNote'
    (1, UpdatedNote)

    curl -X DELETE 'localhost:8080/notes?id=1'
    Deleted
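If you prefer to script these calls, the same sequence can be sketched in Python with only the standard library. This is a minimal sketch, not part of the sample repository: `BASE_URL`, `build_request`, `CALLS`, and `exercise` are illustrative names, and the URL assumes the `8080:8080` port mapping used by the tutorial's compose file.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Assumption: the notes container is published on localhost:8080.
BASE_URL = "http://localhost:8080/notes"


def build_request(method, **params):
    """Return a urllib Request for the notes endpoint with the given query parameters."""
    url = BASE_URL + ("?" + urlencode(params) if params else "")
    return Request(url, method=method)


# Same order as the curl commands: list, create, read, update, delete.
CALLS = [
    build_request("GET"),
    build_request("POST", desc="hello"),
    build_request("GET", id=1),
    build_request("PUT", id=1, desc="UpdatedNote"),
    build_request("DELETE", id=1),
]


def exercise(calls=CALLS, timeout=5):
    """Send each request in turn and print the method, URL, and response body."""
    for req in calls:
        with urlopen(req, timeout=timeout) as resp:
            print(req.get_method(), req.full_url, "->", resp.read().decode())
```

Call `exercise()` from a Python shell once the containers are up. Building the requests is kept separate from sending them so that the URLs can be inspected without a running server.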

Stop the application

After you’ve seen the application running, stop it so that you can enable tracing on it.

Stop the containers:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml down

Remove the containers:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml rm

Enable tracing

Now that you have a working Python application, configure it to enable tracing.

Add the Python tracing package to your project. Open the file apm-tutorial-python/requirements.txt, and add ddtrace to the list if it is not already there:

    flask==2.2.2
    psycopg2-binary==2.9.3
    requests==2.28.1
    ddtrace

Within the notes application Dockerfile, docker/host-and-containers/exercise/Dockerfile.notes, change the CMD line that starts the application to use the ddtrace package:

    # Run the application with Datadog
    CMD ["ddtrace-run", "python", "-m", "notes_app.app"]

This automatically instruments the application with Datadog services.

Apply Unified Service Tags, which identify traced services across different versions and deployment environments so that they can be correlated within Datadog and used to search and filter. The three environment variables used for Unified Service Tagging are DD_SERVICE, DD_ENV, and DD_VERSION. Add the following environment variables in the Dockerfile:

    ENV DD_SERVICE="notes"
    ENV DD_ENV="dev"
    ENV DD_VERSION="0.1.0"

Add Docker labels that correspond to the Unified Service Tags. This also lets you collect Docker metrics once your application is running.

    LABEL com.datadoghq.tags.service="notes"
    LABEL com.datadoghq.tags.env="dev"
    LABEL com.datadoghq.tags.version="0.1.0"

To check that you’ve set things up correctly, compare your Dockerfile with the one provided in the sample repository’s solution file, docker/host-and-containers/solution/Dockerfile.notes.
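The pairing between the three environment variables and their Docker labels can be sketched in Python. This is a hedged illustration only: `LABEL_FOR`, `missing_service_tags`, and `docker_labels` are hypothetical helper names, not part of ddtrace or the sample repository.

```python
import os

# Environment variable -> Docker label, as used in the Dockerfile for this tutorial.
LABEL_FOR = {
    "DD_SERVICE": "com.datadoghq.tags.service",
    "DD_ENV": "com.datadoghq.tags.env",
    "DD_VERSION": "com.datadoghq.tags.version",
}


def missing_service_tags(environ=os.environ):
    """Return the tagging variables that are unset or empty."""
    return [name for name in LABEL_FOR if not environ.get(name)]


def docker_labels(environ=os.environ):
    """Return the Docker labels that mirror the tagging variables that are set."""
    return {LABEL_FOR[name]: environ[name] for name in LABEL_FOR if environ.get(name)}
```

Running `missing_service_tags()` inside the container is a quick way to confirm that the `ENV` lines took effect. An empty list means all three variables are present.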

Configure the container to send traces to the Agent

Open the compose file for the containers, docker/host-and-containers/exercise/docker-compose.yaml.

In the notes_app container section, add the environment variable DD_AGENT_HOST and specify the hostname of the machine where the Agent runs:

    environment:
      - DD_AGENT_HOST=host.docker.internal

On Linux: Also add an extra_hosts entry to the compose file to allow communication on Docker’s internal network. The notes_app section of your compose file should look something like this:

    notes_app:
      container_name: notes
      restart: always
      build:
        context: ../../..
        dockerfile: docker/host-and-containers/exercise/Dockerfile.notes
      ports:
        - "8080:8080"
      depends_on:
        - db
      extra_hosts:                             # Linux only configuration
        - "host.docker.internal:host-gateway"  # Linux only configuration
      environment:
        - DB_HOST=test_postgres                 # the Postgres container
        - CALENDAR_HOST=calendar                # the calendar container
        - DD_AGENT_HOST=host.docker.internal    # the Agent running on the local machine using docker network

To check that you’ve set things up correctly, compare your docker-compose.yaml file with the one provided in the sample repository’s solution file, docker/host-and-containers/solution/docker-compose.yaml.
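Once the containers are running, one way to confirm that the application container can reach the Agent is a plain TCP check. This is a minimal sketch under two assumptions: the Agent listens for traces on its default port, 8126, and `agent_reachable` is an illustrative helper rather than a ddtrace function.

```python
import os
import socket


def agent_reachable(host=None, port=8126, timeout=2.0):
    """Return True if a TCP connection to the Agent's trace port succeeds."""
    # Fall back to DD_AGENT_HOST, the variable set in the compose file.
    host = host or os.environ.get("DD_AGENT_HOST", "localhost")
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hostnames.
        return False
```

A `False` result from inside the container usually points to a missing `extra_hosts` entry on Linux, or to `apm_non_local_traffic` not being enabled in the Agent configuration.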

Start the Agent

Start the Agent service on the host. The command depends on the operating system, for example:

- MacOS

launchctl start com.datadoghq.agent- Linux

sudo service datadog-agent start

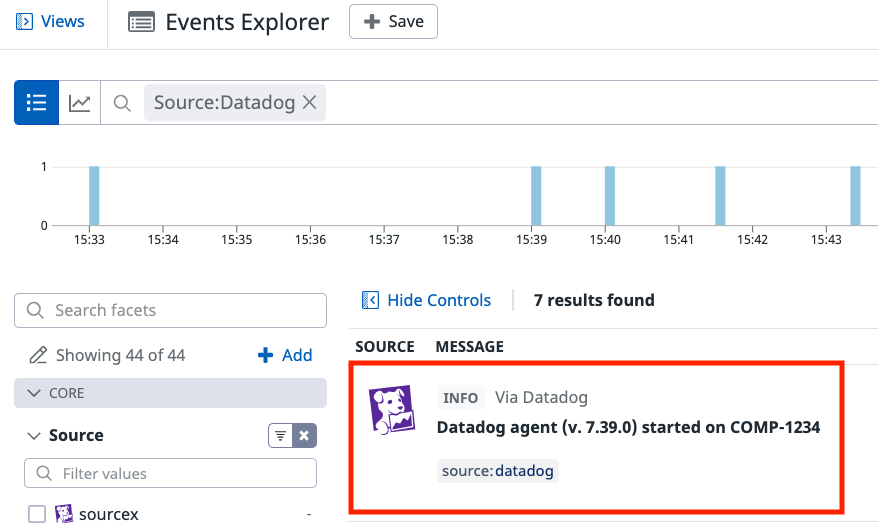

Verify that the Agent is running and sending data to Datadog by going to Events > Explorer, optionally filtering by the Datadog Source facet, and looking for an event that confirms the Agent installation on the host:

If after a few minutes you don't see your host in Datadog (under Infrastructure > Host map), ensure you used the correct API key for your organization, available at Organization Settings > API Keys.

Launch the containers to see automatic tracing

Now that the Tracing Library is installed and the Agent is running, restart your application to start receiving traces. Run the following commands:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml build notes_app

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml up db notes_app

With the application running, send some curl requests to it:

curl -X POST 'localhost:8080/notes?desc=hello'(1, hello)curl -X GET 'localhost:8080/notes?id=1'(1, hello)curl -X PUT 'localhost:8080/notes?id=1&desc=UpdatedNote'(1, UpdatedNote)curl -X DELETE 'localhost:8080/notes?id=1'Deleted

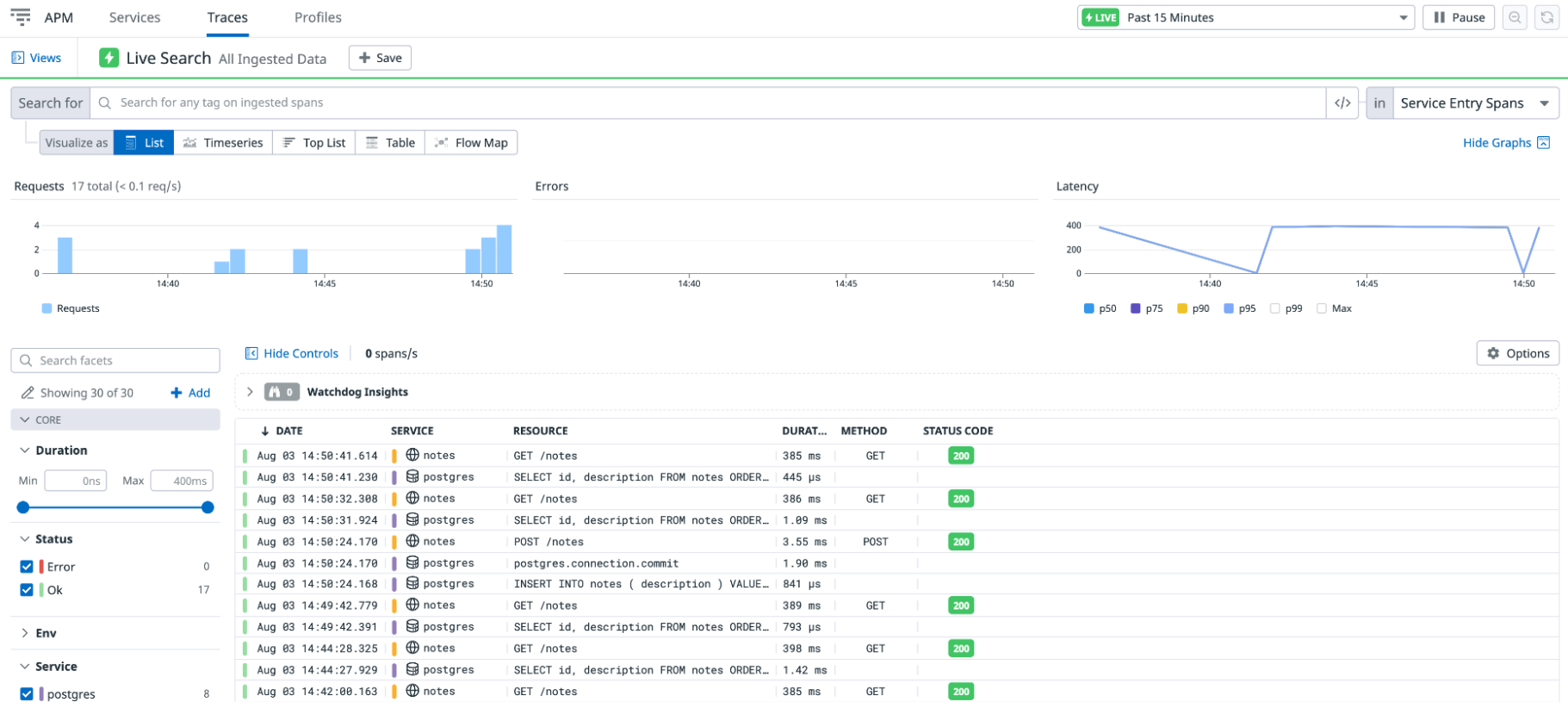

Wait a few moments, and go to APM > Traces in Datadog, where you can see a list of traces corresponding to your API calls:

If you don’t see traces after several minutes, clear any filter in the Traces Search field (sometimes it filters on an environment variable such as ENV that you aren’t using).

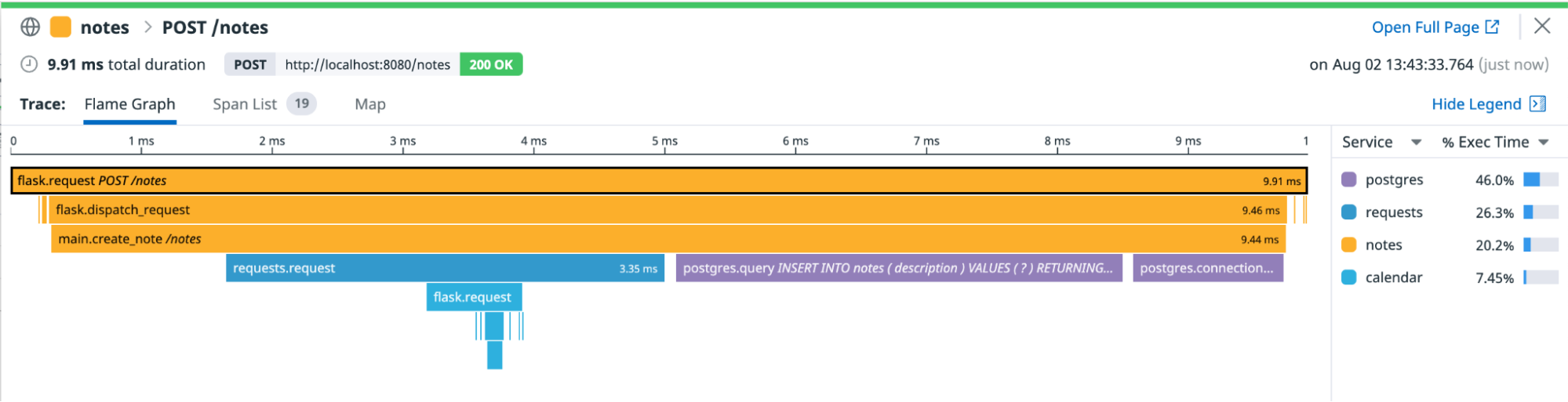

Examine a trace

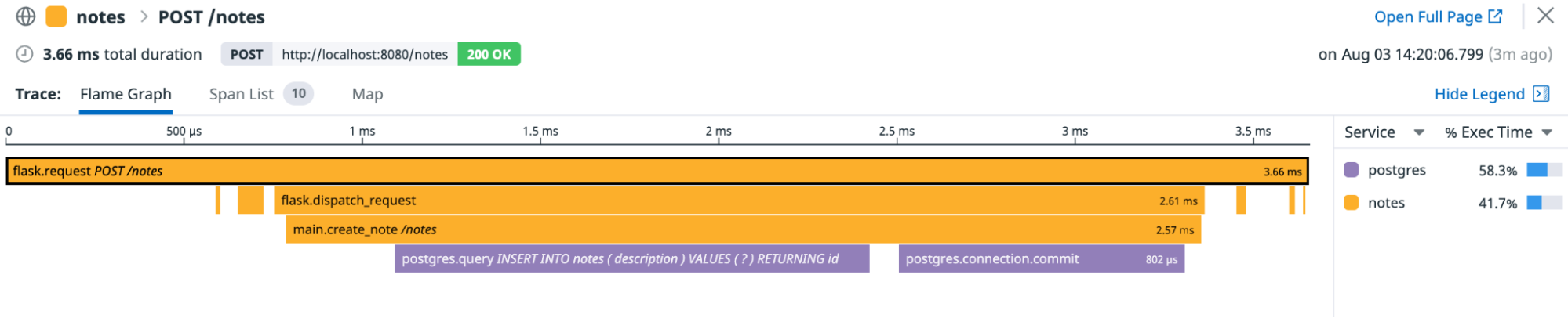

On the Traces page, click on a POST /notes trace to see a flame graph that shows how long each span took and what other spans occurred before a span completed. The bar at the top of the graph is the span you selected on the previous screen (in this case, the initial entry point into the notes application).

The width of a bar indicates how long it took to complete. A bar at a lower depth represents a span that completes during the lifetime of a bar at a higher depth.
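The relationship between bar width, depth, and span lifetime can be made concrete with a small model. The sketch below is illustrative only: the `Span` class, the span names, and the timings are hypothetical and are not the data format Datadog uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: int
    parent_id: Optional[int]  # None marks the entry-point span
    name: str
    start_ms: float
    duration_ms: float


def depth(span, by_id):
    """Count how many ancestors a span has; the entry point has depth 0."""
    levels = 0
    while span.parent_id is not None:
        span = by_id[span.parent_id]
        levels += 1
    return levels


def contained(child, parent):
    """Return True if the child starts and ends within the parent's lifetime."""
    child_end = child.start_ms + child.duration_ms
    parent_end = parent.start_ms + parent.duration_ms
    return parent.start_ms <= child.start_ms and child_end <= parent_end


def render(spans):
    """Return one indented line per span, ordered by start time."""
    by_id = {s.span_id: s for s in spans}
    ordered = sorted(spans, key=lambda s: (s.start_ms, depth(s, by_id)))
    return [f"{'  ' * depth(s, by_id)}{s.name} ({s.duration_ms:g} ms)" for s in ordered]


# Hypothetical timings for a single POST request.
SPANS = [
    Span(1, None, "flask.request", 0, 40),
    Span(2, 1, "create_note", 5, 25),
    Span(3, 2, "postgres.query", 10, 5),
]
```

Here `render(SPANS)` indents each span under its parent, and `contained` holds for every child and parent pair, which is the property the flame graph shows visually.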

The flame graph for a POST trace looks something like this:

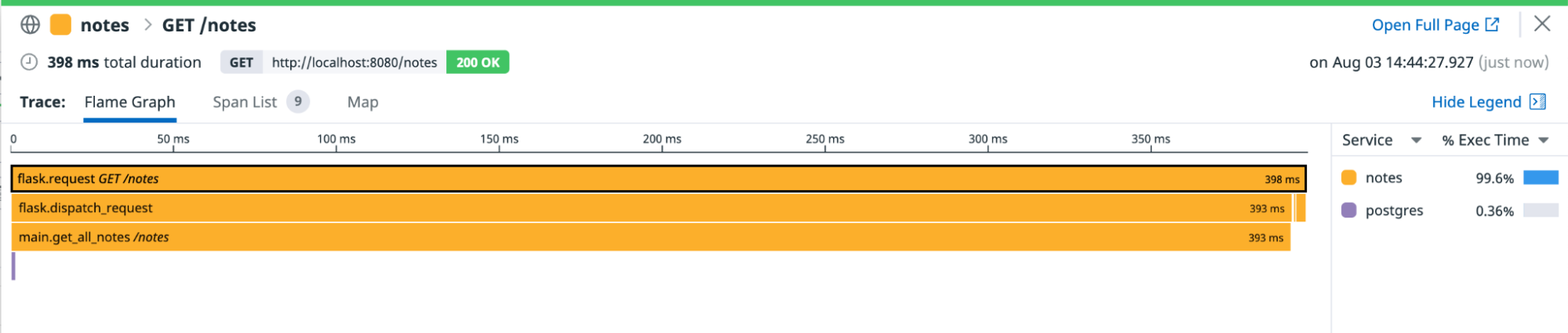

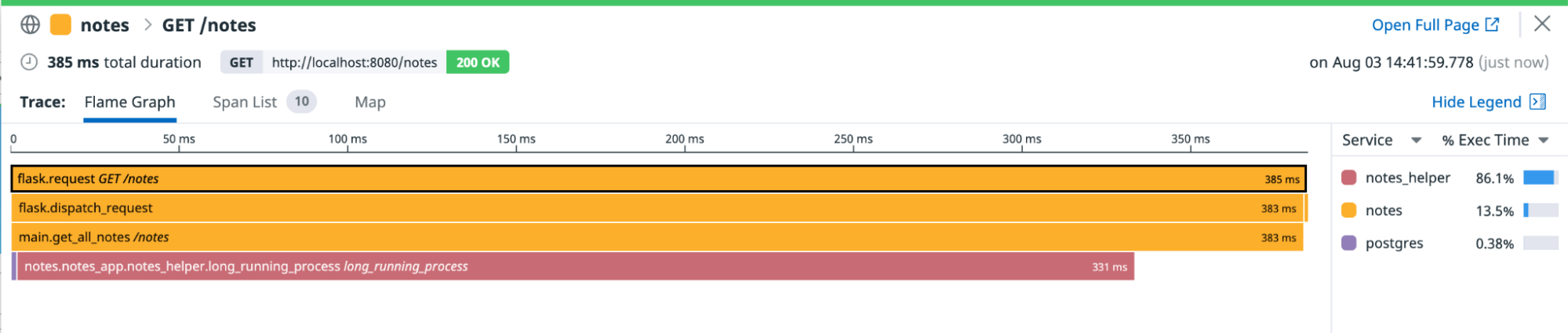

A GET /notes trace looks something like this:

Add custom instrumentation to the Python application

Automatic instrumentation is convenient, but sometimes you want more fine-grained spans. Datadog’s Python DD Trace API allows you to specify spans within your code using annotations or code.

The following steps walk you through adding annotations to the code to trace some sample methods.

Open notes_app/notes_helper.py.

Add the following import:

from ddtrace import tracer

Inside the NotesHelper class, add a tracer wrapper called notes_helper to better see how the notes_helper.long_running_process method works:

    class NotesHelper:

        @tracer.wrap(service="notes_helper")
        def long_running_process(self):
            time.sleep(.3)
            logging.info("Hello from the long running process")
            self.__private_method_1()

Now, the tracer automatically labels the resource with the function name it is wrapped around, in this case, long_running_process.

Rebuild the containers by running:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml build notes_app

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml up db notes_app

Resend some HTTP requests, specifically some GET requests.

On the Trace Explorer, click on one of the new GET requests, and see a flame graph like this:

Note the higher level of detail in the stack trace now that the get_notes function has custom tracing.

For more information, read Custom Instrumentation.
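The decorator also accepts explicit `name` and `resource` arguments when you want to control how the span is labeled. The following is a minimal sketch, not code from the sample app: `ReportBuilder` and the span names are hypothetical, and the `_NoopTracer` stand-in exists only so the example runs where ddtrace is not installed.

```python
import logging
import time

try:
    from ddtrace import tracer  # the real tracer when ddtrace is installed
except ImportError:
    # Hypothetical stand-in so the sketch runs without ddtrace; it records nothing.
    class _NoopTracer:
        def wrap(self, name=None, service=None, resource=None):
            def decorator(func):
                return func
            return decorator

    tracer = _NoopTracer()


class ReportBuilder:  # hypothetical class, not part of the sample app
    @tracer.wrap(name="report.build", service="notes_helper", resource="build_report")
    def build_report(self, rows):
        """Do some slow work inside its own span."""
        time.sleep(0.01)
        logging.info("Building report for %d rows", len(rows))
        return {"count": len(rows)}
```

With ddtrace installed and the Agent reachable, each call to `build_report` should appear as its own span under the `notes_helper` service.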

Add a second application to see distributed traces

Tracing a single application is a great start, but the real value in tracing is seeing how requests flow through your services. This is called distributed tracing.

The sample project includes a second application called calendar_app that returns a random date whenever it is invoked. The POST endpoint in the Notes application has a second query parameter named add_date. When it is set to y, Notes calls the calendar application to get a date to add to the note.
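Distributed tracing works because the calling service passes its trace context to the service it calls, typically in HTTP headers. The sketch below illustrates the idea using the Datadog propagation header names `x-datadog-trace-id` and `x-datadog-parent-id`. It is a simplified illustration, not ddtrace's implementation: `inject` and `extract` are hypothetical helpers, and in this tutorial `ddtrace-run` handles propagation for you.

```python
TRACE_ID_HEADER = "x-datadog-trace-id"
PARENT_ID_HEADER = "x-datadog-parent-id"


def inject(trace_id, span_id, headers=None):
    """Return a copy of the outgoing headers with the trace context added."""
    headers = dict(headers or {})
    headers[TRACE_ID_HEADER] = str(trace_id)
    headers[PARENT_ID_HEADER] = str(span_id)
    return headers


def extract(headers):
    """Return (trace_id, parent_span_id) from incoming headers, or None if absent."""
    lowered = {key.lower(): value for key, value in headers.items()}
    if TRACE_ID_HEADER not in lowered or PARENT_ID_HEADER not in lowered:
        return None
    return int(lowered[TRACE_ID_HEADER]), int(lowered[PARENT_ID_HEADER])
```

In this model, the notes service would call `inject` before requesting a date, and the calendar service would call `extract` so that its spans join the same trace instead of starting a new one.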

Configure the calendar app for tracing by adding ddtrace-run to the startup command in the Dockerfile, as you previously did for the notes app. Open docker/host-and-containers/exercise/Dockerfile.calendar and update the CMD line like this:

CMD ["ddtrace-run", "python", "-m", "calendar_app.app"]

Apply Unified Service Tags, as you did for the notes app. Add the following environment variables in the Dockerfile.calendar file:

    ENV DD_SERVICE="calendar"
    ENV DD_ENV="dev"
    ENV DD_VERSION="0.1.0"

Again, add Docker labels that correspond to the Unified Service Tags, so that you also get Docker metrics once your application runs.

    LABEL com.datadoghq.tags.service="calendar"
    LABEL com.datadoghq.tags.env="dev"
    LABEL com.datadoghq.tags.version="0.1.0"

As you did earlier for the notes app, add the Agent hostname, DD_AGENT_HOST, to the calendar application container so that it sends traces to the correct location. Open docker/host-and-containers/exercise/docker-compose.yaml and add the following lines to the calendar_app section:

    environment:
      - DD_AGENT_HOST=host.docker.internal

And, if you’re using Linux, also add the extra_hosts entry:

    extra_hosts:
      - "host.docker.internal:host-gateway"

To check that you’ve set things up correctly, compare your setup with the Dockerfile and docker-compose.yaml files provided in the sample repository’s docker/host-and-containers/solution directory.

Build the multi-service application by restarting the containers. First, stop all running containers:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml down

Then run the following commands to start them:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml build

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml up

Send a POST request with the add_date parameter:

    curl -X POST 'localhost:8080/notes?desc=hello_again&add_date=y'
    (2, hello_again with date 2022-11-06)

In the Trace Explorer, click this latest trace to see a distributed trace between the two services:

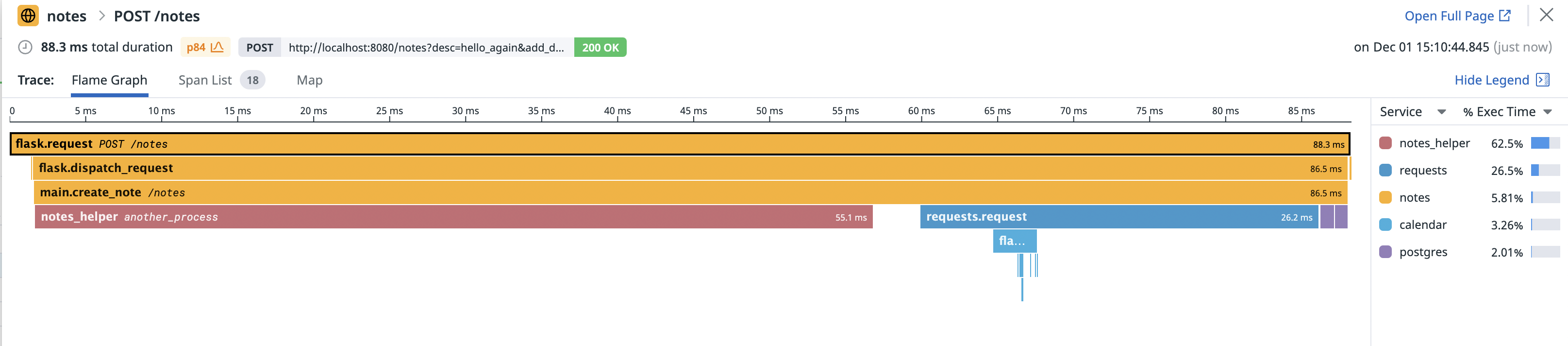

Add more custom instrumentation

You can add custom instrumentation by using code. Suppose you want to further instrument the calendar service to better see the trace:

Open notes_app/notes_logic.py.

Add the following import:

from ddtrace import tracer

Inside the try block, at about line 28, add the following with statement:

with tracer.trace(name="notes_helper", service="notes_helper", resource="another_process") as span:

Resulting in this:

    def create_note(self, desc, add_date=None):
        if (add_date):
            if (add_date.lower() == "y"):
                try:
                    with tracer.trace(name="notes_helper", service="notes_helper", resource="another_process") as span:
                        self.nh.another_process()
                    note_date = requests.get(f"https://{CALENDAR_HOST}/calendar")
                    note_date = note_date.text
                    desc = desc + " with date " + note_date
                    print(desc)
                except Exception as e:
                    print(e)
                    raise IOError("Cannot reach calendar service.")
        note = Note(description=desc, id=None)
        return self.db.create_note(note)

Rebuild the containers:

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml build notes_app

docker-compose -f docker/host-and-containers/exercise/docker-compose.yaml up

Send some more HTTP requests, specifically POST requests, with the add_date argument.

In the Trace Explorer, click into one of these new POST traces to see a custom trace across multiple services:

Note the new span labeled notes_helper.another_process.
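A span opened with `tracer.trace` can also carry tags that describe the work it wraps. The following is a minimal sketch, not code from the sample app: `add_date_suffix`, the span name, and the `note.date` tag are hypothetical, and the no-op classes exist only so the example runs where ddtrace is not installed.

```python
from contextlib import contextmanager

try:
    from ddtrace import tracer  # the real tracer when ddtrace is installed
except ImportError:
    # Hypothetical stand-ins so the sketch runs without ddtrace; they record nothing.
    class _NoopSpan:
        def set_tag(self, key, value):
            pass

    class _NoopTracer:
        @contextmanager
        def trace(self, name, service=None, resource=None):
            yield _NoopSpan()

    tracer = _NoopTracer()


def add_date_suffix(desc, fetch_date):
    """Append a date to a note description inside a manually created span."""
    with tracer.trace("notes.add_date", service="notes_helper", resource="add_date_suffix") as span:
        note_date = fetch_date()  # for example, a call to the calendar service
        span.set_tag("note.date", note_date)
        return f"{desc} with date {note_date}"
```

Passing `fetch_date` as a callable keeps the sketch independent of the calendar service. In the sample app, that role is played by the `requests.get` call.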

If you’re not receiving traces as expected, set up debug mode in the ddtrace Python package. Read Enable debug mode to find out more.
