
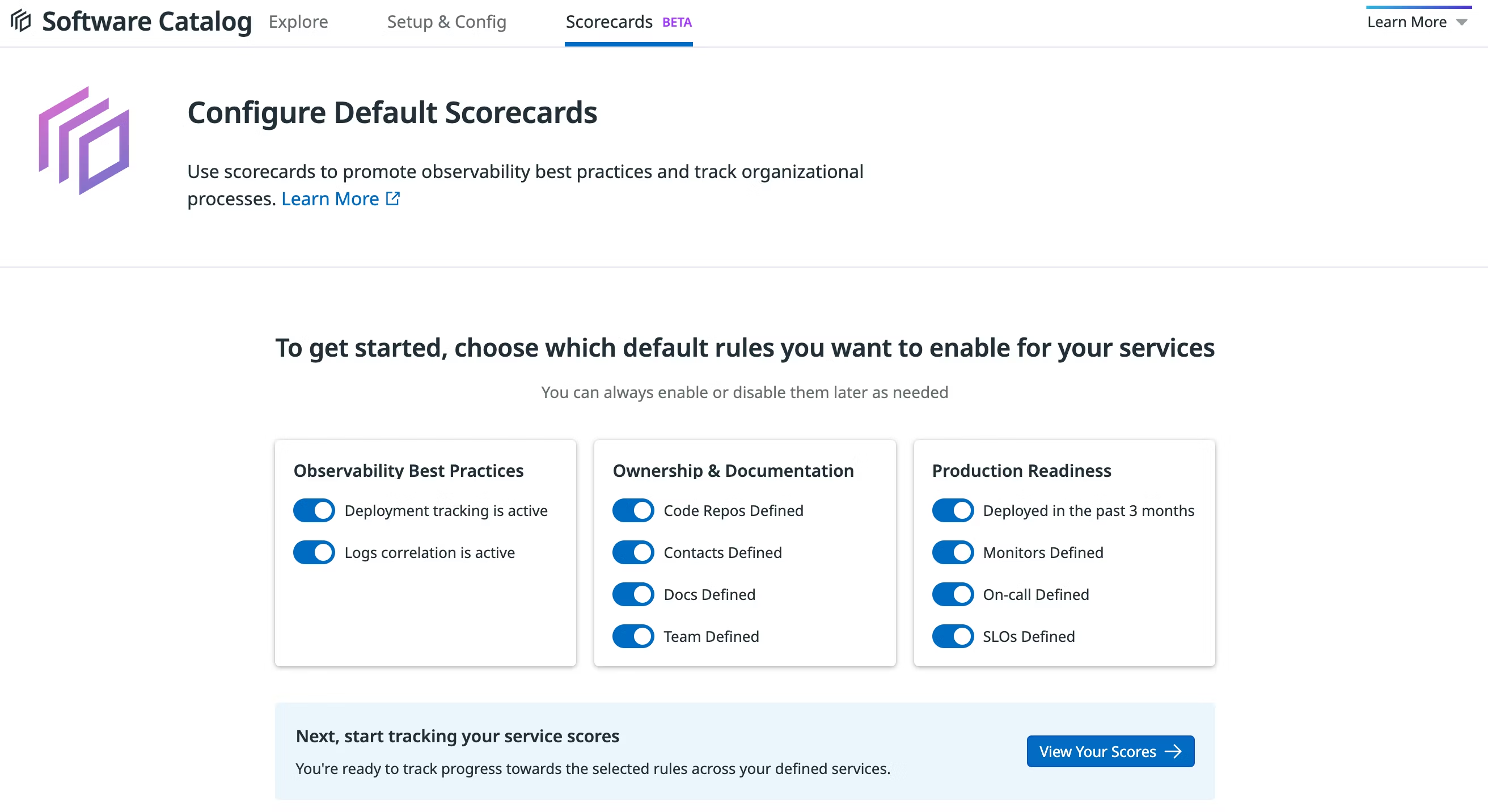

Scorecard Configuration

Scorecards are in Preview.

Datadog provides the following out-of-the-box scorecards based on a default set of rules: Production Readiness, Observability Best Practices, and Ownership & Documentation.

Set up default scorecards

To select which of the out-of-the-box rules are evaluated for each of the default scorecards:

- Open the Scorecards page in Software Catalog.

- Enable or disable rules to customize how the scores are calculated.

- Click View your scores to start tracking your progress toward the selected rules across your defined services.

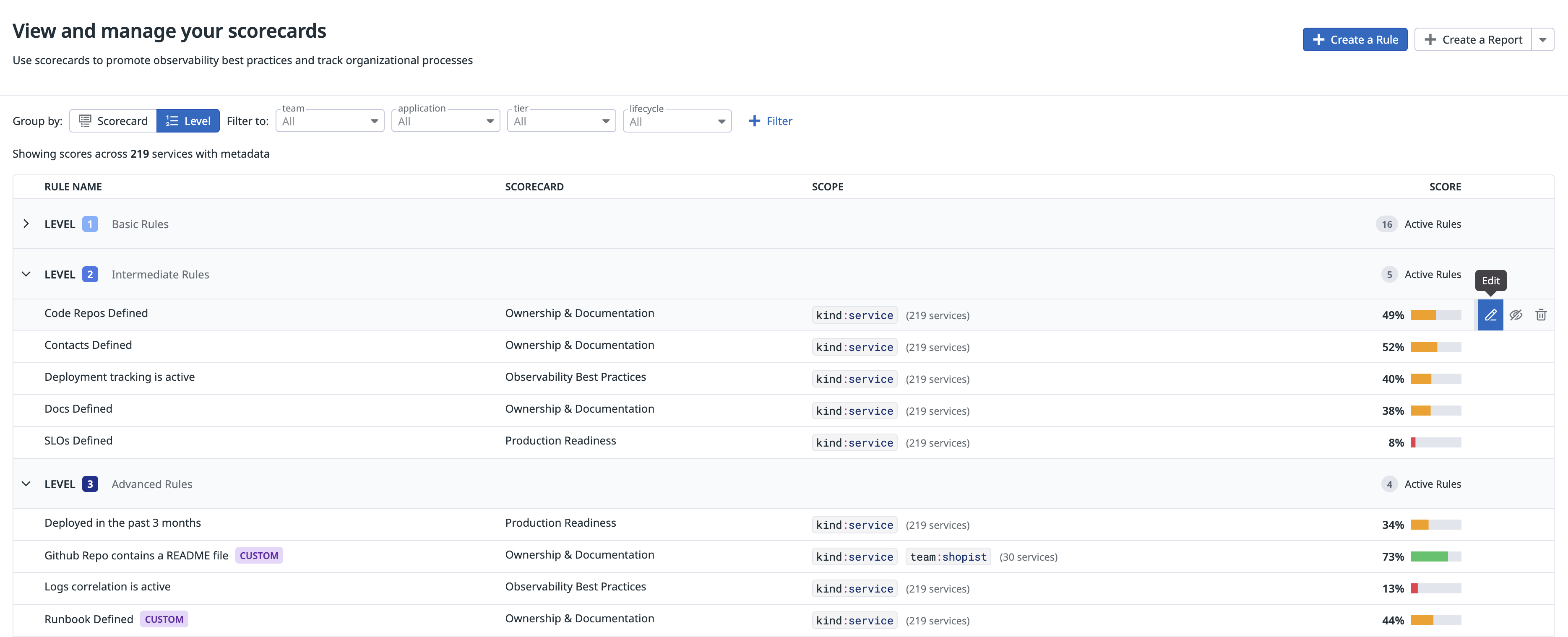

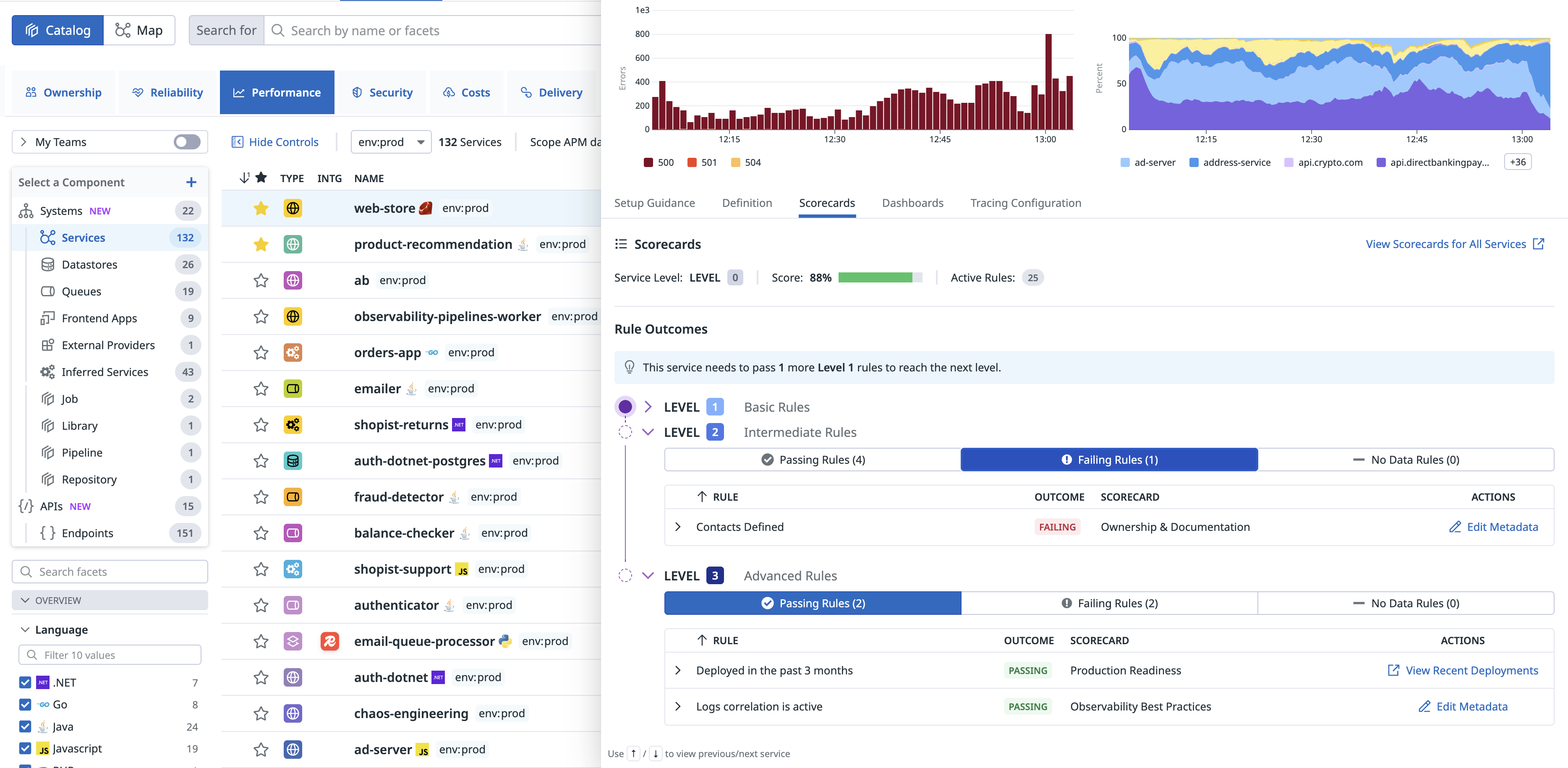

How services are evaluated

After the default scorecards are set up, the Scorecards page in the Software Catalog shows the list of out-of-the-box rules and the percentage of services passing each rule. Click a rule to see details about passing and failing services and the teams that own them.

Production Readiness

The Production Readiness score for all services (unless otherwise indicated) is based on these rules:

- Have any SLOs defined

  - Service Level Objectives (SLOs) provide a framework for defining clear targets around application performance, which helps you provide a consistent customer experience, balance feature development with platform stability, and improve communication with internal and external users.

- Have any monitors defined

  - Monitors reduce downtime by helping your team quickly react to issues in your environment. Review monitor templates.

- Specified on-call

  - Improve the on-call experience for everyone by establishing clear ownership of your services. This gives your on-call engineers the correct point of contact during incidents, reducing the time it takes to resolve them.

- Last deployment occurred within the last 3 months

  - Applies only to services monitored by APM or Universal Service Monitoring (USM). Agile development practices let you quickly address user feedback and pivot to developing the most important functionality for your end users.
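To make the pass/fail semantics of these rules concrete, here is a minimal Python sketch. It is not how Datadog evaluates rules: `ServiceInfo` and its fields are hypothetical stand-ins for catalog and telemetry data, "the last 3 months" is approximated as 90 days (an assumption), and the deployment rule returns `None` (not applicable) for services that APM or USM does not monitor.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical, simplified view of a service; field names are illustrative,
# not Datadog's entity schema.
@dataclass
class ServiceInfo:
    name: str
    slo_count: int = 0
    monitor_count: int = 0
    on_call: Optional[str] = None
    last_deploy: Optional[datetime] = None
    apm_or_usm: bool = False

# Assumption: "the last 3 months" approximated as 90 days.
DEPLOY_WINDOW = timedelta(days=90)

def production_readiness(svc: ServiceInfo, now: datetime) -> dict[str, Optional[bool]]:
    """Return True (pass), False (fail), or None (not applicable) per rule."""
    results: dict[str, Optional[bool]] = {
        "Have any SLOs defined": svc.slo_count > 0,
        "Have any monitors defined": svc.monitor_count > 0,
        "Specified on-call": bool(svc.on_call),
    }
    deploy_rule = "Last deployment occurred within the last 3 months"
    if svc.apm_or_usm:
        results[deploy_rule] = (
            svc.last_deploy is not None and now - svc.last_deploy <= DEPLOY_WINDOW
        )
    else:
        # The deployment rule only covers services monitored by APM or USM.
        results[deploy_rule] = None
    return results

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
checkout = ServiceInfo("checkout", slo_count=2, on_call="payments-oncall",
                       last_deploy=now - timedelta(days=30), apm_or_usm=True)
print(production_readiness(checkout, now))
```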

Observability Best Practices

The Observability Best Practices score is based on the following rules:

- Deployment tracking is active

  - Applies only to services monitored by APM or USM. Ensure smooth rollouts by implementing a version tag with Unified Service Tagging. As you roll out new versions of your functionality, Datadog captures and alerts on differences between versions in error rates, request counts, and more. This helps you decide when to roll back to a previous version to protect the end-user experience.

- Logs correlation is active

  - Applies to APM services and is evaluated based on logs detected in the past hour. Correlating APM and Logs speeds up troubleshooting, saving you time during incidents and outages.

Ownership & Documentation

The Ownership & Documentation score is based on the following rules:

- Team defined

  - Defining a Team makes it easier for your on-call staff to know which team to escalate to when a service they are not familiar with is the root cause of an issue.

- Contacts defined

  - Defining contacts reduces the time it takes for your on-call staff to escalate to the owner of another service, helping you recover your services faster from outages and incidents.

- Code repos defined

  - Identifying code repositories lets your engineers perform an initial investigation into an issue without having to contact the service’s owning team. This improves collaboration and deepens your engineers' understanding of integration points.

- Any docs defined

  - In the Other Links section of the Software Catalog, specify links to resources such as runbooks, dashboards, or other internal documentation. This helps with initial investigations and provides quick access to emergency remediation runbooks during outages and incidents.

How scores are calculated

Each out-of-the-box scorecard (Production Readiness, Observability Best Practices, Ownership & Documentation) is made up of a default set of rules. These reflect pass-fail conditions and are automatically evaluated once per day. A service’s score against custom rules is based on outcomes sent using the Scorecards API. To exclude a particular custom rule from a service’s score calculation, set its outcome to skip in the Scorecards API.

Individual rules may have restrictions based on data availability. For example, deployment-related rules rely on the availability of version tags through APM Unified Service Tagging.

Each rule shows the percentage of services that pass it. Each scorecard also has an overall score: the percentage of passing service-rule outcomes across all of its rules, not the percentage of services that pass every rule. Skipped and disabled rules are not included in these calculations.
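The sketch below illustrates the difference between per-rule scores and the overall scorecard score, under one reading of the description above: the overall score is the share of passing service-rule outcomes across all rules. Outcomes are modeled as hypothetical `(rule, service, state)` tuples with states `pass`, `fail`, or `skip`; this is not the Scorecards API payload format, and `rule_scores` and `scorecard_score` are illustrative helpers.

```python
from collections import defaultdict

def _counted(outcomes, disabled):
    # Skipped outcomes and disabled rules are left out of every calculation.
    for rule, service, state in outcomes:
        if rule in disabled or state == "skip":
            continue
        yield rule, service, state

def rule_scores(outcomes, disabled=frozenset()):
    """Per-rule score: percentage of counted services passing that rule."""
    passed = defaultdict(int)
    counted = defaultdict(int)
    for rule, _service, state in _counted(outcomes, disabled):
        counted[rule] += 1
        if state == "pass":
            passed[rule] += 1
    return {rule: 100 * passed[rule] / counted[rule] for rule in counted}

def scorecard_score(outcomes, disabled=frozenset()):
    """Overall score: passing outcomes divided by all counted outcomes."""
    states = [state for _rule, _service, state in _counted(outcomes, disabled)]
    if not states:
        return 0.0
    return 100 * states.count("pass") / len(states)

outcomes = [
    ("slo", "a", "pass"), ("slo", "b", "fail"),
    ("monitors", "a", "pass"), ("monitors", "b", "pass"),
    ("docs", "a", "skip"),  # skipped: excluded from every score
]
print(rule_scores(outcomes))      # {'slo': 50.0, 'monitors': 100.0}
print(scorecard_score(outcomes))  # 75.0
```

Here only service `a` passes every counted rule (50% of services), yet the overall score is 75% because three of the four counted outcomes pass.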

Group rules into levels

You can group rules into levels to categorize them by their criticality. There are three predefined levels:

- Level 1 - Basic rules: These rules reflect the baseline expectations for every production service, such as having an on-call owner, monitoring in place, or a team defined.

- Level 2 - Intermediate rules: These rules reflect strong engineering practices that should be adopted across most services. Examples might include defining SLOs or linking documentation within Software Catalog.

- Level 3 - Advanced rules: These aspirational rules represent mature engineering practices. These may not apply to every service but are valuable goals for teams.

You can set levels for any out-of-the-box or custom rule. Rules without a level are automatically placed in Level 3. You can change this assignment by editing the rule.

You can group rules by scorecard or level in the Scorecards UI. In the Software Catalog, you can track how a specific service is progressing through each level. Each service starts at Level 0 and progresses one level at a time: it reaches Level 1 once it passes all Level 1 rules, Level 2 once it passes all Level 2 rules, and so on up to Level 3.
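A minimal sketch of the level progression described above, assuming a service must clear every level in order and that a rule without a level counts as Level 3. As a further assumption, a level that contains no rules is treated as passed. Rule names and the `service_level` helper are hypothetical.

```python
from typing import Optional

def service_level(rule_levels: dict[str, Optional[int]], passing: set[str]) -> int:
    """Return the highest level (0-3) the service has reached."""
    by_level: dict[int, set[str]] = {1: set(), 2: set(), 3: set()}
    for rule, level in rule_levels.items():
        # Rules without a level are placed in Level 3.
        by_level[3 if level is None else level].add(rule)
    reached = 0
    for lvl in (1, 2, 3):
        # Assumption: an empty level counts as passed.
        if by_level[lvl] <= passing:
            reached = lvl
        else:
            break  # levels are cleared in order, so stop at the first failure
    return reached

rules = {"on-call": 1, "monitors": 1, "slos": 2, "docs": None}
print(service_level(rules, {"on-call", "monitors", "docs"}))  # 1: "slos" (Level 2) fails
```

Because progression stops at the first failing level, passing a Level 3 rule does not help a service that still fails a Level 2 rule.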

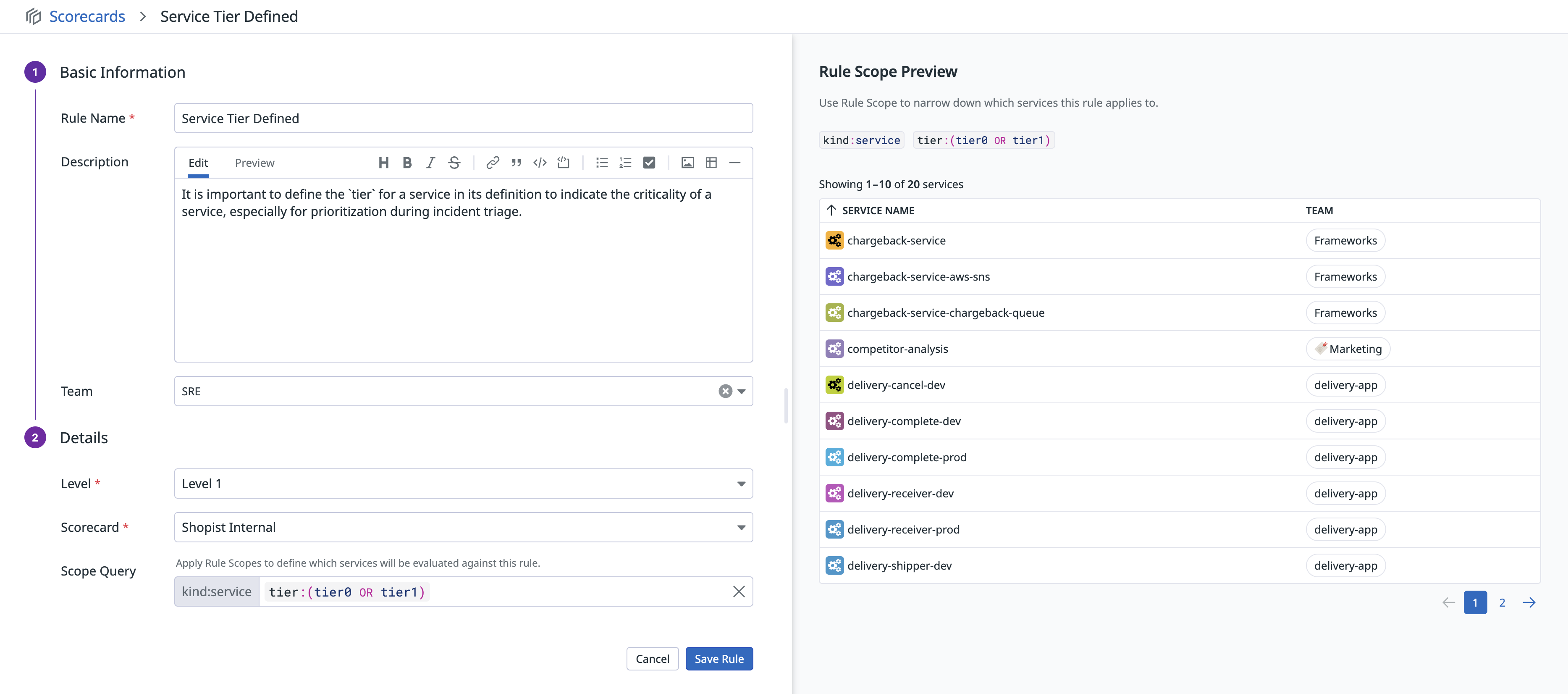

Scope scorecard rules

Scopes allow you to define which entities a rule applies to, using metadata from entity definitions in Software Catalog. Without a scope defined, a rule applies to all defined services in the catalog. You can scope by any field within an entity definition, including team, tier, and custom tags.

By default, a service must match all specified conditions to be evaluated against the rule. You can use OR statements to include multiple values for the same field.

You can set scopes for both out-of-the-box and custom rules. When you add a scope to a rule, any previously recorded outcomes for services that no longer match the scope are hidden from the UI and excluded from score calculations. If you later remove the scope, these outcomes reappear and are counted again.
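The matching behavior above (AND across fields, OR among values for the same field, and no scope meaning every service) can be sketched as follows. Entities are modeled as hypothetical flattened dictionaries such as `{"team": ..., "tier": ..., "tags": [...]}`, not Datadog's actual entity definition schema, and `in_scope` and `visible_outcomes` are illustrative helpers. Outcomes for out-of-scope services are filtered from view but left intact, so removing the scope makes them count again.

```python
from typing import Any, Iterable

def _values(entity: dict[str, Any], field: str) -> set:
    # Normalize a field to a set so list-valued fields such as tags also match.
    value = entity.get(field)
    if value is None:
        return set()
    if isinstance(value, (list, tuple, set)):
        return set(value)
    return {value}

def in_scope(entity: dict[str, Any], scope: dict[str, Iterable]) -> bool:
    # AND across fields; OR among the values listed for one field.
    # An empty scope matches every service.
    return all(_values(entity, field) & set(allowed) for field, allowed in scope.items())

def visible_outcomes(outcomes, entities, scope):
    """Hide, but do not delete, outcomes for services outside the scope."""
    return [o for o in outcomes if in_scope(entities[o[1]], scope)]

entities = {
    "checkout": {"team": "payments", "tier": "1", "tags": ["env:prod"]},
    "search": {"team": "discovery", "tier": "2"},
}
scope = {"team": ["payments", "discovery"], "tier": ["1"]}
outcomes = [("slo", "checkout", "pass"), ("slo", "search", "fail")]
print(visible_outcomes(outcomes, entities, scope))  # [('slo', 'checkout', 'pass')]
```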
