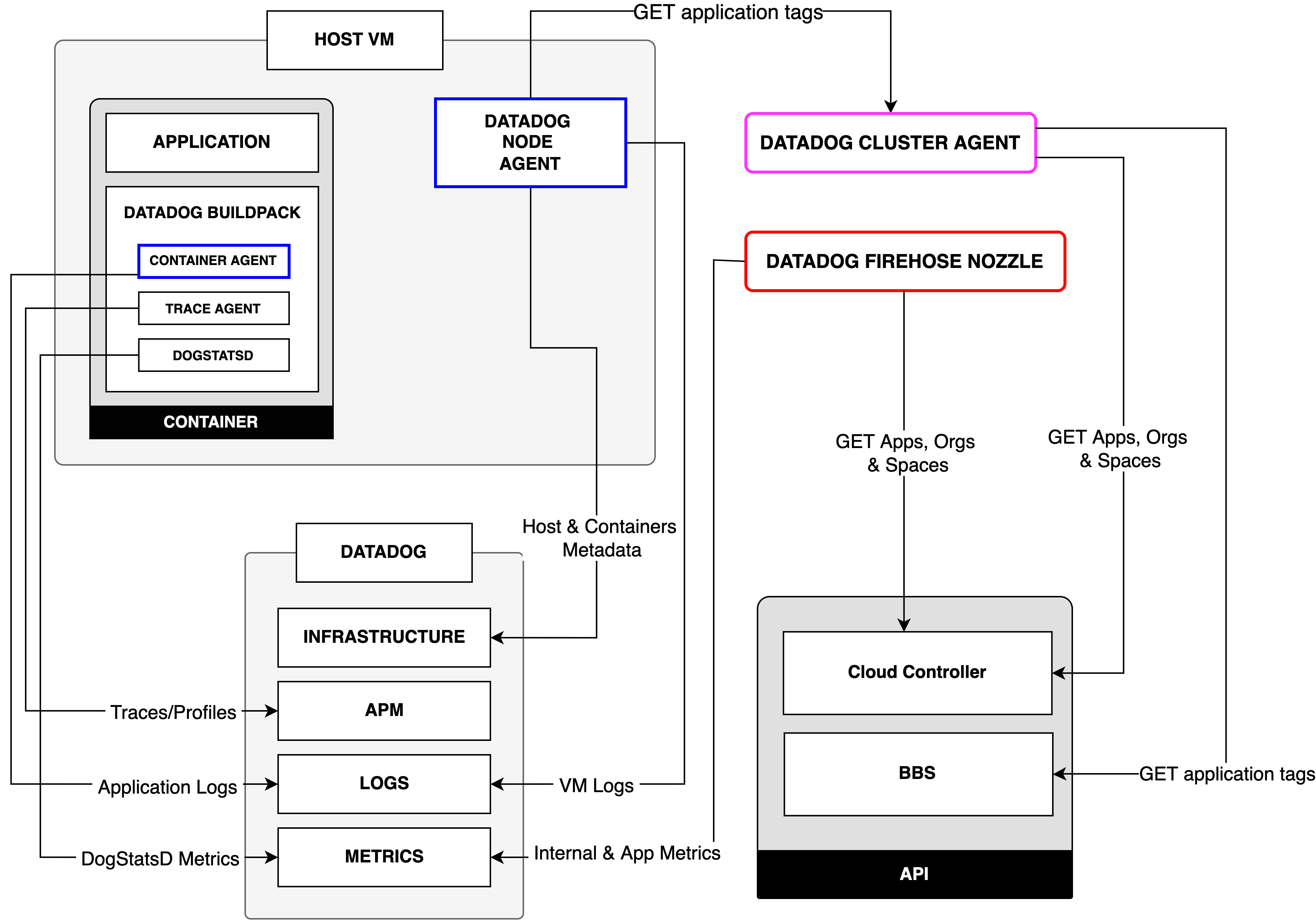

Datadog VMware Tanzu Application Service Integration Architecture


Overview

This page describes the architecture behind the Datadog VMware Tanzu Application Service integration.

The following sections provide further detail about the individual components and their interrelationships.

Datadog components for Pivotal Cloud Foundry/PAS

You can deploy and configure the components of the Datadog integration with VMware Tanzu Application Service from the Tanzu Ops Manager, with the Application Monitoring and Cluster Monitoring tiles.

- Datadog Cluster Monitoring Tile - Platform engineers use this tile to collect, visualize, and alert on metrics and logs from PCF platform components. From this tile, users can deploy the Datadog Node Agent, the Datadog Cluster Agent (DCA), and the Firehose Nozzle.

- Datadog Application Monitoring Tile - Application developers use this tile to collect custom metrics, traces, and logs from their applications. From this tile, users can deploy the Datadog Buildpack, which contains the Container Agent, the Trace Agent, and DogStatsD.

Datadog Cluster Monitoring tile components

Node Agent

The Node Agent exists on every BOSH VM and reports hosts (VMs), containers, and processes to Datadog. The Node Agent is deployed and configured from the Cluster Monitoring tile in Tanzu Ops Manager. You can also use the Node Agent to retrieve logs from supported integrations.

Tags collected

- Metadata pertaining to the underlying BOSH VMs (for example, bosh_job, bosh_name).

- Corresponding container and application tags from the Datadog Cluster Agent (DCA).

- Custom tags added from the tile during configuration.

All collected metadata appears as host VM and container tags. Host VMs monitored by the Node Agent appear on the Infrastructure List page, and the containers within each host appear on the Container Map and Live Containers pages.

Datadog Cluster Agent (DCA)

The DCA provides a streamlined, centralized approach to collecting cluster-level monitoring data. It works similarly to the DCA in a Kubernetes environment. The DCA reduces the load on the Cloud Controller API (CAPI) because it queries the CAPI on behalf of all the individual Node Agents. It relays the cluster-level metadata to the Node Agents, which use it to enrich their locally collected metrics. The Node Agents periodically poll an internal API endpoint on the DCA for cluster metadata and assign it to the corresponding containers within the VM.
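The fan-in pattern described above can be sketched in a few lines. This is only an illustration of the idea, not the DCA's actual implementation: the class `ClusterMetadataCache`, the `fetch_from_capi` callable, and the `enrich` helper are all hypothetical names, and the metadata is modeled as a simple mapping from container ID to a list of tags.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ClusterMetadataCache:
    """Hypothetical stand-in for the DCA: queries the CAPI once, serves many Node Agents."""

    fetch_from_capi: Callable[[], Dict[str, List[str]]]  # hypothetical CAPI client
    capi_calls: int = 0
    _tags_by_container: Dict[str, List[str]] = field(default_factory=dict)

    def refresh(self) -> None:
        # A single upstream query, regardless of how many Node Agents exist.
        self._tags_by_container = self.fetch_from_capi()
        self.capi_calls += 1

    def tags_for(self, container_ids: List[str]) -> Dict[str, List[str]]:
        # What a Node Agent would receive when it polls the cache.
        return {cid: self._tags_by_container.get(cid, []) for cid in container_ids}


def enrich(local_metrics: List[dict], cache: ClusterMetadataCache) -> List[dict]:
    """Attach cluster-level tags to metrics that a Node Agent collected locally."""
    tags = cache.tags_for([m["container_id"] for m in local_metrics])
    return [
        {**m, "tags": m.get("tags", []) + tags[m["container_id"]]}
        for m in local_metrics
    ]
```

In this sketch, any number of `enrich` calls (one per Node Agent poll) reads from the cache, while `capi_calls` only grows when `refresh` runs, which is the load reduction the paragraph describes.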

Metadata Collected

- Cluster-level metadata (for example, org_id, org_name, space_id, space_name).

- Labels and annotations exposed from the application metadata following the autodiscovery tags format tags.datadoghq.com/k=v.

- List of apps running on each host VM.

Autodiscovery tags are added at the CAPI level as metadata for the application. You can add custom tags through the CAPI, and the DCA picks up these tags periodically.

You can also configure the DCA to act as a cache for the Firehose Nozzle with a configuration option in the Cluster Monitoring Tile. This lets the nozzle query data from the DCA instead of the CAPI, further reducing the load on the CAPI.

The metadata collected by the DCA appears on the Containers page, where PCF containers are assigned the following tags: cloudfoundry, app_name, app_id, and bosh_deployment.
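As a rough illustration of the tags.datadoghq.com/k=v format, the sketch below turns application labels or annotations into tags. It assumes the metadata arrives as a flat key/value mapping and that each matching entry becomes a k:v tag; the function name `extract_tags` is hypothetical and this is not the DCA's own code.

```python
from typing import Dict, List

TAG_PREFIX = "tags.datadoghq.com/"


def extract_tags(metadata: Dict[str, str]) -> List[str]:
    """Turn entries such as {"tags.datadoghq.com/env": "prod"} into ["env:prod"]."""
    tags = []
    for key, value in sorted(metadata.items()):
        # Keep only keys that carry the prefix and a non-empty tag name after it.
        if key.startswith(TAG_PREFIX) and len(key) > len(TAG_PREFIX):
            tags.append(f"{key[len(TAG_PREFIX):]}:{value}")
    return tags
```

Entries without the prefix are ignored, so unrelated labels on the application do not leak into the tag set.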

Firehose Nozzle

The Datadog Firehose Nozzle consumes information from your deployment’s Loggregator (PCF’s system for aggregating deployment metrics and application logs). The nozzle collects internal nozzle metrics, application metrics, organization metrics, and logs from the Firehose, and adds the corresponding tags and application metadata it collects from the CAPI. You can configure the metadata filter from the Cluster Monitoring Tile for the Datadog Firehose Nozzle with an allow- and deny-list mechanism. Specify which metadata to add to the metrics collected from the nozzle, and view the metrics and their corresponding tags in the Metrics Summary and Metrics Explorer.
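An allow- and deny-list filter of this kind can be sketched as follows. The precedence rules here are assumptions made for the example (a missing allow list permits every key, and the deny list always wins); the nozzle's real semantics may differ, and `filter_metadata` is a hypothetical name.

```python
from typing import Dict, Iterable, Optional


def filter_metadata(
    metadata: Dict[str, str],
    allow: Optional[Iterable[str]] = None,
    deny: Iterable[str] = (),
) -> Dict[str, str]:
    """Keep only the metadata keys permitted by the allow and deny lists."""
    allowed = None if allow is None else set(allow)
    denied = set(deny)
    return {
        key: value
        for key, value in metadata.items()
        # Assumption: deny takes precedence; no allow list means "allow all".
        if key not in denied and (allowed is None or key in allowed)
    }
```

With this shape, the filtered mapping is what would be attached to each metric before it is sent.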

Metadata Collected

- Tags exposed from the application metadata following the autodiscovery tags format tags.datadoghq.com/k=v. These are the tags a user can add to the application metadata from the CAPI.

Datadog Application Monitoring tile components

Buildpack

The Datadog Buildpack installs the lightweight Datadog Container Agent and the Datadog Trace Agent for APM inside the container, alongside the application. The Agent is launched only if log collection is enabled by setting DD_LOGS_ENABLED=TRUE; it then sends application-level logs to Datadog. Otherwise, DogStatsD is launched to send metrics. When the application runs with the Datadog Buildpack, you can pass configuration options to it through environment variables. These variables can come from the application manifest (manifest.yml) or from the Cloud Foundry (CF) CLI using the cf set-env command.
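The launch decision described above amounts to a single check on the environment. The sketch below models it under two assumptions that are not stated on this page: the value is compared case-insensitively, and the returned process names are illustrative labels rather than real binary names. The function `select_process` is hypothetical.

```python
from typing import Mapping


def select_process(env: Mapping[str, str]) -> str:
    """Return which component handles metrics and logs for the application."""
    # Assumption: "TRUE", "true", and "True" are all treated as enabled.
    logs_enabled = env.get("DD_LOGS_ENABLED", "").strip().lower() == "true"
    # The Agent starts only when log collection is on; otherwise DogStatsD runs.
    return "agent" if logs_enabled else "dogstatsd"
```

In a running container, the mapping passed in would be `os.environ`, populated from manifest.yml or from cf set-env.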

Metadata Collected

- Tags extracted from the VCAP_APPLICATION environment variable (for example, application_id, name, instance_index, space_name, uris), and the CF_INSTANCE_IP environment variable for the cf_instance_ip tag.

- Tags added using the DD_TAGS environment variable.

These tags are present in the metrics, traces, and logs collected by the Datadog Agent. Depending on the data collected, view it in the Metrics Explorer or Metrics Summary, the Trace Explorer, or the Log Explorer.
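To make the tag sources above concrete, here is a minimal sketch of deriving tags from the three environment variables. VCAP_APPLICATION is a JSON document set by Cloud Foundry; everything else is an assumption for illustration: the tag keys simply reuse the JSON field names, list values such as uris produce one tag per item, and DD_TAGS is split on commas or whitespace. The function `buildpack_tags` is a hypothetical name, not the buildpack's code.

```python
import json
import re
from typing import List, Mapping

# Fields named on this page as examples of extracted tags.
VCAP_KEYS = ("application_id", "name", "instance_index", "space_name", "uris")


def buildpack_tags(env: Mapping[str, str]) -> List[str]:
    """Collect tags from VCAP_APPLICATION, CF_INSTANCE_IP, and DD_TAGS."""
    tags: List[str] = []
    vcap = json.loads(env.get("VCAP_APPLICATION", "{}"))
    for key in VCAP_KEYS:
        value = vcap.get(key)
        if value is None:
            continue
        # List-valued fields (for example, uris) yield one tag per element.
        values = value if isinstance(value, list) else [value]
        tags.extend(f"{key}:{v}" for v in values)
    if env.get("CF_INSTANCE_IP"):
        tags.append(f"cf_instance_ip:{env['CF_INSTANCE_IP']}")
    # Assumption: user tags are separated by commas and/or whitespace.
    tags.extend(t for t in re.split(r"[,\s]+", env.get("DD_TAGS", "")) if t)
    return tags
```

Calling `buildpack_tags(os.environ)` inside an application container would return the combined list under these assumptions.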

Further Reading

Additional helpful documentation, links, and articles: