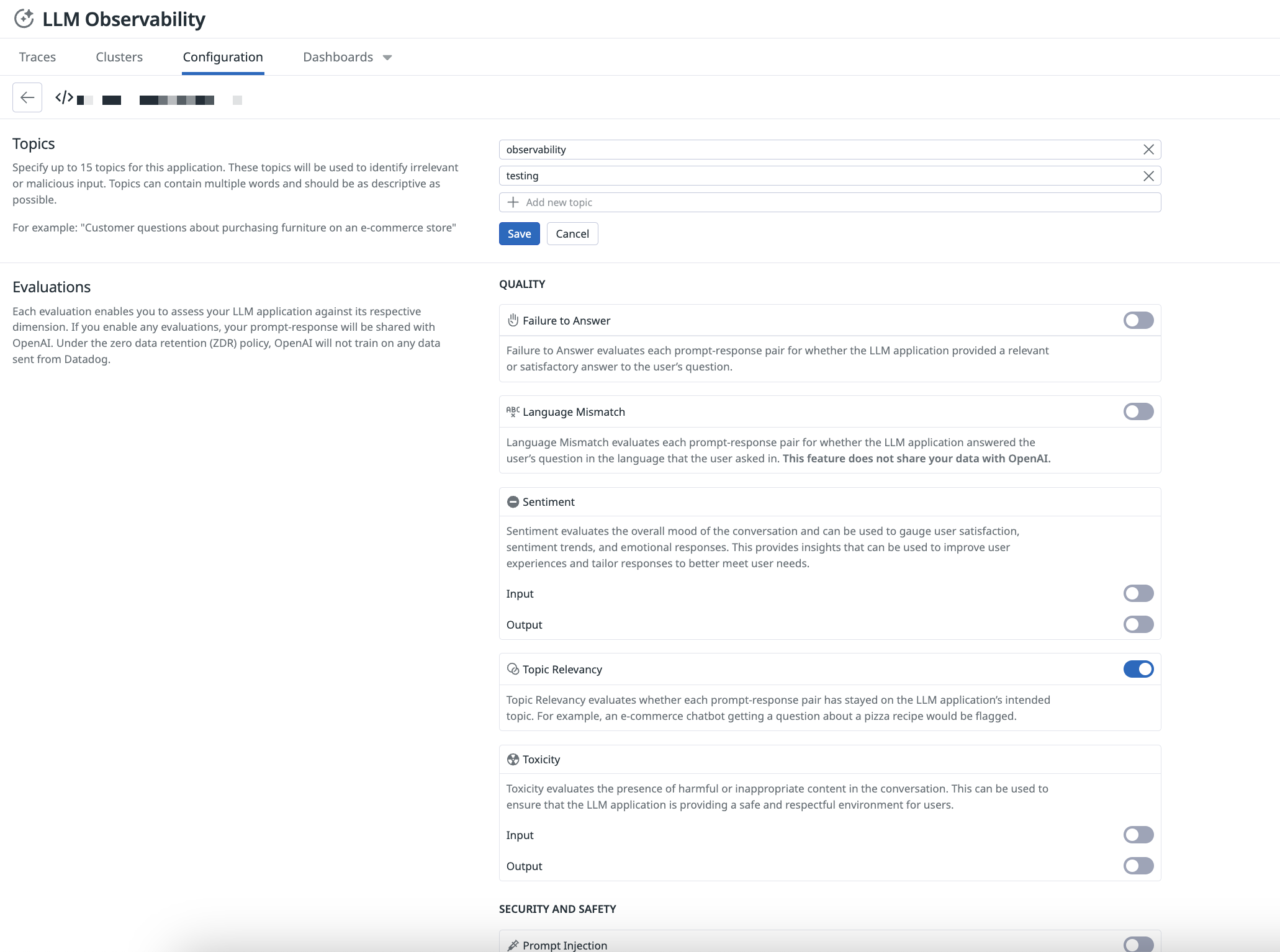

Configuration

Overview

You can configure your LLM applications on the Configuration page with settings that can optimize your application’s performance and security.

- Topics: Helps identify irrelevant input for the topic relevancy out-of-the-box evaluation, ensuring your LLM application stays focused on its intended purpose.

- Evaluations: Enables Datadog to assess your LLM application against respective dimensions like Quality and Security and Safety. By enabling evaluations, you can assess the effectiveness of your application’s responses and maintain high standards for both performance and user safety.

Select an LLM application set up with LLM Observability to start customizing its topics and evaluations.

Enabling any out-of-the-box evaluation other than Language Mismatch shares your input and output with OpenAI.

Enter a topic

To enter a topic, click the Edit icon and add keywords. For example, for an LLM application designed for incident management, add “observability”, “software engineering”, or “incident resolution”.

Topics can contain multiple words and should be as specific and descriptive as possible. For example, if your application handles customer inquiries for an e-commerce store, you can use “Customer questions about purchasing furniture on an e-commerce store”.

Select evaluations

To enable evaluations, go to the Quality and Security and Safety sections and click the toggle for each evaluation you want to assess your LLM application against. For more information about evaluations, see Terms and Concepts.

By enabling the out-of-the-box evaluations, you acknowledge that Datadog is authorized to share your company's data with OpenAI LLC for the purpose of providing and improving LLM Observability. OpenAI will not use your data for training or tuning purposes. If you have any questions or want to opt out of features that depend on OpenAI, reach out to your account representative.
