Install the Datadog Agent with Embedded OpenTelemetry Collector

Join the Preview!

The Datadog Agent with embedded OpenTelemetry Collector is in Preview. To request access, fill out this form.

FedRAMP customers should not enable or use the embedded OpenTelemetry Collector.

Overview

Follow this guide to install the Datadog Agent with the OpenTelemetry Collector using Helm.

If you need OpenTelemetry components beyond those provided in the default package, follow Use Custom OpenTelemetry Components to bring your own components and extend the Datadog Agent's capabilities. For a list of components included by default, see Included components.

Requirements

To complete this guide, you need the following:

Datadog account:

- Create a Datadog account if you don’t have one.

- Find or create your Datadog API key.

- Find or create your Datadog application key.

Software: Install and set up the following on your machine:

- A Kubernetes cluster (v1.29+)

- Note: EKS Fargate environments are not supported

- Helm (v3+)

- Docker

- kubectl

Install the Datadog Agent with OpenTelemetry Collector

Select installation method

Choose one of the following installation methods:

- Datadog Operator: A Kubernetes-native approach that automatically reconciles and maintains your Datadog setup. It reports deployment status, health, and errors in its Custom Resource status, and it limits the risk of misconfiguration thanks to higher-level configuration options.

- Helm chart: A straightforward way to deploy Datadog Agent. It provides versioning, rollback, and templating capabilities, making deployments consistent and easier to replicate.

Install the Datadog Operator

You can install the Datadog Operator in your cluster using the Datadog Operator Helm chart:

helm repo add datadog https://helm.datadoghq.com

helm repo update

helm install datadog-operator datadog/datadog-operator

Add the Datadog Helm Repository

To add the Datadog repository to your Helm repositories:

helm repo add datadog https://helm.datadoghq.com

helm repo update

Set up Datadog API and application keys

- Get the Datadog API and application keys.

- Store the keys as a Kubernetes secret:

kubectl create secret generic datadog-secret \
  --from-literal api-key=<DD_API_KEY> \
  --from-literal app-key=<DD_APP_KEY>

Replace <DD_API_KEY> and <DD_APP_KEY> with your actual Datadog API and application keys.
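If you prefer to manage the secret declaratively, the following sketch builds a Secret manifest equivalent to the one the kubectl command creates. It relies on the standard Kubernetes convention that values under `data` are base64-encoded. The function name `build_secret_manifest` and the placeholder key values are hypothetical and used only for illustration.

```python
import base64
import json


def build_secret_manifest(api_key: str, app_key: str, name: str = "datadog-secret") -> dict:
    """Return a Secret manifest holding the Datadog API and application keys."""

    def encode(value: str) -> str:
        # Kubernetes stores Secret `data` values as base64-encoded strings.
        return base64.b64encode(value.encode("utf-8")).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # The key names match the --from-literal arguments used in this guide.
        "data": {"api-key": encode(api_key), "app-key": encode(app_key)},
    }


if __name__ == "__main__":
    # Placeholder values; substitute your real keys before applying.
    manifest = build_secret_manifest("<DD_API_KEY>", "<DD_APP_KEY>")
    print(json.dumps(manifest, indent=2))
```

Because JSON is valid YAML, the printed output can be saved to a file and applied with kubectl apply -f.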

Configure the Datadog Agent

After deploying the Datadog Operator, create the DatadogAgent resource that triggers the deployment of the Datadog Agent, Cluster Agent and Cluster Checks Runners (if used) in your Kubernetes cluster. The Datadog Agent deploys as a DaemonSet, running a pod on every node of your cluster.

- Use the datadog-agent.yaml file to specify your DatadogAgent deployment configuration.

datadog-agent.yaml

apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    clusterName: <CLUSTER_NAME>
    site: <DATADOG_SITE>
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
      appSecret:
        secretName: datadog-secret
        keyName: app-key

- Replace <CLUSTER_NAME> with a name for your cluster.

- Replace <DATADOG_SITE> with your Datadog site.

- Switch the Datadog Agent image to use builds with embedded OpenTelemetry Collector:

datadog-agent.yaml

...
override:
  # Node Agent configuration
  nodeAgent:
    image:
      name: "gcr.io/datadoghq/agent:7.62.2-ot-beta"
      pullPolicy: Always

This guide uses a Java application example. The -jmx suffix in an image tag (as in 7.62.2-ot-beta-jmx) enables JMX utilities. For non-Java applications, use 7.62.2-ot-beta. For more details, see Autodiscovery and JMX integration guide.

By default, the Agent image is pulled from Google Artifact Registry (gcr.io/datadoghq). If Artifact Registry is not accessible in your deployment region, use another registry.

- Enable the OpenTelemetry Collector:

datadog-agent.yaml

...
# Enable Features
features:
  otelCollector:
    enabled: true

The Datadog Operator automatically binds the OpenTelemetry Collector to ports 4317 (named otel-grpc) and 4318 (named otel-http) by default.

To explicitly override the default ports, use the features.otelCollector.ports parameter:

datadog-agent.yaml

...
# Enable Features
features:
  otelCollector:
    enabled: true
    ports:
      - containerPort: 4317
        hostPort: 4317
        name: otel-grpc
      - containerPort: 4318
        hostPort: 4318
        name: otel-http

When configuring ports 4317 and 4318, you must use the default names otel-grpc and otel-http respectively to avoid port conflicts.

- (Optional) Enable additional Datadog features:

Enabling these features may incur additional charges. Review the pricing page and talk to your CSM before proceeding.

datadog-agent.yaml

# Enable Features
features:
  ...
  apm:
    enabled: true
  orchestratorExplorer:
    enabled: true
  processDiscovery:
    enabled: true
  liveProcessCollection:
    enabled: true
  usm:
    enabled: true
  clusterChecks:
    enabled: true

Completed datadog-agent.yaml file

Your datadog-agent.yaml file should look something like this:

datadog-agent.yaml

apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    clusterName: <CLUSTER_NAME>
    site: <DATADOG_SITE>
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
      appSecret:
        secretName: datadog-secret
        keyName: app-key
  override:
    # Node Agent configuration
    nodeAgent:
      image:
        name: "gcr.io/datadoghq/agent:7.62.2-ot-beta"
        pullPolicy: Always
  # Enable Features
  features:
    apm:
      enabled: true
    orchestratorExplorer:
      enabled: true
    processDiscovery:
      enabled: true
    liveProcessCollection:
      enabled: true
    usm:
      enabled: true
    clusterChecks:
      enabled: true
    otelCollector:
      enabled: true
      ports:
        - containerPort: 4317
          hostPort: 4317
          name: otel-grpc
        - containerPort: 4318
          hostPort: 4318
          name: otel-http

Use a YAML file to specify the Helm chart parameters for the Datadog Agent chart.

- Create an empty datadog-values.yaml file:

touch datadog-values.yaml

Unspecified parameters use defaults from values.yaml.

- Configure the Datadog API and application key secrets:

datadog-values.yaml

datadog:
  site: <DATADOG_SITE>
  apiKeyExistingSecret: datadog-secret
  appKeyExistingSecret: datadog-secret
  logLevel: info

Set <DATADOG_SITE> to your Datadog site. Otherwise, it defaults to datadoghq.com, the US1 site.

Set the datadog.logLevel parameter value in lowercase. Valid log levels are: trace, debug, info, warn, error, critical, off.

- Switch the Datadog Agent image tag to use builds with the embedded OpenTelemetry Collector:

datadog-values.yaml

agents:
  image:
    repository: gcr.io/datadoghq/agent
    tag: 7.62.2-ot-beta-jmx
    doNotCheckTag: true
...

This guide uses a Java application example. The -jmx suffix in the image tag enables JMX utilities. For non-Java applications, use 7.62.2-ot-beta instead. For more details, see Autodiscovery and JMX integration guide.

By default, the Agent image is pulled from Google Artifact Registry (gcr.io/datadoghq). If Artifact Registry is not accessible in your deployment region, use another registry.

- Enable the OpenTelemetry Collector and configure the essential ports:

datadog-values.yaml

datadog:
  ...
  otelCollector:
    enabled: true
    ports:
      - containerPort: "4317" # default port for OpenTelemetry gRPC receiver
        hostPort: "4317"
        name: otel-grpc
      - containerPort: "4318" # default port for OpenTelemetry HTTP receiver
        hostPort: "4318"
        name: otel-http

Set the hostPort to expose the container port to the external network. This lets you point the OTLP exporter at the IP address of the node where the Datadog Agent is running.

If you don’t want to expose the port, you can use the Agent service instead:

- Remove the hostPort entries from your datadog-values.yaml file.

- In your application’s deployment file (deployment.yaml), configure the OTLP exporter to use the Agent service:

env:
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: 'http://<SERVICE_NAME>.<SERVICE_NAMESPACE>.svc.cluster.local'
  - name: OTEL_EXPORTER_OTLP_PROTOCOL
    value: 'grpc'

- (Optional) Enable additional Datadog features:

Enabling these features may incur additional charges. Review the pricing page and talk to your CSM before proceeding.

datadog-values.yaml

datadog:
  ...
  apm:
    portEnabled: true
    peer_tags_aggregation: true
    compute_stats_by_span_kind: true
    peer_service_aggregation: true
  orchestratorExplorer:
    enabled: true
  processAgent:
    enabled: true
    processCollection: true

- (Optional) Collect pod labels and use them as tags to attach to metrics, traces, and logs:

Custom metrics may impact billing. See the custom metrics billing page for more information.

datadog-values.yaml

datadog:
  ...
  podLabelsAsTags:
    app: kube_app
    release: helm_release

Completed datadog-values.yaml file

Your datadog-values.yaml file should look something like this:

datadog-values.yaml

agents:
  image:
    repository: gcr.io/datadoghq/agent
    tag: 7.62.2-ot-beta-jmx
    doNotCheckTag: true
datadog:
  site: datadoghq.com
  apiKeyExistingSecret: datadog-secret
  appKeyExistingSecret: datadog-secret
  logLevel: info
  otelCollector:
    enabled: true
    ports:
      - containerPort: "4317"
        hostPort: "4317"
        name: otel-grpc
      - containerPort: "4318"
        hostPort: "4318"
        name: otel-http
  apm:
    portEnabled: true
    peer_tags_aggregation: true
    compute_stats_by_span_kind: true
    peer_service_aggregation: true
  orchestratorExplorer:
    enabled: true
  processAgent:
    enabled: true
    processCollection: true
  podLabelsAsTags:
    app: kube_app
    release: helm_release

Configure the OpenTelemetry Collector

The Datadog Operator provides a sample OpenTelemetry Collector configuration that you can use as a starting point. If you need to modify this configuration, the Datadog Operator supports two ways of providing a custom Collector configuration:

- Inline configuration: Add your custom Collector configuration directly in the features.otelCollector.conf.configData field.

- ConfigMap-based configuration: Store your Collector configuration in a ConfigMap and reference it in the features.otelCollector.conf.configMap field. This approach allows you to keep Collector configuration decoupled from the DatadogAgent resource.

Inline Collector configuration

In the snippet below, the Collector configuration is placed directly under the features.otelCollector.conf.configData parameter:

datadog-agent.yaml

...
# Enable Features
features:
  otelCollector:
    enabled: true
    ports:
      - containerPort: 4317
        hostPort: 4317
        name: otel-grpc
      - containerPort: 4318
        hostPort: 4318
        name: otel-http
    conf:
      configData: |-
        receivers:
          prometheus:
            config:
              scrape_configs:
                - job_name: "otelcol"
                  scrape_interval: 10s
                  static_configs:
                    - targets:
                        - 0.0.0.0:8888
          otlp:
            protocols:
              grpc:
                endpoint: 0.0.0.0:4317
              http:
                endpoint: 0.0.0.0:4318
        exporters:
          debug:
            verbosity: detailed
          datadog:
            api:
              key: ${env:DD_API_KEY}
              site: ${env:DD_SITE}
        processors:
          infraattributes:
            cardinality: 2
          batch:
            timeout: 10s
        connectors:
          datadog/connector:
            traces:
              compute_top_level_by_span_kind: true
              peer_tags_aggregation: true
              compute_stats_by_span_kind: true
        service:
          pipelines:
            traces:
              receivers: [otlp]
              processors: [infraattributes, batch]
              exporters: [debug, datadog, datadog/connector]
            metrics:
              receivers: [otlp, datadog/connector, prometheus]
              processors: [infraattributes, batch]
              exporters: [debug, datadog]
            logs:
              receivers: [otlp]
              processors: [infraattributes, batch]
              exporters: [debug, datadog]

When you apply the datadog-agent.yaml file containing this DatadogAgent resource, the Operator automatically mounts the Collector configuration into the Agent DaemonSet.

Completed datadog-agent.yaml file

Completed datadog-agent.yaml with inline Collector configuration should look something like this:

datadog-agent.yaml

apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    clusterName: <CLUSTER_NAME>
    site: <DATADOG_SITE>
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
      appSecret:
        secretName: datadog-secret
        keyName: app-key
  override:
    # Node Agent configuration
    nodeAgent:
      image:
        name: "gcr.io/datadoghq/agent:7.62.2-ot-beta"
        pullPolicy: Always
  # Enable Features
  features:
    apm:
      enabled: true
    orchestratorExplorer:
      enabled: true
    processDiscovery:
      enabled: true
    liveProcessCollection:
      enabled: true
    usm:
      enabled: true
    clusterChecks:
      enabled: true
    otelCollector:
      enabled: true
      ports:
        - containerPort: 4317
          hostPort: 4317
          name: otel-grpc
        - containerPort: 4318
          hostPort: 4318
          name: otel-http
      conf:
        configData: |-
          receivers:
            prometheus:
              config:
                scrape_configs:
                  - job_name: "datadog-agent"
                    scrape_interval: 10s
                    static_configs:
                      - targets:
                          - 0.0.0.0:8888
            otlp:
              protocols:
                grpc:
                  endpoint: 0.0.0.0:4317
                http:
                  endpoint: 0.0.0.0:4318
          exporters:
            debug:
              verbosity: detailed
            datadog:
              api:
                key: ${env:DD_API_KEY}
                site: ${env:DD_SITE}
          processors:
            infraattributes:
              cardinality: 2
            batch:
              timeout: 10s
          connectors:
            datadog/connector:
              traces:
                compute_top_level_by_span_kind: true
                peer_tags_aggregation: true
                compute_stats_by_span_kind: true
          service:
            pipelines:
              traces:
                receivers: [otlp]
                processors: [infraattributes, batch]
                exporters: [debug, datadog, datadog/connector]
              metrics:
                receivers: [otlp, datadog/connector, prometheus]
                processors: [infraattributes, batch]
                exporters: [debug, datadog]
              logs:
                receivers: [otlp]
                processors: [infraattributes, batch]
                exporters: [debug, datadog]

ConfigMap-based Collector Configuration

For more complex or frequently updated configurations, storing Collector configuration in a ConfigMap can simplify version control.

- Create a ConfigMap that contains your Collector configuration:

configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-agent-config-map
  namespace: system
data:
  # must be named otel-config.yaml
  otel-config.yaml: |-
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: "datadog-agent"
              scrape_interval: 10s
              static_configs:
                - targets:
                    - 0.0.0.0:8888
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
    exporters:
      debug:
        verbosity: detailed
      datadog:
        api:
          key: ${env:DD_API_KEY}
          site: ${env:DD_SITE}
    processors:
      infraattributes:
        cardinality: 2
      batch:
        timeout: 10s
    connectors:
      datadog/connector:
        traces:
          compute_top_level_by_span_kind: true
          peer_tags_aggregation: true
          compute_stats_by_span_kind: true
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [infraattributes, batch]
          exporters: [debug, datadog, datadog/connector]
        metrics:
          receivers: [otlp, datadog/connector, prometheus]
          processors: [infraattributes, batch]
          exporters: [debug, datadog]
        logs:
          receivers: [otlp]
          processors: [infraattributes, batch]
          exporters: [debug, datadog]

The field for Collector config in the ConfigMap must be called otel-config.yaml.

- Reference the otel-agent-config-map ConfigMap in your DatadogAgent resource using the features.otelCollector.conf.configMap parameter:

datadog-agent.yaml

...
# Enable Features
features:
  otelCollector:
    enabled: true
    ports:
      - containerPort: 4317
        hostPort: 4317
        name: otel-grpc
      - containerPort: 4318
        hostPort: 4318
        name: otel-http
    conf:
      configMap:
        name: otel-agent-config-map

The Operator automatically mounts otel-config.yaml from the ConfigMap into the Agent’s OpenTelemetry Collector DaemonSet.
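The two naming requirements in this step (the ConfigMap name referenced by the DatadogAgent resource, and the otel-config.yaml data key) are easy to get wrong. The following is a minimal sketch of a pre-apply check, assuming the manifests have already been loaded into Python dictionaries. The function name `check_collector_configmap` is hypothetical and is not part of any Datadog tooling.

```python
REQUIRED_KEY = "otel-config.yaml"


def check_collector_configmap(configmap: dict, referenced_name: str) -> list[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    if configmap.get("kind") != "ConfigMap":
        problems.append("resource is not a ConfigMap")
    name = configmap.get("metadata", {}).get("name")
    if name != referenced_name:
        # features.otelCollector.conf.configMap.name must match metadata.name.
        problems.append(
            f"ConfigMap is named {name!r}, but the DatadogAgent references {referenced_name!r}"
        )
    if REQUIRED_KEY not in configmap.get("data", {}):
        # This guide states the Collector config field must be called otel-config.yaml.
        problems.append(f"data must contain the key {REQUIRED_KEY!r}")
    return problems


if __name__ == "__main__":
    configmap = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "otel-agent-config-map"},
        "data": {"otel-config.yaml": "receivers: {}"},
    }
    print(check_collector_configmap(configmap, "otel-agent-config-map"))
```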

Completed datadog-agent.yaml file

Completed datadog-agent.yaml with Collector configuration defined as ConfigMap should look something like this:

datadog-agent.yaml

apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    clusterName: <CLUSTER_NAME>
    site: <DATADOG_SITE>
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
      appSecret:
        secretName: datadog-secret
        keyName: app-key
  override:
    # Node Agent configuration
    nodeAgent:
      image:
        name: "gcr.io/datadoghq/agent:7.62.2-ot-beta"
        pullPolicy: Always
  # Enable Features
  features:
    apm:
      enabled: true
    orchestratorExplorer:
      enabled: true
    processDiscovery:
      enabled: true
    liveProcessCollection:
      enabled: true
    usm:
      enabled: true
    clusterChecks:
      enabled: true
    otelCollector:
      enabled: true
      ports:
        - containerPort: 4317
          hostPort: 4317
          name: otel-grpc
        - containerPort: 4318
          hostPort: 4318
          name: otel-http
      conf:
        configMap:
          name: otel-agent-config-map
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-agent-config-map
  namespace: system
data:
  # must be named otel-config.yaml
  otel-config.yaml: |-
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: "datadog-agent"
              scrape_interval: 10s
              static_configs:
                - targets:
                    - 0.0.0.0:8888
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
    exporters:
      debug:
        verbosity: detailed
      datadog:
        api:
          key: ${env:DD_API_KEY}
          site: ${env:DD_SITE}
    processors:
      infraattributes:
        cardinality: 2
      batch:
        timeout: 10s
    connectors:
      datadog/connector:
        traces:
          compute_top_level_by_span_kind: true
          peer_tags_aggregation: true
          compute_stats_by_span_kind: true
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [infraattributes, batch]
          exporters: [debug, datadog, datadog/connector]
        metrics:
          receivers: [otlp, datadog/connector, prometheus]
          processors: [infraattributes, batch]
          exporters: [debug, datadog]
        logs:
          receivers: [otlp]
          processors: [infraattributes, batch]
          exporters: [debug, datadog]

The Datadog Helm chart provides a sample OpenTelemetry Collector configuration that you can use as a starting point. This section walks you through the predefined pipelines and included OpenTelemetry components.

This is the full OpenTelemetry Collector configuration in otel-config.yaml:

otel-config.yaml

receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: "otelcol"
          scrape_interval: 10s
          static_configs:
            - targets: ["0.0.0.0:8888"]
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
exporters:
  debug:
    verbosity: detailed
  datadog:
    api:
      key: ${env:DD_API_KEY}
      site: ${env:DD_SITE}
processors:
  infraattributes:
    cardinality: 2
  batch:
    timeout: 10s
connectors:
  datadog/connector:
    traces:
      compute_top_level_by_span_kind: true
      peer_tags_aggregation: true
      compute_stats_by_span_kind: true
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [infraattributes, batch]
      exporters: [datadog, datadog/connector]
    metrics:
      receivers: [otlp, datadog/connector, prometheus]
      processors: [infraattributes, batch]
      exporters: [datadog]
    logs:
      receivers: [otlp]
      processors: [infraattributes, batch]
      exporters: [datadog]

Key components

To send telemetry data to Datadog, the following components are defined in the configuration:

Datadog connector

The Datadog connector computes Datadog APM trace metrics.

otel-config.yaml

connectors:
  datadog/connector:
    traces:
      compute_top_level_by_span_kind: true
      peer_tags_aggregation: true
      compute_stats_by_span_kind: true

Datadog exporter

The Datadog exporter exports traces, metrics, and logs to Datadog.

otel-config.yaml

exporters:
  datadog:
    api:
      key: ${env:DD_API_KEY}
      site: ${env:DD_SITE}

Note: If key is not specified or set to a secret, or if site is not specified, the system uses values from the core Agent configuration. By default, the core Agent sets site to datadoghq.com (US1).

Prometheus receiver

The Prometheus receiver collects health metrics from the OpenTelemetry Collector for the metrics pipeline.

otel-config.yaml

receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: "otelcol"
          scrape_interval: 10s
          static_configs:
            - targets: ["0.0.0.0:8888"]

For more information, see the Collector Health Metrics documentation.
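The connector, exporter, and receivers described in this section only take effect when a pipeline under service.pipelines references them by name. A connector is referenced twice: as an exporter in one pipeline and as a receiver in another. The sketch below mirrors the component names and pipelines from the sample otel-config.yaml as a Python dictionary and reports any pipeline entry that has no matching definition. The helper name `undefined_components` is hypothetical, and this is an illustrative check rather than the Collector's own validation.

```python
SAMPLE_CONFIG = {
    "receivers": {"prometheus": {}, "otlp": {}},
    "exporters": {"debug": {}, "datadog": {}},
    "processors": {"infraattributes": {}, "batch": {}},
    "connectors": {"datadog/connector": {}},
    "service": {
        "pipelines": {
            "traces": {
                "receivers": ["otlp"],
                "processors": ["infraattributes", "batch"],
                "exporters": ["datadog", "datadog/connector"],
            },
            "metrics": {
                "receivers": ["otlp", "datadog/connector", "prometheus"],
                "processors": ["infraattributes", "batch"],
                "exporters": ["datadog"],
            },
            "logs": {
                "receivers": ["otlp"],
                "processors": ["infraattributes", "batch"],
                "exporters": ["datadog"],
            },
        }
    },
}


def undefined_components(config: dict) -> list[str]:
    """List pipeline entries that reference a component with no definition."""
    connectors = set(config.get("connectors", {}))
    # A connector may appear as an exporter in one pipeline and as a receiver in another.
    allowed = {
        "receivers": set(config.get("receivers", {})) | connectors,
        "processors": set(config.get("processors", {})),
        "exporters": set(config.get("exporters", {})) | connectors,
    }
    missing = []
    for pipeline, roles in config["service"]["pipelines"].items():
        for role, defined in allowed.items():
            for name in roles.get(role, []):
                if name not in defined:
                    missing.append(f"{pipeline}.{role}: {name}")
    return missing


if __name__ == "__main__":
    print(undefined_components(SAMPLE_CONFIG))
```

Removing the connectors section from the dictionary makes the check flag both places where datadog/connector is used, which shows how the traces pipeline feeds the metrics pipeline.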

Deploy the Agent with the OpenTelemetry Collector

Deploy the Datadog Agent with the configuration file:

kubectl apply -f datadog-agent.yaml

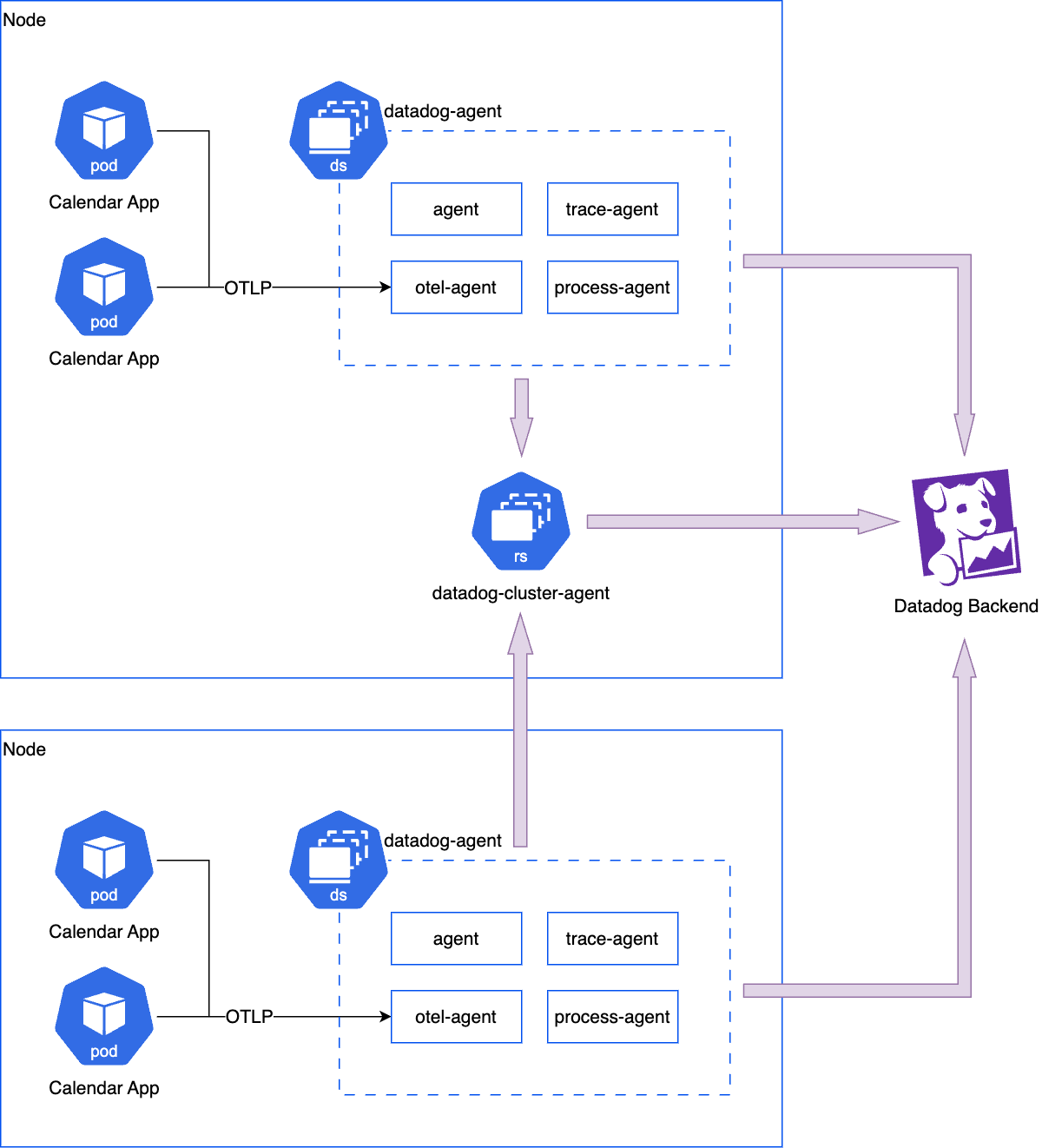

This deploys the Datadog Agent as a DaemonSet with the embedded OpenTelemetry Collector. The Collector runs on the same host as your application, following the Agent deployment pattern. The Gateway deployment pattern is not supported.

To install or upgrade the Datadog Agent with OpenTelemetry Collector in your Kubernetes environment, use one of the following Helm commands:

For default OpenTelemetry Collector configuration:

helm upgrade -i <RELEASE_NAME> datadog/datadog -f datadog-values.yaml

For custom OpenTelemetry Collector configuration:

helm upgrade -i <RELEASE_NAME> datadog/datadog \
  -f datadog-values.yaml \
  --set-file datadog.otelCollector.config=otel-config.yaml

This command allows you to specify your own otel-config.yaml file.

Replace <RELEASE_NAME> with the Helm release name you are using.

You may see warnings during the deployment process. These warnings can be ignored.

This Helm chart deploys the Datadog Agent with OpenTelemetry Collector as a DaemonSet. The Collector is deployed on the same host as your application, following the Agent deployment pattern. The Gateway deployment pattern is not supported.

Deployment diagram

Send your telemetry to Datadog

To send your telemetry data to Datadog:

- Instrument your application

- Configure the application

- Correlate observability data

- Run your application

Instrument the application

Instrument your application using the OpenTelemetry API.

As an example, you can use the Calendar sample application that’s already instrumented for you.

- Clone the opentelemetry-examples repository to your device:

git clone https://github.com/DataDog/opentelemetry-examples.git

- Navigate to the /calendar directory:

cd opentelemetry-examples/apps/rest-services/java/calendar

- The following code instruments the CalendarService.getDate() method using the OpenTelemetry annotations and API:

CalendarService.java

@WithSpan(kind = SpanKind.CLIENT)
public String getDate() {
    Span span = Span.current();
    span.setAttribute("peer.service", "random-date-service");
    ...
}

Configure the application

To configure your application container, ensure that the correct OTLP endpoint hostname is used. The Datadog Agent with OpenTelemetry Collector is deployed as a DaemonSet, so the current host needs to be targeted.

The Calendar application container is already configured with the correct OTEL_EXPORTER_OTLP_ENDPOINT environment variable, as defined in its Helm chart:

- Go to the Calendar application’s Deployment manifest file:

./deploys/calendar/templates/deployment.yaml

- The following environment variables configure the OTLP endpoint:

deployment.yaml

env:
  ...
  - name: HOST_IP
    valueFrom:
      fieldRef:
        fieldPath: status.hostIP
  - name: OTLP_GRPC_PORT
    value: "4317"
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: 'http://$(HOST_IP):$(OTLP_GRPC_PORT)'
  - name: OTEL_EXPORTER_OTLP_PROTOCOL
    value: 'grpc'
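The endpoint value relies on Kubernetes dependent environment variables: a `$(VAR)` reference is replaced with the value of a variable defined earlier in the same env list, and a reference that cannot be resolved is left as-is. The sketch below is a simplified model of that expansion, meant only to show what the container ends up seeing. The function name `expand_env` and the example node IP are assumptions; in the real manifest, HOST_IP comes from status.hostIP.

```python
import re

# Matches either an escaped "$$" or a "$(NAME)" reference.
_REFERENCE = re.compile(r"\$\$|\$\(([^)]+)\)")


def expand_env(env: list[tuple[str, str]]) -> dict[str, str]:
    """Expand $(VAR) references using variables defined earlier in the list."""
    resolved: dict[str, str] = {}

    def substitute(match: re.Match) -> str:
        if match.group(0) == "$$":
            # "$$" is an escape for a literal "$".
            return "$"
        # Unresolved references are kept unchanged.
        return resolved.get(match.group(1), match.group(0))

    for name, value in env:
        resolved[name] = _REFERENCE.sub(substitute, value)
    return resolved


if __name__ == "__main__":
    env = [
        ("HOST_IP", "10.0.0.12"),  # assumed example value for status.hostIP
        ("OTLP_GRPC_PORT", "4317"),
        ("OTEL_EXPORTER_OTLP_ENDPOINT", "http://$(HOST_IP):$(OTLP_GRPC_PORT)"),
    ]
    print(expand_env(env)["OTEL_EXPORTER_OTLP_ENDPOINT"])
```

Order matters in this model: HOST_IP and OTLP_GRPC_PORT have to appear before OTEL_EXPORTER_OTLP_ENDPOINT in the list, which is how the manifest arranges them.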

Correlate observability data

Unified service tagging ties observability data together in Datadog so you can navigate across metrics, traces, and logs with consistent tags.

In this example, the Calendar application is already configured with unified service tagging, as defined in its Helm chart:

- Go to the Calendar application’s Deployment manifest file:

./deploys/calendar/templates/deployment.yaml

- The following environment variables configure the resource attributes used for unified service tagging:

deployment.yaml

env:
  ...
  - name: OTEL_SERVICE_NAME
    value: {{ include "calendar.fullname" . }}
  - name: OTEL_K8S_NAMESPACE
    valueFrom:
      fieldRef:
        apiVersion: v1
        fieldPath: metadata.namespace
  - name: OTEL_K8S_NODE_NAME
    valueFrom:
      fieldRef:
        apiVersion: v1
        fieldPath: spec.nodeName
  - name: OTEL_K8S_POD_NAME
    valueFrom:
      fieldRef:
        apiVersion: v1
        fieldPath: metadata.name
  - name: OTEL_EXPORTER_OTLP_PROTOCOL
    value: 'grpc'
  - name: OTEL_RESOURCE_ATTRIBUTES
    value: >-
      service.name=$(OTEL_SERVICE_NAME),
      k8s.namespace.name=$(OTEL_K8S_NAMESPACE),
      k8s.node.name=$(OTEL_K8S_NODE_NAME),
      k8s.pod.name=$(OTEL_K8S_POD_NAME),
      k8s.container.name={{ .Chart.Name }},
      host.name=$(OTEL_K8S_NODE_NAME),
      deployment.environment=$(OTEL_K8S_NAMESPACE)
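OTEL_RESOURCE_ATTRIBUTES is a comma-separated list of key=value pairs. The sketch below parses such a string and picks out the attributes that correspond to Datadog's unified service tags (service.name to service, deployment.environment to env, service.version to version). The function names and sample values are hypothetical. The parser strips whitespace around each pair because the folded YAML scalar in the manifest joins its lines with spaces.

```python
def parse_resource_attributes(raw: str) -> dict[str, str]:
    """Parse a comma-separated key=value string into a dictionary."""
    attributes: dict[str, str] = {}
    for pair in raw.split(","):
        pair = pair.strip()
        if not pair:
            continue
        key, _, value = pair.partition("=")
        attributes[key.strip()] = value.strip()
    return attributes


def unified_service_tags(attributes: dict[str, str]) -> dict[str, str]:
    """Select the attributes that map to the service, env, and version tags."""
    mapping = {
        "service.name": "service",
        "deployment.environment": "env",
        "service.version": "version",
    }
    return {tag: attributes[attr] for attr, tag in mapping.items() if attr in attributes}


if __name__ == "__main__":
    # Sample values; in the cluster these come from the expanded env variables.
    raw = (
        "service.name=calendar, k8s.namespace.name=default, "
        "k8s.pod.name=calendar-abc123, deployment.environment=default"
    )
    print(unified_service_tags(parse_resource_attributes(raw)))
```

With this manifest, the env tag takes the value of the pod's namespace, since deployment.environment is set from OTEL_K8S_NAMESPACE.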

Run the application

To start generating and forwarding observability data to Datadog, you need to deploy the Calendar application with the OpenTelemetry SDK using Helm.

- Run the following helm command from the calendar/ folder:

helm upgrade -i <CALENDAR_RELEASE_NAME> ./deploys/calendar/

- This Helm chart deploys the sample Calendar application as a ReplicaSet.

- To test that the Calendar application is running correctly, execute the following command from another terminal window:

curl localhost:9090/calendar

- Verify that you receive a response like:

{"date":"2024-12-30"}

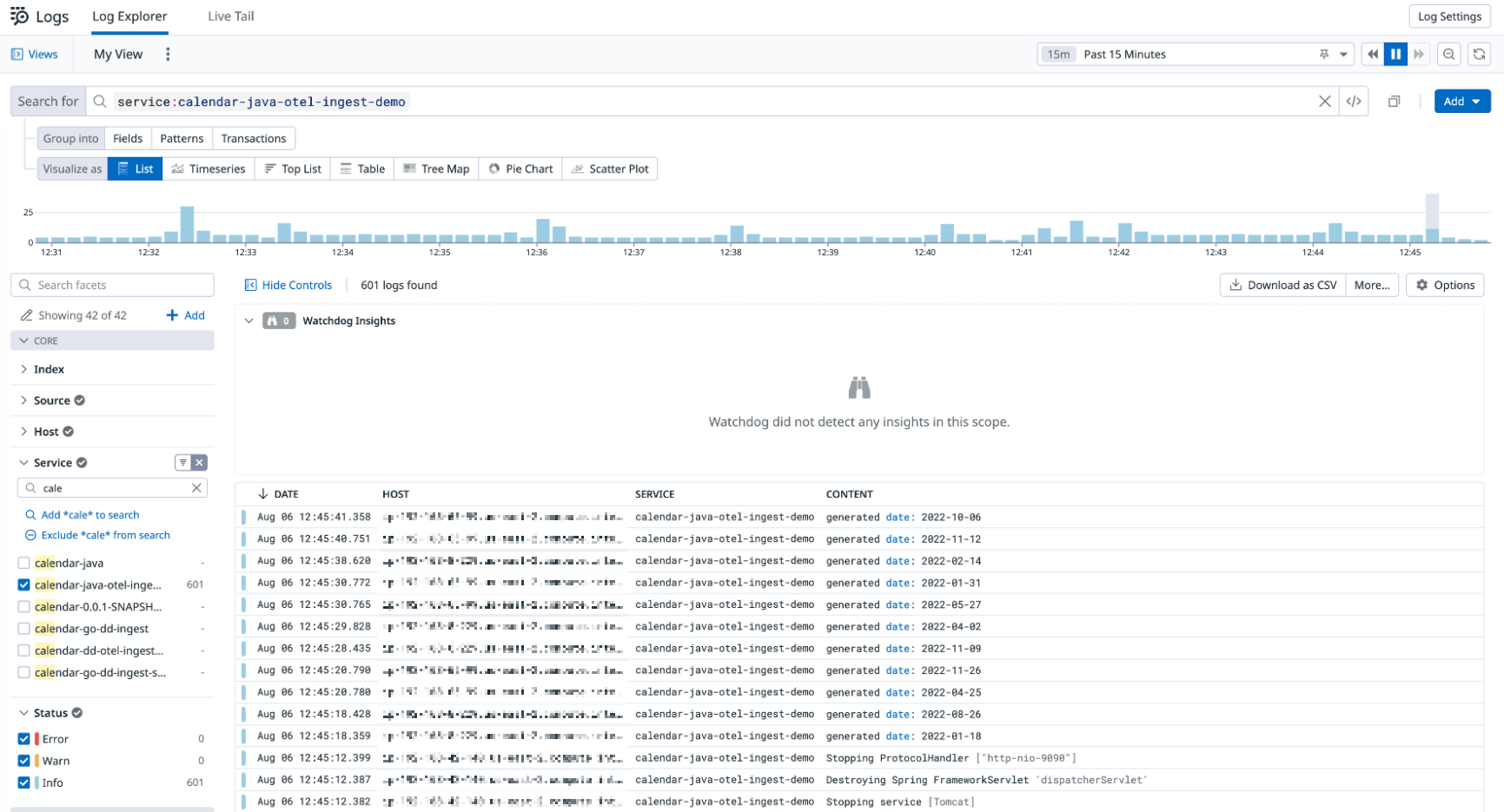

Each call to the Calendar application results in metrics, traces, and logs being forwarded to the Datadog backend.

Explore observability data in Datadog

Use Datadog to explore the observability data for the sample Calendar app.

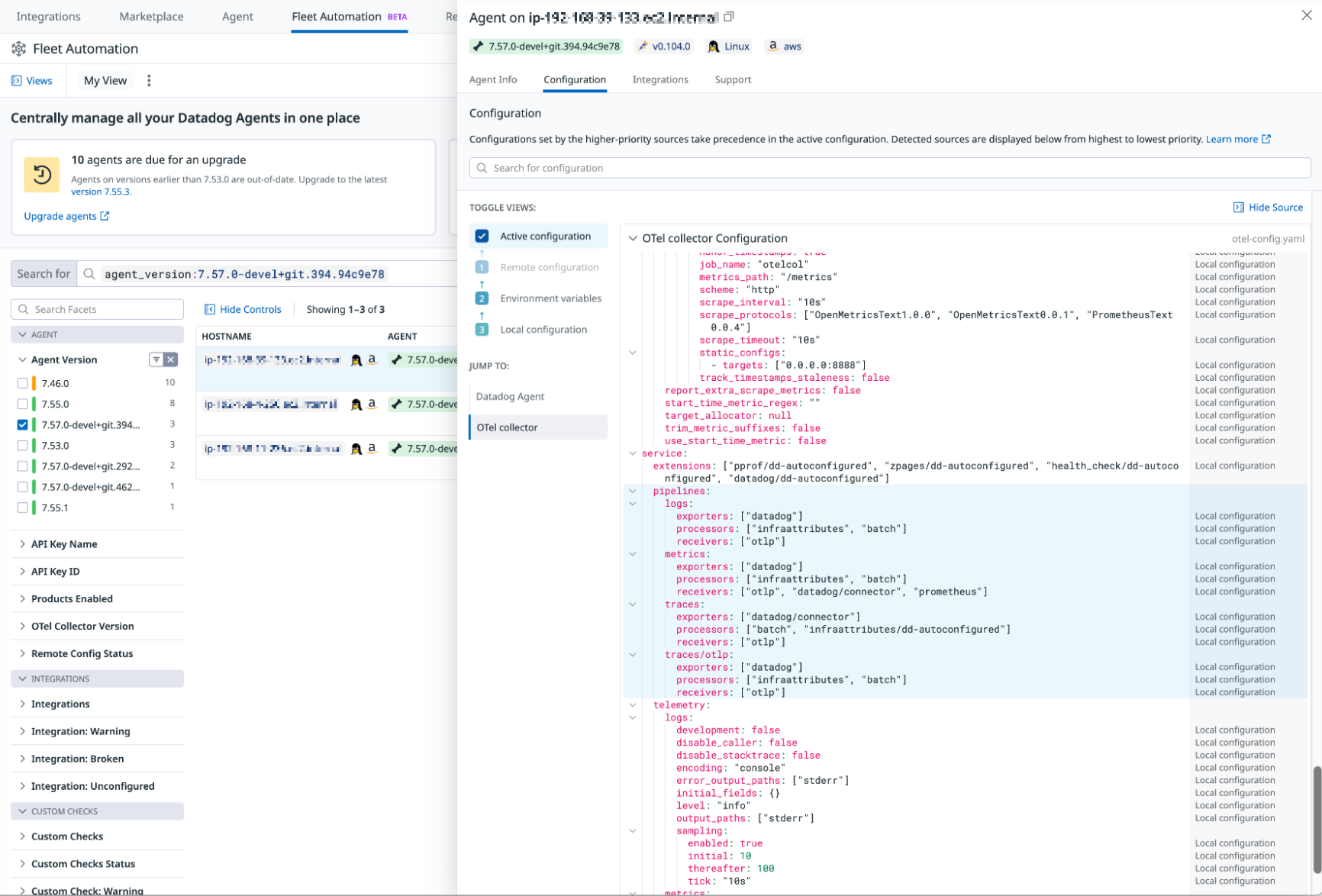

Fleet automation

Explore your Datadog Agent and Collector configuration.

Live container monitoring

Monitor your container health using Live Container Monitoring capabilities.

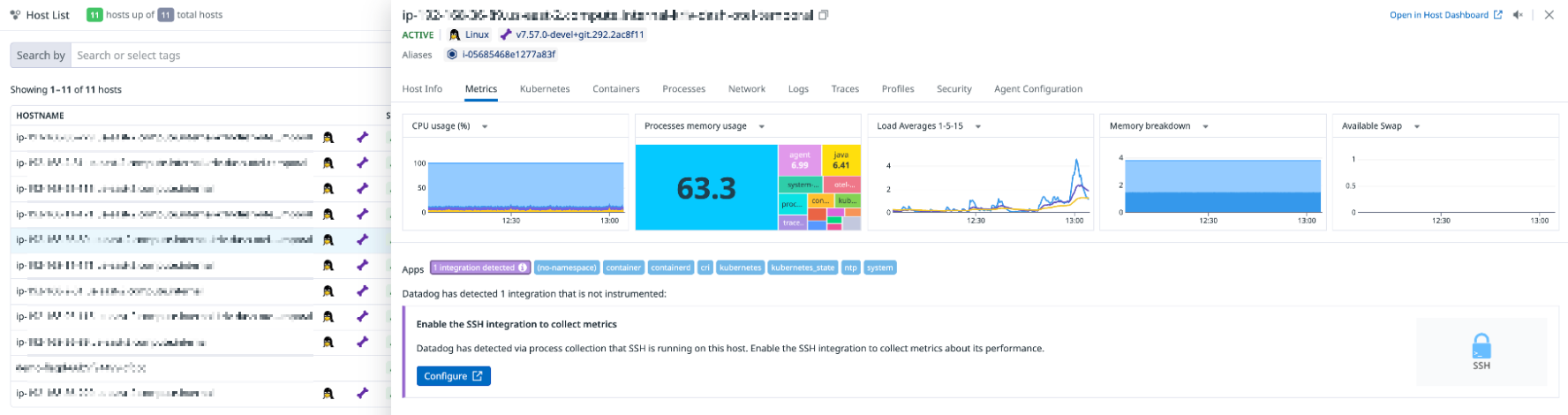

Infrastructure node health

View runtime and infrastructure metrics to visualize, monitor, and measure the performance of your nodes.

Logs

View logs to monitor and troubleshoot application and system operations.

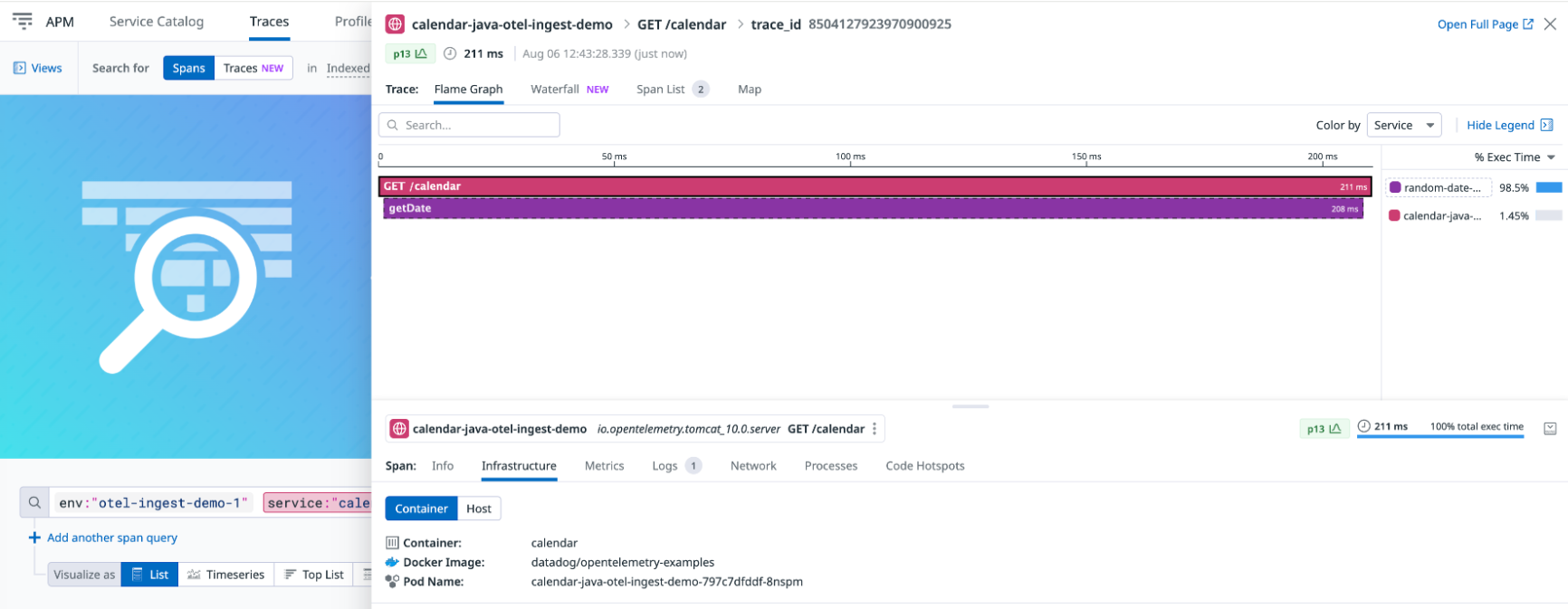

Traces

View traces and spans to observe the status and performance of requests processed by your application, with infrastructure metrics correlated in the same trace.

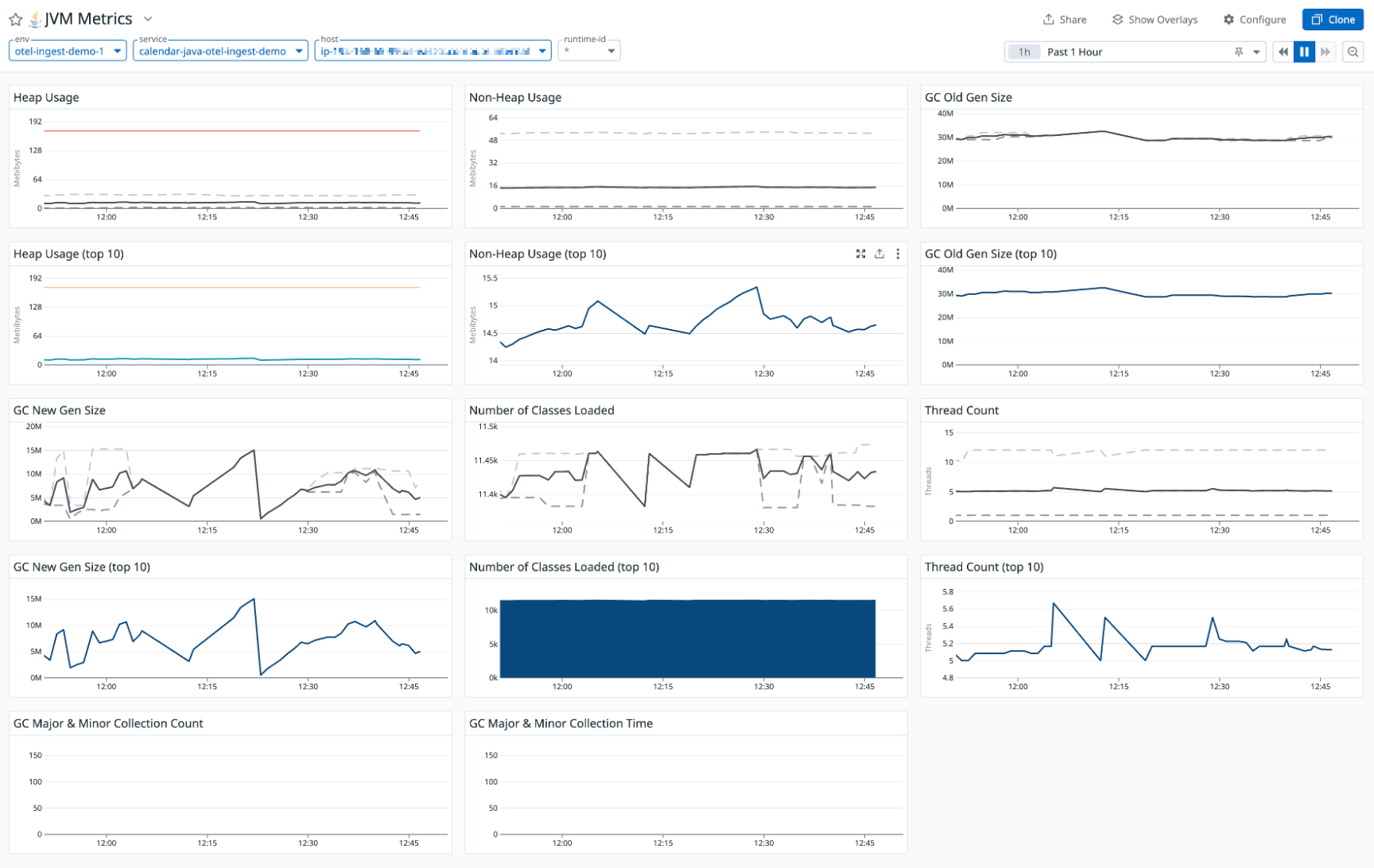

Runtime metrics

Monitor your runtime (JVM) metrics for your applications.

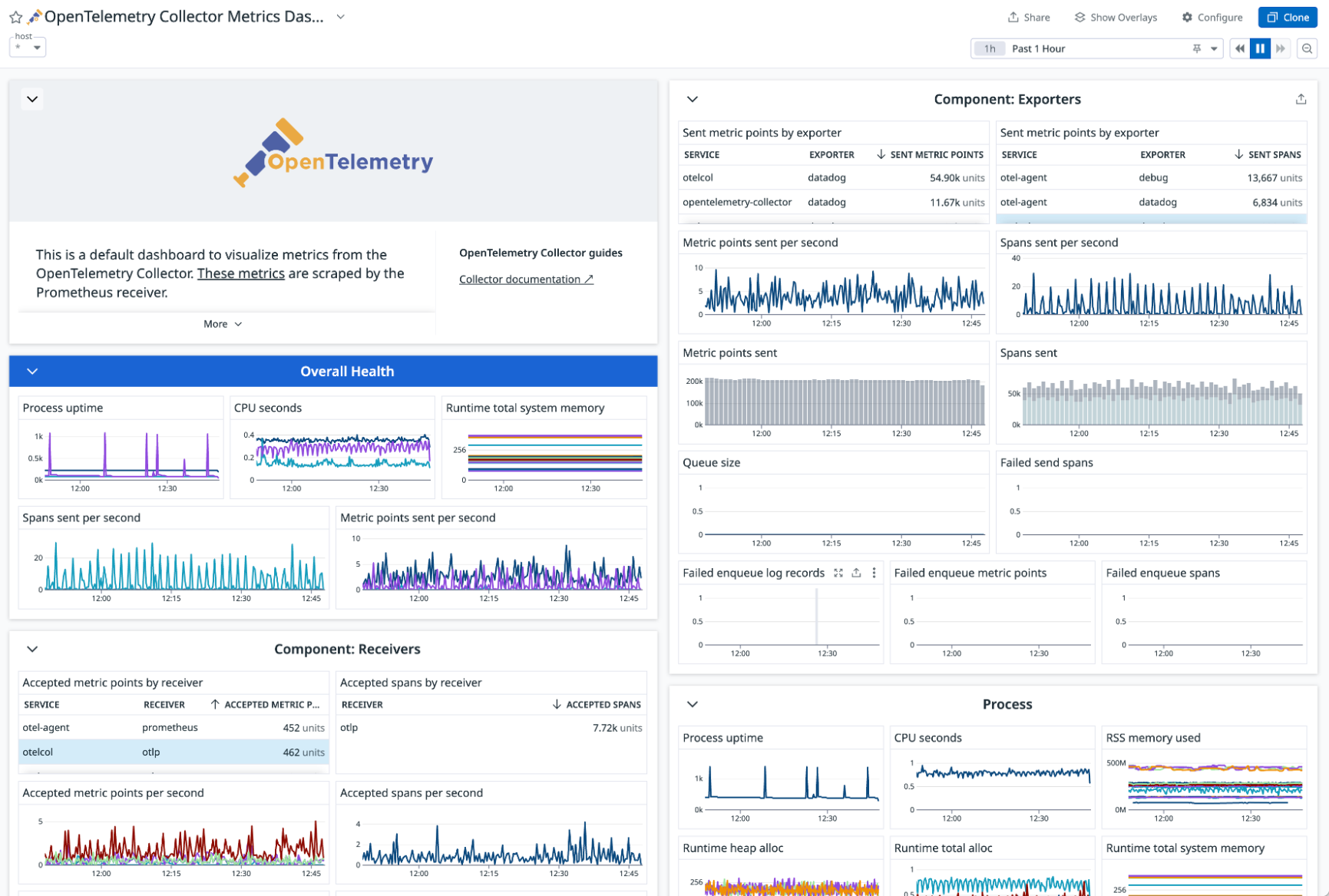

Collector health metrics

View metrics from the embedded Collector to monitor the Collector health.

Included components

By default, the Datadog Agent with embedded Collector ships with the following Collector components. You can also see the list in YAML format.

Receivers

Processors

Connectors
