Understand Datadog retention policy to efficiently retain trace data

Ingesting and retaining the traces you care about

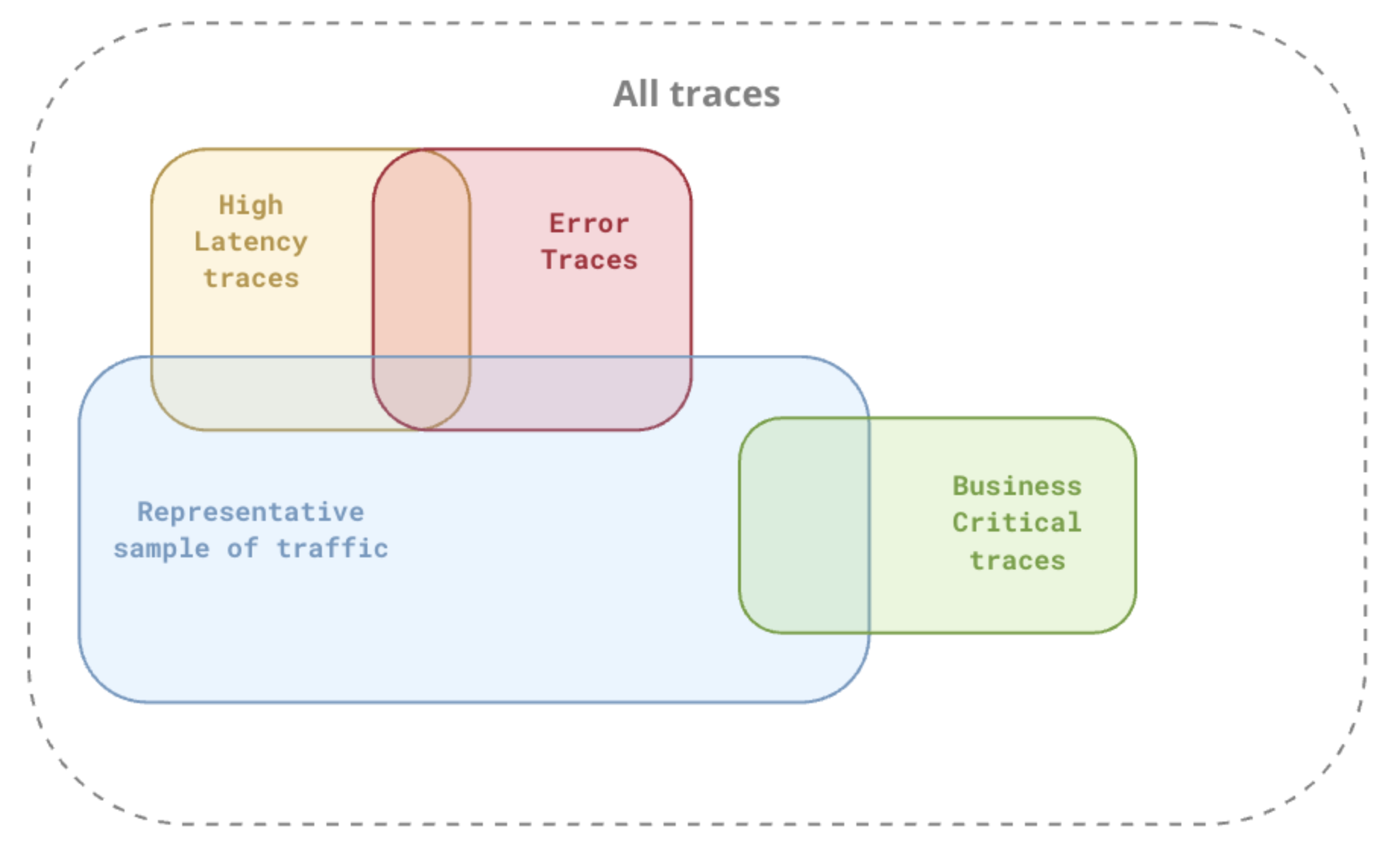

Most traces generated by your applications are repetitive, and it’s not necessary to ingest and retain them all. For successful requests, retaining a representative sample of your applications’ traffic is enough, since you can’t possibly scan through dozens of individual traced requests every second.

What’s most important are the traces that contain symptoms of potential issues in your infrastructure, that is, traces with errors or unusual latency. In addition, for specific endpoints that are critical to your business, you might want to retain 100% of the traffic to ensure that you are able to investigate and troubleshoot any customer problem in great detail.

How Datadog’s retention policy helps you retain what matters

Datadog provides two main ways of retaining data past 15 minutes:

- The Intelligent retention filter, which is always enabled.

- Custom tag-based retention filters that you can manually configure.

Diversity sampling algorithm: Intelligent retention filter

By default, the Intelligent retention filter keeps a representative selection of traces without requiring you to create dozens of custom retention filters.

For each combination of environment, service, operation, and resource, it keeps at least one span (and the associated distributed trace) per 15-minute interval for each of the p75, p90, and p95 latency percentiles, as well as a representative selection of errors for each distinct response status code.

To learn more, read the Intelligent retention filter documentation.
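To make the selection rule above more concrete, here is a minimal Python sketch of diversity sampling. It is not Datadog’s implementation: the `Span` record, the `diversity_sample` function, the nearest-rank percentile method, and the choice to compute latency percentiles over non-error spans only are all assumptions made for illustration. The sketch buckets spans into 15-minute windows, groups them by environment, service, operation, and resource, keeps the span at each of the p75, p90, and p95 latency ranks, and keeps the first errored span for each distinct status code.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set, Tuple

WINDOW_S = 15 * 60  # 15-minute windows
PERCENTILES = (75, 90, 95)


@dataclass(frozen=True)
class Span:
    """Hypothetical span record used only for this sketch."""
    trace_id: str
    start_s: float  # start time in seconds since the epoch
    env: str
    service: str
    operation: str
    resource: str
    duration_ms: float
    error: bool = False
    status_code: Optional[int] = None


def diversity_sample(spans: Iterable[Span]) -> Set[str]:
    """Return the trace IDs this hypothetical sketch would retain."""
    groups: Dict[Tuple, List[Span]] = defaultdict(list)
    for s in spans:
        window = int(s.start_s // WINDOW_S)
        groups[(window, s.env, s.service, s.operation, s.resource)].append(s)

    kept: Set[str] = set()
    for group in groups.values():
        # Latency coverage: the successful span at each nearest-rank percentile.
        ok = sorted((s for s in group if not s.error), key=lambda s: s.duration_ms)
        if ok:
            for q in PERCENTILES:
                rank = max(1, (q * len(ok) + 99) // 100)  # integer ceil(q * n / 100)
                kept.add(ok[rank - 1].trace_id)
        # Error coverage: the first errored span seen for each distinct status code.
        seen_codes: Set[Optional[int]] = set()
        for s in group:
            if s.error and s.status_code not in seen_codes:
                seen_codes.add(s.status_code)
                kept.add(s.trace_id)
    return kept
```

Because the sketch returns trace IDs rather than individual spans, keeping one span implies keeping its whole distributed trace, which mirrors the behavior described above.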

Tag-based retention filters

Tag-based retention filters provide the flexibility to keep traces that are the most critical to your business. When indexing spans with retention filters, the associated trace is also stored, which ensures that you keep visibility over the entire request and its distributed context.
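The following Python sketch illustrates the trace-level behavior described above. The `RetentionFilter` class, its exact-match tag query, and the hash-based rate decision are hypothetical simplifications, not the Datadog API or its query syntax. When any span matches a filter and is selected at that filter’s rate, every span of the same trace is stored.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

SpanRecord = Tuple[str, Dict[str, str]]  # (trace_id, span tags), hypothetical shape


@dataclass
class RetentionFilter:
    """Hypothetical tag-based filter: every listed tag must match exactly."""
    name: str
    tags: Dict[str, str]
    rate: float  # fraction of matching traces to keep; 1.0 keeps 100%

    def matches(self, span_tags: Dict[str, str]) -> bool:
        return all(span_tags.get(k) == v for k, v in self.tags.items())


def keep_at_rate(trace_id: str, rate: float) -> bool:
    """Deterministic keep/drop decision derived from the trace ID."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53  # uniform in [0, 1)
    return bucket < rate


def retained_trace_ids(spans: List[SpanRecord], filters: List[RetentionFilter]) -> Set[str]:
    kept: Set[str] = set()
    for trace_id, tags in spans:
        if any(f.matches(tags) and keep_at_rate(trace_id, f.rate) for f in filters):
            kept.add(trace_id)
    return kept


def index_spans(spans: List[SpanRecord], filters: List[RetentionFilter]) -> List[SpanRecord]:
    """Store every span of each retained trace, not just the matching span."""
    kept = retained_trace_ids(spans, filters)
    return [(tid, tags) for tid, tags in spans if tid in kept]


spans = [
    ("a", {"service": "checkout", "env": "prod"}),
    ("a", {"service": "payments", "env": "prod"}),
    ("b", {"service": "search", "env": "prod"}),
]
critical = RetentionFilter("checkout-prod", {"service": "checkout", "env": "prod"}, 1.0)
# Both spans of trace "a" are stored, including the payments span that did not match.
print(index_spans(spans, [critical]))
```

Hashing the trace ID, rather than drawing a random number per span, is one way to make every span of a trace reach the same keep-or-drop decision for a given rate.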

Searching and analyzing indexed span data effectively

The set of data captured by diversity sampling is not uniformly sampled (that is, it is not proportionally representative of the full traffic); it is biased towards errors and high-latency traces. If you want to build analytics only on top of a uniformly sampled dataset, exclude the spans that are sampled for diversity reasons by adding the `-retained_by:diversity_sampling` query parameter in the Trace Explorer.

For example, to measure the number of checkout operations grouped by merchant tier in your application, exclude the diversity sampling dataset. This ensures that you perform the analysis on top of a representative set of data, so the proportions of basic, enterprise, and premium checkouts are realistic.
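As a rough illustration of why the exclusion matters, this Python sketch computes the share of checkout spans per merchant tier after dropping spans whose `retained_by` value is `diversity_sampling`, the same selection that `-retained_by:diversity_sampling` makes in the Trace Explorer. The span dictionaries, the `merchant_tier` key, the `tier_proportions` function, and the `retention_filter` value are assumptions for illustration; in practice you express this grouping in the Trace Explorer query rather than in code.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def tier_proportions(spans: Iterable[Mapping[str, str]]) -> Dict[str, float]:
    """Share of checkout spans per merchant tier, excluding diversity-sampled spans."""
    counts = Counter(
        s["merchant_tier"]
        for s in spans
        if s.get("retained_by") != "diversity_sampling"  # assumed attribute name and value
    )
    total = sum(counts.values())
    return {tier: n / total for tier, n in counts.items()} if total else {}


checkouts = [
    {"merchant_tier": "basic", "retained_by": "retention_filter"},
    {"merchant_tier": "basic", "retained_by": "retention_filter"},
    {"merchant_tier": "enterprise", "retained_by": "retention_filter"},
    {"merchant_tier": "premium", "retained_by": "retention_filter"},
    # Slow or failing enterprise checkouts kept for diversity would skew the shares.
    {"merchant_tier": "enterprise", "retained_by": "diversity_sampling"},
    {"merchant_tier": "enterprise", "retained_by": "diversity_sampling"},
    {"merchant_tier": "enterprise", "retained_by": "diversity_sampling"},
    {"merchant_tier": "enterprise", "retained_by": "diversity_sampling"},
]
print(tier_proportions(checkouts))  # {'basic': 0.5, 'enterprise': 0.25, 'premium': 0.25}
```

With the four diversity-sampled spans included, the enterprise share in this made-up data would be 5/8 (62.5%) instead of 25%.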

On the other hand, if you want to measure the number of unique merchants by merchant tier, include the diversity sampling dataset, which might capture additional merchant IDs not caught by custom retention filters.
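A distinct count, by contrast, benefits from every retained span. This Python sketch counts unique merchant IDs per tier without excluding diversity-sampled spans; the `merchant_tier`, `merchant_id`, and `retained_by` keys and the `unique_merchants_by_tier` function are assumptions for illustration. A merchant that appears only in a diversity-sampled span still adds to its tier’s count.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Set


def unique_merchants_by_tier(spans: Iterable[Mapping[str, str]]) -> Dict[str, int]:
    """Count distinct merchant IDs per tier across all retained spans."""
    merchants: Dict[str, Set[str]] = defaultdict(set)
    for s in spans:
        # No retained_by filtering: diversity-sampled spans can add new merchant IDs.
        merchants[s["merchant_tier"]].add(s["merchant_id"])
    return {tier: len(ids) for tier, ids in merchants.items()}


spans = [
    {"merchant_tier": "basic", "merchant_id": "m1", "retained_by": "retention_filter"},
    {"merchant_tier": "basic", "merchant_id": "m2", "retained_by": "retention_filter"},
    {"merchant_tier": "basic", "merchant_id": "m1", "retained_by": "retention_filter"},
    {"merchant_tier": "basic", "merchant_id": "m3", "retained_by": "diversity_sampling"},
    {"merchant_tier": "premium", "merchant_id": "m4", "retained_by": "diversity_sampling"},
]
print(unique_merchants_by_tier(spans))  # {'basic': 3, 'premium': 1}
```

Filtering out the diversity-sampled spans first would report only two basic merchants and no premium merchants for this made-up data.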
