- Esenciales

- Empezando

- Datadog

- Sitio web de Datadog

- DevSecOps

- Serverless para Lambda AWS

- Agent

- Integraciones

- Contenedores

- Dashboards

- Monitores

- Logs

- Rastreo de APM

- Generador de perfiles

- Etiquetas (tags)

- API

- Catálogo de servicios

- Session Replay

- Continuous Testing

- Monitorización Synthetic

- Gestión de incidencias

- Monitorización de bases de datos

- Cloud Security Management

- Cloud SIEM

- Application Security Management

- Workflow Automation

- CI Visibility

- Test Visibility

- Intelligent Test Runner

- Análisis de código

- Centro de aprendizaje

- Compatibilidad

- Glosario

- Atributos estándar

- Guías

- Agent

- Uso básico del Agent

- Arquitectura

- IoT

- Plataformas compatibles

- Recopilación de logs

- Configuración

- Configuración remota

- Automatización de flotas

- Solucionar problemas

- Detección de nombres de host en contenedores

- Modo de depuración

- Flare del Agent

- Estado del check del Agent

- Problemas de NTP

- Problemas de permisos

- Problemas de integraciones

- Problemas del sitio

- Problemas de Autodiscovery

- Problemas de contenedores de Windows

- Configuración del tiempo de ejecución del Agent

- Consumo elevado de memoria o CPU

- Guías

- Seguridad de datos

- Integraciones

- OpenTelemetry

- Desarrolladores

- Autorización

- DogStatsD

- Checks personalizados

- Integraciones

- Crear una integración basada en el Agent

- Crear una integración API

- Crear un pipeline de logs

- Referencia de activos de integración

- Crear una oferta de mercado

- Crear un cuadro

- Crear un dashboard de integración

- Crear un monitor recomendado

- Crear una regla de detección Cloud SIEM

- OAuth para integraciones

- Instalar la herramienta de desarrollo de integraciones del Agente

- Checks de servicio

- Complementos de IDE

- Comunidad

- Guías

- Administrator's Guide

- API

- Aplicación móvil de Datadog

- CoScreen

- Cloudcraft

- En la aplicación

- Dashboards

- Notebooks

- Editor DDSQL

- Hojas

- Monitores y alertas

- Infraestructura

- Métricas

- Watchdog

- Bits AI

- Catálogo de servicios

- Catálogo de APIs

- Error Tracking

- Gestión de servicios

- Objetivos de nivel de servicio (SLOs)

- Gestión de incidentes

- De guardia

- Gestión de eventos

- Gestión de casos

- Workflow Automation

- App Builder

- Infraestructura

- Universal Service Monitoring

- Contenedores

- Serverless

- Monitorización de red

- Coste de la nube

- Rendimiento de las aplicaciones

- APM

- Términos y conceptos de APM

- Instrumentación de aplicación

- Recopilación de métricas de APM

- Configuración de pipelines de trazas

- Correlacionar trazas (traces) y otros datos de telemetría

- Trace Explorer

- Observabilidad del servicio

- Instrumentación dinámica

- Error Tracking

- Seguridad de los datos

- Guías

- Solucionar problemas

- Continuous Profiler

- Database Monitoring

- Gastos generales de integración del Agent

- Arquitecturas de configuración

- Configuración de Postgres

- Configuración de MySQL

- Configuración de SQL Server

- Configuración de Oracle

- Configuración de MongoDB

- Conexión de DBM y trazas

- Datos recopilados

- Explorar hosts de bases de datos

- Explorar métricas de consultas

- Explorar ejemplos de consulta

- Solucionar problemas

- Guías

- Data Streams Monitoring

- Data Jobs Monitoring

- Experiencia digital

- Real User Monitoring

- Monitorización del navegador

- Configuración

- Configuración avanzada

- Datos recopilados

- Monitorización del rendimiento de páginas

- Monitorización de signos vitales de rendimiento

- Monitorización del rendimiento de recursos

- Recopilación de errores del navegador

- Rastrear las acciones de los usuarios

- Señales de frustración

- Error Tracking

- Solucionar problemas

- Monitorización de móviles y TV

- Plataforma

- Session Replay

- Exploración de datos de RUM

- Feature Flag Tracking

- Error Tracking

- Guías

- Seguridad de los datos

- Monitorización del navegador

- Análisis de productos

- Pruebas y monitorización de Synthetics

- Continuous Testing

- Entrega de software

- CI Visibility

- CD Visibility

- Test Visibility

- Configuración

- Tests en contenedores

- Búsqueda y gestión

- Explorador

- Monitores

- Flujos de trabajo de desarrolladores

- Cobertura de código

- Instrumentar tests de navegador con RUM

- Instrumentar tests de Swift con RUM

- Detección temprana de defectos

- Reintentos automáticos de tests

- Correlacionar logs y tests

- Guías

- Solucionar problemas

- Intelligent Test Runner

- Code Analysis

- Quality Gates

- Métricas de DORA

- Seguridad

- Información general de seguridad

- Cloud SIEM

- Cloud Security Management

- Application Security Management

- Observabilidad de la IA

- Log Management

- Observability Pipelines

- Gestión de logs

- Administración

- Gestión de cuentas

- Seguridad de los datos

- Sensitive Data Scanner

- Ayuda

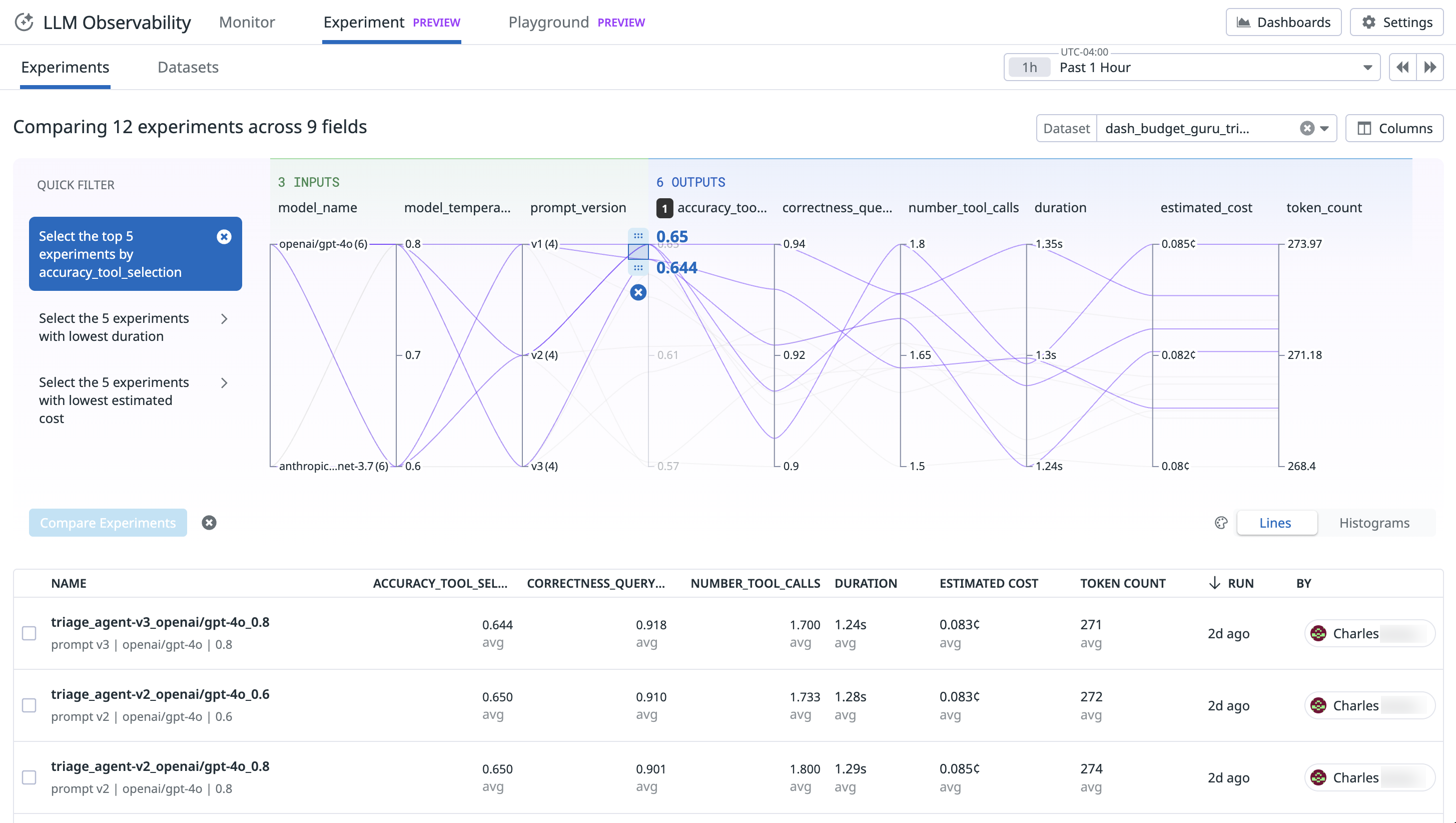

Experiments

LLM Observability Experiments supports the entire lifecycle of building LLM applications and agents. It helps you understand how changes to prompts, models, providers, or system architecture affect performance. With this feature, you can:

- Create and version datasets

- Run and manage experiments

- Compare results to evaluate impact

There are two ways to use Experiments:

- Python SDK (Recommended)

- LLM Observability API

Explore Experiments with Jupyter notebooks

You can use the Jupyter notebooks in the LLM Observability Experiments repository to learn more about Experiments.

Usage: Python SDK

Installation

Install Datadog’s LLM Observability Python SDK:

    export DD_FAST_BUILD=1
    pip install git+https://github.com/DataDog/dd-trace-py.git@llm-experiments

If you see errors regarding the Rust toolchain, ensure that Rust is installed. Instructions are provided in the error message.

Setup

Environment variables

Specify the following environment variables in your application startup command:

| Variable | Description |
|---|---|
| DD_API_KEY | Your Datadog API key |
| DD_APP_KEY | Your Datadog application key |
| DD_SITE | Your Datadog site. Defaults to datadoghq.com. |
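
Before initializing a project, it can help to confirm that the required variables are set. The following is a minimal sketch that uses only the standard library; the helper name `missing_datadog_env` is hypothetical and not part of the SDK. `DD_SITE` is treated as optional because it has a default.

```python
import os
from typing import List, Mapping, Optional

# DD_SITE is omitted on purpose: it defaults to datadoghq.com.
REQUIRED_VARS = ("DD_API_KEY", "DD_APP_KEY")


def missing_datadog_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_datadog_env()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
```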

Project initialization

Call init() to define the project where you want to write your experiments.

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

Dataset class

A dataset is a collection of inputs and expected outputs. You can construct datasets from production data, from staging data, or manually. You can also push and retrieve datasets from Datadog.

Constructor

| Parameter | Type | Description |
|---|---|---|
| name (required) | string | Name of the dataset |
| data (required) | List[Dict[str, Union[str, Dict[str, Any]]]] | List of dictionaries. The key is a string. The value can be a string or a dictionary. The dictionaries should all have the same schema and contain the following keys: input (string or dictionary of input data) and, optionally, expected_output (string or dictionary of expected output data). |
| description | string | Description of the dataset |

Returns

Instance of Dataset

Example

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    dataset = dne.Dataset(
        name="capitals-of-the-world",
        data=[
            {"input": "What is the capital of China?", "expected_output": "Beijing"},
            {
                "input": "Which city serves as the capital of South Africa?",
                "expected_output": "Pretoria",
            },
            {
                "input": "What is the capital of Switzerland?",
                "expected_output": "Bern",
            },
            {
                "input": "Name the capital city of a country that starts with 'Z'."  # Open-ended question
            },
        ],
    )
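
Because every record needs an `input` key and values that are strings or dictionaries, a small pre-flight check can catch malformed rows before a dataset is built. This is a sketch under those stated rules only; `validate_records` is a hypothetical helper, not an SDK function, and the SDK may apply its own validation.

```python
from typing import Any, Dict, List


def validate_records(records: List[Dict[str, Any]]) -> List[str]:
    """Return a list of problems found; an empty list means no problems were detected."""
    problems = []
    for index, record in enumerate(records):
        if "input" not in record:
            problems.append(f"record {index}: missing 'input'")
        # expected_output is optional, so it is only type-checked when present.
        for key in ("input", "expected_output"):
            if key in record and not isinstance(record[key], (str, dict)):
                problems.append(f"record {index}: '{key}' must be a string or dictionary")
    return problems
```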

Pull a dataset from Datadog

    Dataset.pull(name: str) -> Dataset

| Parameter | Type | Description |
|---|---|---|
| name (required) | string | Name of the dataset to retrieve from Datadog |

Returns

Instance of Dataset

Example

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    dataset = dne.Dataset.pull("capitals-of-the-world")

Create a dataset from a CSV file

    Dataset.from_csv(
        filepath: str,
        name: str,
        description: str = "",
        delimiter: str = ",",
        input_columns: List[str] = None,
        expected_output_columns: List[str] = None,
    ) -> Dataset

| Parameter | Type | Description |
|---|---|---|
| path (required) | string | Local path to the CSV file |
| name (required) | string | Name of the dataset |
| description | string | Description of the dataset |
| input_columns (required) | List[str] | List of column names to use as input data |
| expected_output_columns (required) | List[str] | List of column names to use as output data |
| metadata_columns | List[str] | List of column names to include as metadata |
| delimiter | string | Delimiter character for CSV files. Defaults to a comma (,). |

The CSV file must have a header row so that input and expected output columns can be mapped.

Returns

Instance of Dataset

Example

data.csv

    question,answer,difficulty,category
    What is 2+2?,4,easy,math
    What is the capital of France?,Paris,medium,geography

Load the file with from_csv():

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    dataset = dne.Dataset.from_csv(
        path="data.csv",
        name="my_dataset",
        input_columns=["question", "category", "difficulty"],
        expected_output_columns=["answer"]
    )
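
To illustrate how header columns can map onto dataset records, the sketch below does a similar mapping with the standard `csv` module. It is not the SDK's implementation: `csv_to_records` is a hypothetical helper, and the shape it produces (one dictionary of input columns and one of expected output columns per row) is an assumption about what a record looks like.

```python
import csv
import io
from typing import Any, Dict, List


def csv_to_records(
    text: str,
    input_columns: List[str],
    expected_output_columns: List[str],
    delimiter: str = ",",
) -> List[Dict[str, Any]]:
    """Map each CSV row to a record, using the header row to find columns."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    records = []
    for row in reader:
        records.append(
            {
                "input": {column: row[column] for column in input_columns},
                "expected_output": {column: row[column] for column in expected_output_columns},
            }
        )
    return records
```

Columns that are not listed, if any, are ignored by this sketch.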

Push a dataset to Datadog

    Dataset.push(overwrite: bool = None, new_version: bool = None)

| Parameter | Type | Description |
|---|---|---|
| overwrite | boolean | If True, overwrites the dataset rows of an existing version. |
| new_version | boolean | If True, creates a new version of the dataset in Datadog. Defaults to True. This flag is useful for creating a new dataset with entirely new data. |

Example

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    dataset = dne.Dataset(...)
    dataset.push()

Convert a dataset to a pandas DataFrame

    Dataset.as_dataframe(multiindex: bool = True) -> pd.DataFrame

| Parameter | Type | Description |
|---|---|---|
| multiindex | boolean | If True, expands nested dictionaries into MultiIndex columns. Defaults to True. |

Returns

Instance of pandas DataFrame

Experiment class

An experiment is a collection of traces that tests the behavior of an LLM feature or LLM application against a dataset. The input data comes from the dataset, and the outputs are the final generations of the feature or application that is being tested. The Experiment class manages the execution and evaluation of LLM tasks on datasets.

Constructor

    Experiment(
        name: str,
        task: Callable,
        dataset: Dataset,
        evaluators: List[Callable],
        tags: List[str] = [],
        description: str = "",
        metadata: Dict[str, Any] = {},
        config: Optional[Dict[str, Any]] = None
    )

| Parameter | Type | Description |
|---|---|---|
| name (required) | string | Name of the experiment |
| task (required) | function | Function decorated with @task that processes each dataset record |
| dataset (required) | Dataset | Dataset to run the experiment against |
| evaluators | function[] | List of functions decorated with @evaluator that run against all outputs in the results |
| tags | string[] | Optional list of tags for organizing experiments |
| description | string | Description of the experiment |
| metadata | Dict[str, Any] | Additional metadata about the experiment |
| config | Dict[str, Any] | A key-value pair collection used inside a task to determine its behavior |

Returns

Instance of Experiment

Execute task and evaluations, push results

    Experiment.run(jobs: int = 10, raise_errors: bool = False, sample_size: int = None) -> ExperimentResults

| Parameter | Type | Description |
|---|---|---|
| jobs | int | Number of worker threads used to run the task concurrently. Defaults to 10. |
| raise_errors | boolean | If True, stops execution as soon as the first exception from the task is raised. If False, every exception is handled, and the experiment keeps running until it finishes. |
| sample_size | int | Number of rows used for the experiment. You can use sample_size with raise_errors to test before you run a long experiment. |

Returns

Instance of ExperimentResults

Example

    # To test, run top 10 rows and see if it throws errors
    results = experiment.run(raise_errors=True, sample_size=10)

    # If it's acceptable after that, run the whole thing
    results = experiment.run()

Run evaluators on outputs, push results

    Experiment.run_evaluations(evaluators: Optional[List[Callable]] = None, raise_errors: bool = False) -> ExperimentResults

| Parameter | Type | Description |
|---|---|---|
| evaluators | function[] | List of functions decorated with @evaluator that run against all outputs in the results. |
| raise_errors | boolean | If True, stops execution as soon as the first exception is raised. If False, every exception is handled, and the evaluations keep running until they finish. |

Returns

Instance of ExperimentResults

ExperimentResults class

Contains and manages the results of an experiment run.

Convert experiment results to a pandas DataFrame

    ExperimentResults.as_dataframe(multiindex: bool = True) -> pd.DataFrame

| Parameter | Type | Description |
|---|---|---|
| multiindex | boolean | If True, expands nested dictionaries into MultiIndex columns. Defaults to True. |

Returns

Instance of pandas DataFrame

Decorators

Decorators are required to define the task functions and evaluator functions that an experiment uses.

@task: Mark a function as a task

| Parameter | Type | Description |
|---|---|---|
| input (required) | Dict[str, Any] | Dataset input field used for your business logic |
| config | Dict[str, Any] | Modifies the behavior of the task (prompts, models, etc). |

    from typing import Any, Dict, Optional

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    @dne.task
    def process(input: Dict[str, Any], config: Optional[Dict[str, Any]] = None) -> Any:
        # Your business logic
        ...

@evaluator: Mark a function as an evaluator

| Parameter | Type | Description |
|---|---|---|
| input (required) | Any | Dataset input field used for your business logic |
| output (required) | Any | Task output field used for your business logic |
| expected_output (required) | Any | Dataset expected_output field used for your business logic |

    from typing import Any

    import ddtrace.llmobs.experimentation as dne

    dne.init(project_name="example")

    @dne.evaluator
    def evaluate(input: Any, output: Any, expected_output: Any) -> Any:
        # Your evaluation logic
        ...
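
As an illustration of what evaluation logic might look like, here is a plain function with the same three parameters that scores an exact match. It is a sketch, not an SDK-provided evaluator: the name `exact_match` and the choice to ignore case and surrounding whitespace are assumptions. To use it in an experiment, it would be decorated with @dne.evaluator as described above.

```python
from typing import Any


def exact_match(input: Any, output: Any, expected_output: Any) -> bool:
    """Return True when output equals expected_output, ignoring case and outer whitespace."""
    if expected_output is None:
        # Open-ended records have no expected output, so there is nothing to match.
        return False
    return str(output).strip().lower() == str(expected_output).strip().lower()
```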

Usage: LLM Observability Experiments API

Postman quickstart

Datadog highly recommends importing the Experiments Postman collection into Postman. Postman’s View documentation feature can help you better understand this API.

Request format

| Field | Type | Description |
|---|---|---|
| data | Object: Data | The request body is nested within a top level data field. |

Example: Creating a project

    {
      "data": {
        "type": "projects",
        "attributes": {
          "name": "Project example",
          "description": "Description example"
        }
      }
    }
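
The same envelope can be built programmatically. The sketch below wraps a resource type and its attributes in the top-level `data` field and serializes the result; `build_request_body` is a hypothetical helper name, and sending the request (URL, headers, HTTP client) is left out.

```python
import json
from typing import Any, Dict


def build_request_body(resource_type: str, attributes: Dict[str, Any]) -> str:
    """Serialize a request body nested under the top-level data field."""
    return json.dumps({"data": {"type": resource_type, "attributes": attributes}})


body = build_request_body(
    "projects",
    {"name": "Project example", "description": "Description example"},
)
```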

Response format

| Field | Type | Description |
|---|---|---|
| data | Object: Data | The response body is nested within a top level data field. |
| meta | Object: Page | Pagination attributes. |

Example: Retrieving projects

    {
      "data": [
        {
          "id": "4ac5b6b2-dcdb-40a9-ab29-f98463f73b4z",
          "type": "projects",
          "attributes": {
            "created_at": "2025-02-19T18:53:03.157337Z",
            "description": "Description example",
            "name": "Project example",
            "updated_at": "2025-02-19T18:53:03.157337Z"
          }
        }
      ],
      "meta": {
        "after": ""
      }
    }

Object: Data

| Field | Type | Description |
|---|---|---|
| id | string | The ID of an experimentation entity. Note: Set your ID field reference at this level. |
| type | string | Identifies the kind of resource an object represents. For example: projects, experiments, datasets, etc. |
| attributes | json | Contains all the resource’s data except for the ID. |

Object: Page

| Field | Type | Description |
|---|---|---|
| after | string | The cursor to use to get the next results, if any. Provide the page[cursor] query parameter in your request to get the next results. |
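
Cursor pagination with `after` can be sketched as a loop that keeps requesting pages until no cursor is returned. In this sketch, `fetch_page` is a hypothetical callable that performs the HTTP request (passing the cursor as page[cursor]) and returns the decoded response; treating an empty or missing `after` as the end of the results is an assumption based on the example response above.

```python
from typing import Any, Callable, Dict, Iterator, Optional


def iter_all(fetch_page: Callable[[Optional[str]], Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Yield every item in data across pages, following meta.after cursors."""
    cursor = None
    while True:
        page = fetch_page(cursor)
        yield from page.get("data", [])
        cursor = (page.get("meta") or {}).get("after")
        if not cursor:
            # No cursor means there are no further pages (assumed).
            break
```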

Projects API

Request type: projects

GET /api/unstable/llm-obs/v1/projects

List all projects, sorted by creation date. The most recently created projects are first.

Query parameters

| Parameter | Type | Description |
|---|---|---|
| filter[name] | string | The name of a project to search for. |
| filter[id] | string | The ID of a project to search for. |
| page[cursor] | string | List results with a cursor provided in the previous query. |
| page[limit] | int | Limits the number of results. |

Response

Object: Project

| Field | Type | Description |
|---|---|---|
| id | string | Unique project ID. Set at the top level id field within the Data object. |
| ml_app | string | ML app name. |
| name | string | Unique project name. |
| description | string | Project description. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/projects

Create a project. If there is an existing project with the same name, the API returns the existing project unmodified.

Request

| Field | Type | Description |
|---|---|---|
| name (required) | string | Unique project name. |
| ml_app | string | ML app name. |
| description | string | Project description. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique ID for the project. Set at the top level id field within the Data object. |
| ml_app | string | ML app name. |
| name | string | Unique project name. |
| description | string | Project description. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

PATCH /api/unstable/llm-obs/v1/projects/{project_id}

Partially update a project object. Specify the fields to update in the payload.

Request

| Field | Type | Description |
|---|---|---|
| name | string | Unique project name. |
| ml_app | string | ML app name. |
| description | string | Project description. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique ID for the project. Set at the top level id field within the Data object. |
| ml_app | string | ML app name. |
| name | string | Unique project name. |
| description | string | Project description. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/projects/delete

Batch delete operation.

Request

| Field | Type | Description |
|---|---|---|
| project_ids (required) | []string | List of project IDs to delete. |

Response

200 - OK

Datasets API

Request type: datasets

GET /api/unstable/llm-obs/v1/datasets

List all datasets, sorted by creation date. The most recently created datasets are first.

Query parameters

| Parameter | Type | Description |
|---|---|---|
| filter[name] | string | The name of a dataset to search for. |
| filter[id] | string | The ID of a dataset to search for. |
| page[cursor] | string | List results with a cursor provided in the previous query. |
| page[limit] | int | Limits the number of results. |

Response

Object: Dataset

| Field | Type | Description |
|---|---|---|
| id | string | Unique dataset ID. Set at the top level id field within the Data object. |
| name | string | Unique dataset name. |
| description | string | Dataset description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/datasets

Create a dataset. If there is an existing dataset with the same name, the API returns the existing dataset unmodified.

Request

| Field | Type | Description |
|---|---|---|
| name (required) | string | Unique dataset name. |
| description | string | Dataset description. |
| metadata | json | Arbitrary user-defined metadata. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique ID for the dataset. Set at the top level id field within the Data object. |
| name | string | Unique dataset name. |
| description | string | Dataset description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

PATCH /api/unstable/llm-obs/v1/datasets/{dataset_id}

Partially update a dataset object. Specify the fields to update in the payload.

Request

| Field | Type | Description |
|---|---|---|
| name | string | Unique dataset name. |
| description | string | Dataset description. |
| metadata | json | Arbitrary user-defined metadata. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique ID for the dataset. Set at the top level id field within the Data object. |
| name | string | Unique dataset name. |
| description | string | Dataset description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/datasets/delete

Batch delete operation.

Request

| Field | Type | Description |
|---|---|---|
| dataset_ids (required) | []string | List of dataset IDs to delete. |

Response

200 - OK

GET /api/unstable/llm-obs/v1/datasets/{dataset_id}/records

List all dataset records, sorted by creation date. The most recently created records are first.

Query parameters

| Parameter | Type | Description |
|---|---|---|
| filter[version] | string | List results for a given dataset version. |
| page[cursor] | string | List results with a cursor provided in the previous query. |
| page[limit] | int | Limits the number of results. |

Response

Object: Record

| Field | Type | Description |
|---|---|---|
| id | string | Unique record ID. |
| dataset_id | string | Unique dataset ID. |
| input | any valid JSON type (string, int, object, etc.) | Data that serves as the starting point for an experiment. |
| expected_output | any valid JSON type (string, int, object, etc.) | Expected output. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/datasets/{dataset_id}/records

Append records to a given dataset.

Request

| Field | Type | Description |
|---|---|---|
| records (required) | []RecordReq | List of records to create. |

Object: RecordReq

| Field | Type | Description |
|---|---|---|
| input (required) | any valid JSON type (string, int, object, etc.) | Data that serves as the starting point for an experiment. |
| expected_output | any valid JSON type (string, int, object, etc.) | Expected output. |
| metadata | json | Arbitrary user-defined metadata. |

Response

| Field | Type | Description |
|---|---|---|
| records | []Record | List of created records. |

PATCH /api/unstable/llm-obs/v1/datasets/{dataset_id}/records/{record_id}

Partially update a dataset record object. Specify the fields to update in the payload.

Request

| Field | Type | Description |
|---|---|---|
| input | any valid JSON type (string, int, object, etc.) | Data that serves as the starting point for an experiment. |
| expected_output | any valid JSON type (string, int, object, etc.) | Expected output. |
| metadata | json | Arbitrary user-defined metadata. |

Response

| Field | Type | Description |
|---|---|---|
| id | string | Unique record ID. |
| dataset_id | string | Unique dataset ID. |
| input | any valid JSON type (string, int, object, etc.) | Data that serves as the starting point for an experiment. |
| expected_output | any valid JSON type (string, int, object, etc.) | Expected output. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/datasets/{dataset_id}/records/delete

Batch delete operation.

Request

| Field | Type | Description |
|---|---|---|
| record_ids (required) | []string | List of dataset record IDs to delete. |

Response

200 - OK

Experiments API

Request type: experiments

GET /api/unstable/llm-obs/v1/experiments

List all experiments, sorted by creation date. The most recently created experiments are first.

Query parameters

| Parameter | Type | Description |
|---|---|---|
| filter[project_id] (required if dataset not provided) | string | The ID of a project to retrieve experiments for. |
| filter[dataset_id] | string | The ID of a dataset to retrieve experiments for. |
| filter[id] | string | The ID(s) of an experiment to search for. To query for multiple experiments, use ?filter[id]=<>&filter[id]=<>. |
| filter[name] | string | The name of an experiment to search for. |
| page[cursor] | string | List results with a cursor provided in the previous query. |
| page[limit] | int | Limits the number of results. |
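
Repeating `filter[id]` for several experiments is easiest to get right by building the query string from a list of pairs. The sketch below uses `urllib.parse.urlencode` from the standard library, which percent-encodes the square brackets; `experiments_query` is a hypothetical helper, and the host, authentication headers, and HTTP client are left out.

```python
from typing import List, Optional
from urllib.parse import urlencode


def experiments_query(
    project_id: str,
    experiment_ids: Optional[List[str]] = None,
    limit: Optional[int] = None,
) -> str:
    """Build the path and query string for listing experiments."""
    params = [("filter[project_id]", project_id)]
    # One filter[id] pair per experiment produces the repeated-parameter form.
    for experiment_id in experiment_ids or []:
        params.append(("filter[id]", experiment_id))
    if limit is not None:
        params.append(("page[limit]", str(limit)))
    return "/api/unstable/llm-obs/v1/experiments?" + urlencode(params)
```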

Response

| Field | Type | Description |
|---|---|---|
| within Data | []Experiment | List of experiments. |

Object: Experiment

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique experiment ID. Set at the top level id field within the Data object. |
| project_id | string | Unique project ID. |
| dataset_id | string | Unique dataset ID. |
| name | string | Unique experiment name. |
| description | string | Experiment description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/experiments

Create an experiment. If there is an existing experiment with the same name, the API returns the existing experiment unmodified.

Request

| Field | Type | Description |
|---|---|---|
| project_id (required) | string | Unique project ID. |
| dataset_id (required) | string | Unique dataset ID. |
| dataset_version | int | Dataset version. |
| name (required) | string | Unique experiment name. |
| description | string | Experiment description. |
| metadata | json | Arbitrary user-defined metadata. |
| ensure_unique | bool | If true, Datadog generates a new experiment with a unique name in the case of a conflict. Datadog recommends you set this field to true. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique experiment ID. Set at the top level id field within the Data object. |
| project_id | string | Unique project ID. |
| dataset_id | string | Unique dataset ID. |
| name | string | Unique experiment name. |
| description | string | Experiment description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

PATCH /api/unstable/llm-obs/v1/experiments/{experiment_id}

Partially update an experiment object. Specify the fields to update in the payload.

Request

| Field | Type | Description |
|---|---|---|
| dataset_id | string | Unique dataset ID. |
| name | string | Unique experiment name. |
| description | string | Experiment description. |
| metadata | json | Arbitrary user-defined metadata. |

Response

| Field | Type | Description |
|---|---|---|
| id | UUID | Unique experiment ID. Set at the top level id field within the Data object. |
| project_id | string | Unique project ID. |
| dataset_id | string | Unique dataset ID. |
| name | string | Unique experiment name. |
| description | string | Experiment description. |
| metadata | json | Arbitrary user-defined metadata. |
| created_at | timestamp | Timestamp representing when the resource was created. |
| updated_at | timestamp | Timestamp representing when the resource was last updated. |

POST /api/unstable/llm-obs/v1/experiments/delete

Batch delete operation.

Request

| Field | Type | Description |
|---|---|---|
| experiment_ids (required) | []string | List of experiment IDs to delete. |

Response

200 - OK

POST /api/unstable/llm-obs/v1/experiments/{experiment_id}/events

Ingest experiment spans or their respective evaluation metrics.

Request

| Field | Type | Description |
|---|---|---|
| tags | []string | Key-value pair of strings. |
| spans (required) | []Span | Spans that represent an evaluation. |
| metrics | []EvalMetric | Generated evaluation metrics. |

Response

202 - Accepted

Object: Span

| Field | Type | Description |
|---|---|---|
| span_id (required) | string | Unique span ID. |
| trace_id | string | Trace ID. Only needed if tracing. |
| start_ns (required) | uint64 | The span’s start time in nanoseconds. |
| duration (required) | uint64 | The span’s duration in nanoseconds. |
| dataset_record_id | string | The dataset record referenced. |
| meta (required) | Meta | The core content of the span. |

Object: Meta

| Field | Type | Description |
|---|---|---|
| error | Error | Captures errors. |
| input (required) | any valid JSON type (string, int, object, etc.) | Input value of an operation. |
| output (required) | any valid JSON type (string, int, object, etc.) | Output value of an operation. |
| expected_output | any valid JSON type (string, int, object, etc.) | Expected output value. |
| metadata | json | Arbitrary user-defined metadata. |

Object: EvalMetric

| Field | Type | Description |
|---|---|---|
| span_id (required) | string | Unique span ID to join on. |
| trace_id | string | Trace ID. Only needed if tracing. |
| error | Error | Captures errors. |
| metric_type (required) | enum | Defines the metric type. Accepted values: categorical, score. |
| timestamp_ms (required) | uint64 | Timestamp, in milliseconds, at which the evaluation occurred. |
| label (required) | string | Label for the metric. |
| categorical_value (required, if type is categorical) | string | Category value of the metric. |
| score_value (required, if type is score) | float64 | Score value of the metric. |

Object: Error

| Field | Type | Description |
|---|---|---|
| Message | string | Error message. |
| Stack | string | Error stack. |
| Type | string | Error type. For example, http. |
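
The Span and EvalMetric objects can be assembled as plain dictionaries before they are sent to the events endpoint. The sketch below covers only the fields marked as required in the tables above, and it picks `metric_type` from the Python type of the value. The helper names are hypothetical, generating `span_id` from a UUID is an assumption (the expected ID format is not stated), and wrapping the result in the `data` envelope is not shown.

```python
import uuid
from typing import Any, Dict, Union


def make_span(input_value: Any, output_value: Any, start_ns: int, duration_ns: int) -> Dict[str, Any]:
    """Build a Span dictionary with its required fields."""
    return {
        "span_id": uuid.uuid4().hex,  # assumed format; any unique string may be acceptable
        "start_ns": start_ns,
        "duration": duration_ns,
        "meta": {"input": input_value, "output": output_value},
    }


def make_metric(span_id: str, label: str, value: Union[str, int, float], timestamp_ms: int) -> Dict[str, Any]:
    """Build an EvalMetric, setting the value field that matches metric_type."""
    metric: Dict[str, Any] = {"span_id": span_id, "label": label, "timestamp_ms": timestamp_ms}
    if isinstance(value, str):
        metric["metric_type"] = "categorical"
        metric["categorical_value"] = value
    elif isinstance(value, (int, float)) and not isinstance(value, bool):
        metric["metric_type"] = "score"
        metric["score_value"] = float(value)
    else:
        raise TypeError("value must be a string (categorical) or a number (score)")
    return metric
```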