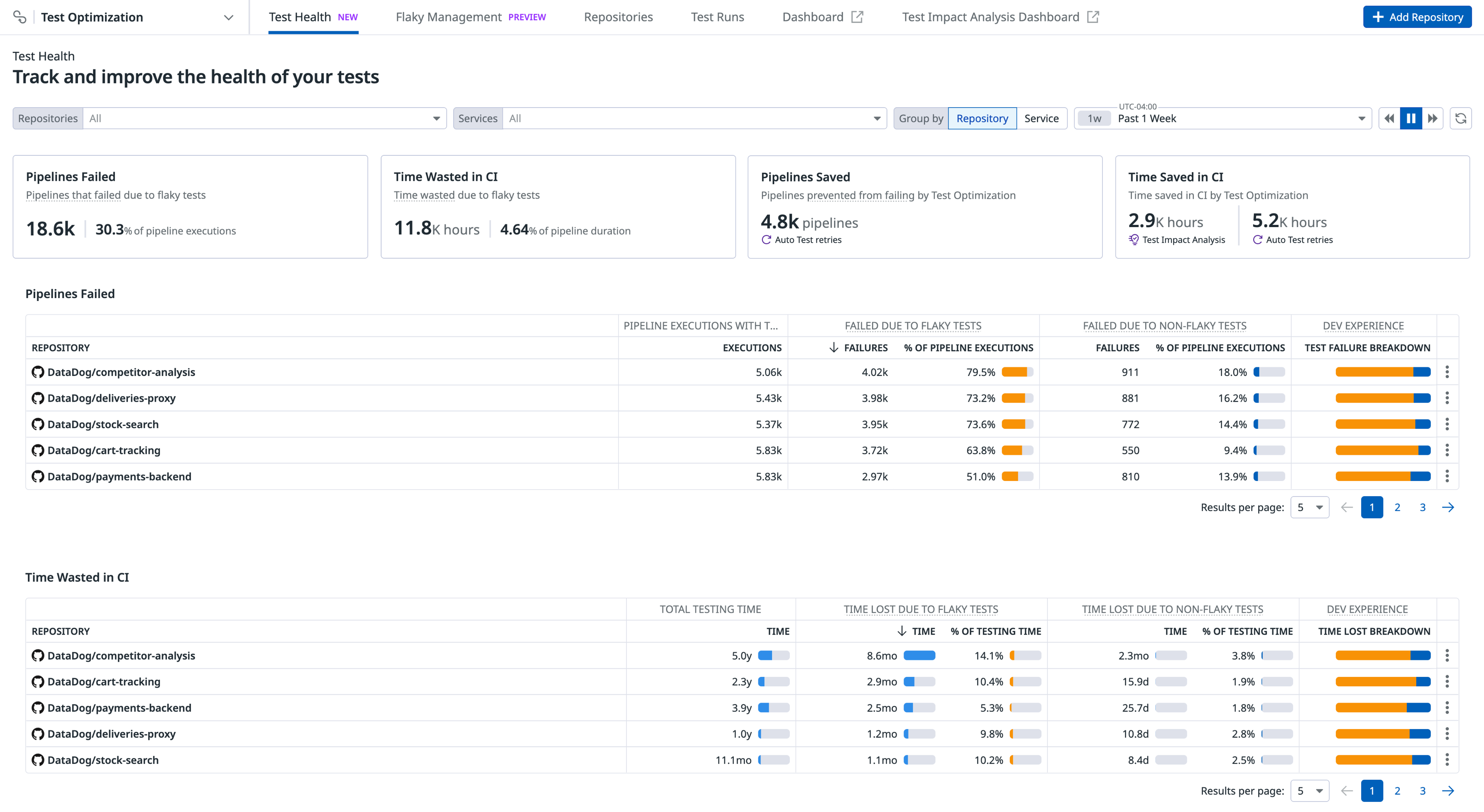

Test Health

Overview

The Test Health dashboard provides analytics to help teams manage and optimize their testing in CI. It includes sections that show the current impact of test flakiness and how Test Optimization mitigates it.

Summary metrics

For the selected time frame and filters, the dashboard highlights the following key metrics:

- Pipelines Failed: Total number of pipelines that failed due to flaky tests

- Time Wasted in CI: Total CI time lost to test sessions that failed due to flaky tests

- Pipelines Saved: Number of pipelines that Auto Test Retries prevented from failing

- Time Saved in CI: CI time saved by Test Impact Analysis and Auto Test Retries

Pipelines Failed

This table provides details on pipeline executions, failures, and their impact on developer experience.

| Metric | Description |
|---|---|
| Pipeline Executions with Tests | Number of pipeline executions with one or more test sessions. |
| Failures Due to Flaky Tests | Number of pipeline executions that failed solely due to flaky tests. Every failing test has one or more of the following tags: `@test.is_known_flaky` or `@test.is_new_flaky`. |
| Failures Due to Non-Flaky Tests | Number of pipeline executions that failed due to tests without any flakiness. None of the failing tests has any of the following tags: `@test.is_known_flaky`, `@test.is_new_flaky`, or `@test.is_flaky`. |
| Dev Experience - Test Failure Breakdown | Ratio of flaky to non-flaky test failures: when pipelines fail due to tests, how often is a flaky test the cause? A higher ratio of flaky test failures erodes trust in test results. Developers may stop paying attention to failing tests, assume they’re flakes, and manually retry. |
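The following Python sketch illustrates how the row definitions above could be applied to classify failed pipeline executions. It is not Datadog's implementation: the `CaseResult` and `PipelineExecution` classes and the `classify` and `failure_breakdown` functions are hypothetical, each pipeline's test sessions are flattened into one list of tests, and the breakdown is assumed to be expressed as the flaky share of all classified failures. It applies the tag rules exactly as the table states them, so a failure whose failing tests carry only `@test.is_flaky` lands in neither row.

```python
from __future__ import annotations
from dataclasses import dataclass, field

# Hypothetical data model for illustration; not a Datadog API.
@dataclass
class CaseResult:
    status: str                                   # "pass" or "fail"
    tags: set[str] = field(default_factory=set)   # e.g. {"@test.is_known_flaky"}

@dataclass
class PipelineExecution:
    failed: bool
    tests: list[CaseResult]                       # tests from all of the pipeline's sessions

# Tags named in the "Failures Due to Flaky Tests" row.
FLAKY_FAILURE_TAGS = {"@test.is_known_flaky", "@test.is_new_flaky"}
# Tags named in the "Failures Due to Non-Flaky Tests" row.
ANY_FLAKY_TAGS = FLAKY_FAILURE_TAGS | {"@test.is_flaky"}

def classify(pipeline: PipelineExecution) -> str | None:
    """Return "flaky", "non_flaky", or None if the execution fits neither row."""
    failing = [t for t in pipeline.tests if t.status == "fail"]
    if not pipeline.failed or not failing:
        return None
    if all(t.tags & FLAKY_FAILURE_TAGS for t in failing):
        return "flaky"        # every failing test is tagged as flaky
    if not any(t.tags & ANY_FLAKY_TAGS for t in failing):
        return "non_flaky"    # no failing test carries any flaky tag
    return None               # mixed failures are counted in neither row

def failure_breakdown(pipelines: list[PipelineExecution]) -> dict:
    with_tests = [p for p in pipelines if p.tests]
    labels = [classify(p) for p in with_tests]
    flaky, non_flaky = labels.count("flaky"), labels.count("non_flaky")
    classified = flaky + non_flaky
    return {
        "executions_with_tests": len(with_tests),
        "flaky": flaky,
        "non_flaky": non_flaky,
        # Assumed presentation: flaky share of all classified test failures.
        "flaky_share": flaky / classified if classified else 0.0,
    }
```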

Time Wasted in CI

This table provides details on testing time, time lost due to failures, and the impact on developer experience.

| Metric | Description |
|---|---|
| Total Testing Time | Sum of the duration of all test sessions. |
| Time Lost Due to Flaky Tests | Total duration of test sessions that failed solely due to flaky tests. Every failing test has one or more of the following tags: `@test.is_known_flaky`, `@test.is_new_flaky`, or `@test.is_flaky`. |
| Time Lost Due to Non-Flaky Tests | Total duration of test sessions that failed due to tests without any flakiness. None of the failing tests has any of the following tags: `@test.is_known_flaky`, `@test.is_new_flaky`, or `@test.is_flaky`. |
| Dev Experience - Time Lost Breakdown | Ratio of time lost to flaky vs. non-flaky test failures: when you lose time due to tests, how much of it is due to flaky tests? A higher ratio of time lost to flaky test failures leads to developer frustration. |
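The following Python sketch, a hypothetical illustration rather than Datadog's implementation, applies the Time Wasted definitions to individual test sessions and weights each failed session by its duration. Note that the flaky condition in this table also accepts `@test.is_flaky`. The `Session` class, its fields, and `time_lost` are illustrative names only.

```python
from __future__ import annotations
from dataclasses import dataclass, field

# All three flaky tags named in the Time Wasted rows.
FLAKY_TAGS = {"@test.is_known_flaky", "@test.is_new_flaky", "@test.is_flaky"}

# Hypothetical per-session record; not a Datadog API type.
@dataclass
class Session:
    duration_s: float
    # One tag set per failed test; an empty list means the session passed.
    failed_test_tags: list[set[str]] = field(default_factory=list)

def time_lost(sessions: list[Session]) -> dict:
    total = sum(s.duration_s for s in sessions)
    flaky = non_flaky = 0.0
    for s in sessions:
        if not s.failed_test_tags:
            continue                      # passed session: no time lost
        if all(tags & FLAKY_TAGS for tags in s.failed_test_tags):
            flaky += s.duration_s         # failed solely due to flaky tests
        elif not any(tags & FLAKY_TAGS for tags in s.failed_test_tags):
            non_flaky += s.duration_s     # failed with no flaky tags at all
    lost = flaky + non_flaky
    return {
        "total_testing_time": total,
        "lost_flaky": flaky,
        "lost_non_flaky": non_flaky,
        "flaky_share_of_lost_time": flaky / lost if lost else 0.0,
    }
```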

Pipelines Saved

This table shows how many pipelines Auto Test Retries has prevented from failing.

These metrics include test sessions in which a flaky test failed, was automatically retried, and then passed. Newer versions of test libraries provide more accurate results because they report more precise telemetry about test retries.

| Metric | Description |
|---|---|
| Pipeline Executions with Tests | Number of pipeline executions with one or more test sessions. |
| Saved by Auto Test Retries | Number of CI pipelines with passed test sessions containing tests with `@test.is_retry:true` and `@test.is_new:false`. |
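As a hypothetical illustration, this Python sketch counts pipelines under the Saved by Auto Test Retries rule. It assumes each pipeline is a list of session dictionaries with a `status` field and a `tests` list whose entries carry boolean `@test.is_retry` and `@test.is_new` keys. This shape is invented for the example and does not reflect Datadog's data model.

```python
from __future__ import annotations

# Hypothetical data shape: a pipeline is a list of test-session dicts, each with a
# "status" and a "tests" list of tag dicts. Not a Datadog API or export format.
def pipeline_saved(sessions: list[dict]) -> bool:
    """True if some passed session contains a test tagged
    @test.is_retry:true and @test.is_new:false."""
    return any(
        s["status"] == "pass"
        and any(
            t.get("@test.is_retry") is True and t.get("@test.is_new") is False
            for t in s["tests"]
        )
        for s in sessions
    )

def saved_by_auto_test_retries(pipelines: list[list[dict]]) -> int:
    # Booleans sum as 0/1, giving the number of saved pipelines.
    return sum(pipeline_saved(p) for p in pipelines)
```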

Time Saved in CI

This table shows how much CI usage time Test Impact Analysis and Auto Test Retries have saved.

| Metric | Description |
|---|---|
| Total Testing Time | Sum of the duration of all test sessions. |
| Total Time Saved | Sum of time saved by Test Impact Analysis and Auto Test Retries. The % of Testing Time value is total time saved as a percentage of Total Testing Time. Total time saved can exceed total testing time if you prevent many unnecessary pipeline and job retries. |
| Saved by Test Impact Analysis | Total duration indicated by `@test_session.itr.time_saved`. |
| Saved by Auto Test Retries | Total duration of passed test sessions in which some tests initially failed but later passed due to Auto Test Retries. These tests are tagged with `@test.is_retry:true` and `@test.is_new:false`. |
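The arithmetic behind the Time Saved in CI table can be sketched in Python as follows, under simplifying assumptions. The `SessionTiming` class and its fields are hypothetical stand-ins: `itr_time_saved_s` plays the role of `@test_session.itr.time_saved`, and `retried_to_pass` marks a session containing a test tagged `@test.is_retry:true` and `@test.is_new:false`. The example at the end shows that the percentage can exceed 100%.

```python
from __future__ import annotations
from dataclasses import dataclass

# Hypothetical per-session timing record; field names are illustrative only.
@dataclass
class SessionTiming:
    duration_s: float
    passed: bool
    itr_time_saved_s: float = 0.0   # stand-in for @test_session.itr.time_saved
    retried_to_pass: bool = False   # has a test with @test.is_retry:true, @test.is_new:false

def time_saved(sessions: list[SessionTiming]) -> dict:
    total_testing = sum(s.duration_s for s in sessions)
    by_tia = sum(s.itr_time_saved_s for s in sessions)
    # Auto Test Retries: count the full duration of passed sessions it rescued.
    by_atr = sum(s.duration_s for s in sessions if s.passed and s.retried_to_pass)
    total_saved = by_tia + by_atr
    return {
        "total_testing_time": total_testing,
        "saved_by_tia": by_tia,
        "saved_by_atr": by_atr,
        "total_saved": total_saved,
        "pct_of_testing_time": 100 * total_saved / total_testing if total_testing else 0.0,
    }

sessions = [
    SessionTiming(500, True, itr_time_saved_s=900),
    SessionTiming(500, True, retried_to_pass=True),
    SessionTiming(200, False, itr_time_saved_s=100),
]
print(time_saved(sessions)["pct_of_testing_time"])  # 125.0
```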

Use cases

Enhance developer experience

Use the Dev Experience - Test Failure Breakdown and Dev Experience - Time Lost Breakdown metrics to identify how often flaky tests, specifically, cause failures and waste CI time.

These Test Optimization features improve developer experience by reducing test failures and wasted time:

- Auto Test Retries reduces the likelihood that a flaky test causes a pipeline to fail, for both your known flaky tests and flaky tests that have not yet been identified. It also gives developers confidence that a failing test is actually broken, because a broken test fails every retry.

- Early Flake Detection, combined with Quality Gates, prevents new flaky tests from entering your default branch.

- Test Impact Analysis reduces the number of flaky tests that run by running only the tests relevant to a change, based on code coverage. Skipping irrelevant tests also shortens the feedback loop for developers.
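The retry behavior described in the list above can be modeled with a short Python sketch under simplifying assumptions: a test is reported as passed if any attempt passes, and attempts of a flaky test fail independently with a fixed probability. The function names and the `max_retries` parameter are hypothetical and are not Auto Test Retries configuration options. Under these assumptions, a flaky test fails the pipeline with probability `p_fail ** (max_retries + 1)`, while a genuinely broken test (`p_fail = 1`) still fails every attempt.

```python
from __future__ import annotations
from typing import Callable

def run_with_retries(run_test: Callable[[], bool], max_retries: int) -> tuple[bool, int]:
    """Return (passed, attempts). Hypothetical model: the test passes if any attempt passes."""
    for attempt in range(1, max_retries + 2):   # initial run plus max_retries retries
        if run_test():
            return True, attempt
    return False, max_retries + 1              # failed every attempt: likely really broken

def failure_probability(p_fail: float, max_retries: int) -> float:
    """Chance that every attempt fails, assuming attempts fail independently."""
    return p_fail ** (max_retries + 1)
```

For example, with a 10% per-attempt failure rate and two retries, `failure_probability(0.1, 2)` is roughly 0.001, compared with 0.1 without retries.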

Maximize pipeline efficiency and reduce costs

Lengthy test suites slow down feedback loops to developers, and running irrelevant tests incurs unnecessary costs.

These Test Optimization features help you save CI time and costs:

- Auto Test Retries: If a single flaky test fails during your test session, the time spent on the entire CI job is lost. Auto Test Retries allows flaky tests to rerun, increasing the likelihood that they pass.

- Test Impact Analysis: By running only the tests relevant to your code changes, you reduce the overall duration of the test session. Skipping unrelated tests also prevents pipelines from failing because of flaky tests among them.
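Test Impact Analysis selects tests based on code coverage. As a simplified, hypothetical illustration (not Datadog's selection algorithm), the Python sketch below runs a test only if a file it covered has changed, and conservatively runs tests with no coverage data. The `select_tests` function and its inputs are invented for this example. A skipped test, flaky or not, cannot fail the pipeline.

```python
from __future__ import annotations

def select_tests(
    coverage: dict[str, set[str]], changed_files: set[str]
) -> tuple[list[str], list[str]]:
    """Split tests into (run, skip) using per-test file coverage (hypothetical model)."""
    run, skip = [], []
    for test, covered in sorted(coverage.items()):
        if not covered or covered & changed_files:
            run.append(test)    # no coverage data, or touches a changed file
        else:
            skip.append(test)   # unrelated to the change; cannot fail this pipeline
    return run, skip
```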
