
Custom Metrics from AWS Lambda Serverless Applications

Overview

There are a few different ways to submit custom metrics to Datadog from a Lambda function.

- Creating custom metrics from logs or traces: If your Lambda functions are already sending trace or log data to Datadog, and the data you want to query is captured in an existing log or trace, you can generate custom metrics from logs and traces without re-deploying or making any changes to your application code.

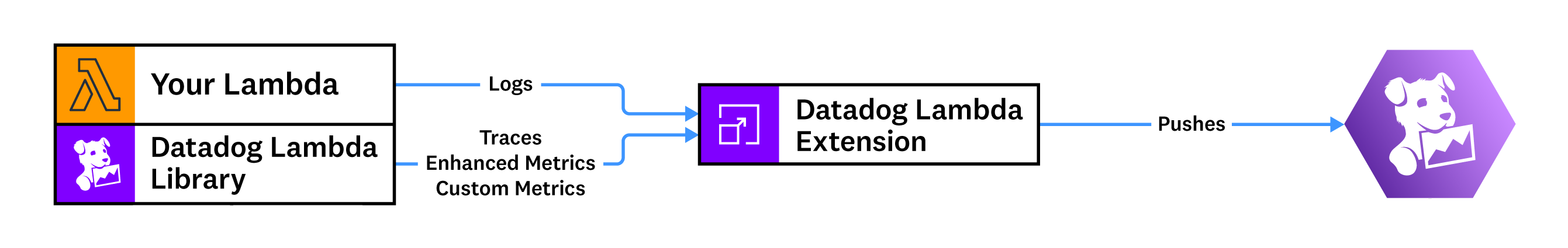

- Submitting custom metrics using the Datadog Lambda extension: If you want to submit custom metrics directly from your Lambda function, Datadog recommends using the Datadog Lambda extension.

- Submitting custom metrics using the Datadog Forwarder Lambda: If you send telemetry from your Lambda function through the Datadog Forwarder Lambda, you can submit custom metrics through logs using the Datadog-provided helper functions.

- (Deprecated) Submitting custom metrics from CloudWatch logs: The method of submitting custom metrics by printing a log line formatted as `MONITORING|<UNIX_EPOCH_TIMESTAMP>|<METRIC_VALUE>|<METRIC_TYPE>|<METRIC_NAME>|#<TAG_LIST>` has been deprecated. Datadog recommends using the Datadog Lambda extension instead.

- (Deprecated) Submitting custom metrics using the Datadog Lambda library: The Datadog Lambda library for Python, Node.js, and Go supports sending custom metrics synchronously from the runtime to Datadog by blocking the invocation when `DD_FLUSH_TO_LOG` is set to `false`. Besides the performance overhead, metric submissions may fail intermittently, because they are not retried when transient network issues occur. Datadog recommends using the Datadog Lambda extension instead.

- (Not recommended) Using a third-party library: Most third-party libraries do not submit metrics as distributions, which can lead to undercounted results. You may also encounter intermittent errors, because submissions are not retried when transient network issues occur.

Understanding distribution metrics

When Datadog receives multiple count or gauge metric points that share the same timestamp and set of tags, only the most recent one counts. This works for host-based applications because the Datadog Agent aggregates metric points and tags them with a unique host tag.

A Lambda function may launch many concurrent execution environments when traffic increases. The function may submit count or gauge metric points that overwrite each other and cause undercounted results. To avoid this problem, custom metrics generated by Lambda functions are submitted as distributions because distribution metric points are aggregated on the Datadog backend, and every metric point counts.
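To see why distributions avoid undercounting, the following self-contained Python sketch models the two storage behaviors described above. It does not call any Datadog API and is a simplified model, not Datadog's actual backend; it assumes three concurrent execution environments each report a count of 1 for the same metric, timestamp, and tags (the metric name and values are hypothetical).

```python
from collections import defaultdict

# Each tuple models one metric point: (metric name, timestamp, tags, value).
# Three concurrent execution environments report the same count in the same second.
points = [
    ("coffee_house.orders", 1572273854, ("order:online",), 1),
    ("coffee_house.orders", 1572273854, ("order:online",), 1),
    ("coffee_house.orders", 1572273854, ("order:online",), 1),
]


def last_write_wins(points):
    """Model count/gauge storage: only the most recent point per key survives."""
    stored = {}
    for name, ts, tags, value in points:
        stored[(name, ts, tags)] = value  # later points overwrite earlier ones
    return stored


def distribution(points):
    """Model distribution storage: every point is kept for backend aggregation."""
    stored = defaultdict(list)
    for name, ts, tags, value in points:
        stored[(name, ts, tags)].append(value)
    return stored


key = ("coffee_house.orders", 1572273854, ("order:online",))
print("count/gauge result:", last_write_wins(points)[key])  # 1
print("distribution sum:", sum(distribution(points)[key]))  # 3
```

Three orders happened, but the last-write-wins model keeps only one of them, while the distribution model keeps all three points.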

Distributions provide avg, sum, max, min, and count aggregations by default. On the Metric Summary page, you can enable percentile aggregations (p50, p75, p90, p95, p99) and also manage tags. To monitor a distribution for a gauge metric type, use avg for both the time and space aggregations. To monitor a distribution for a count metric type, use sum for both the time and space aggregations. See the Query to the Graph guide to learn how time and space aggregations work.
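The choice between avg and sum can be illustrated with a small Python sketch over hypothetical values. For simplicity it collapses time and space aggregation into a single step over one set of points, so treat it as an illustration of the idea rather than how Datadog evaluates a query.

```python
import statistics

# Hypothetical distribution points for a gauge-like metric (queue depth),
# reported by four execution environments.
queue_depth = [4.0, 6.0, 5.0, 5.0]
# Hypothetical distribution points for a count-like metric (orders per invocation).
orders = [1.0, 2.0, 1.0, 3.0]


def default_aggregations(values):
    """Compute the five aggregations that distributions provide by default."""
    return {
        "avg": statistics.mean(values),
        "sum": sum(values),
        "max": max(values),
        "min": min(values),
        "count": len(values),
    }


gauge_view = default_aggregations(queue_depth)["avg"]  # gauge-like metric: use avg
count_view = default_aggregations(orders)["sum"]       # count-like metric: use sum
print(gauge_view, count_view)  # 5.0 7.0
```

Summing the queue-depth points would report 20, which is not a meaningful queue depth; averaging the order points would report 1.75 instead of the 7 orders that actually occurred.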

Submitting historical metrics

To submit historical metrics (only timestamps within the last 20 minutes are allowed), you must use the Datadog Forwarder. The Datadog Lambda Extension can only submit metrics with the current timestamp because of a limitation of the StatsD protocol.
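Before submitting a historical point through the Forwarder, you may want to check it against the 20-minute window in your own code. The helper below is a hypothetical sketch; it does not submit anything. It assumes the window is inclusive and that timestamps in the future should also be rejected; neither assumption is stated above.

```python
import time

# Historical timestamps are accepted only within the last 20 minutes.
MAX_AGE_SECONDS = 20 * 60


def is_submittable(timestamp, now=None):
    """Return True if a Unix epoch timestamp (seconds) is within the last 20 minutes.

    Hypothetical helper. Assumes the boundary is inclusive and that future
    timestamps are invalid.
    """
    if now is None:
        now = time.time()
    age = now - timestamp
    return 0 <= age <= MAX_AGE_SECONDS


now = 1_700_000_000
print(is_submittable(now - 5 * 60, now))   # True: 5 minutes old
print(is_submittable(now - 30 * 60, now))  # False: 30 minutes old
```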

Submitting many data points

When you use the Forwarder to submit many data points for the same metric and the same set of tags (for example, inside a large `for` loop), the extra log lines can noticeably affect the Lambda function's performance and increase CloudWatch costs. To avoid this overhead, aggregate the data points in your application, as in the following Python example:

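```python
from datadog_lambda.metric import lambda_metric


def lambda_handler(event, context):
    # Inefficient when event['Records'] contains many records:
    # every iteration submits a separate data point
    for record in event['Records']:
        lambda_metric("record_count", 1)

    # Improved implementation: aggregate first, then submit a single data point
    record_count = 0
    for record in event['Records']:
        record_count += 1
    lambda_metric("record_count", record_count)
```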
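When records carry different tag values, the same idea extends to one aggregated data point per tag set. The sketch below is a hypothetical variation: it groups records by an assumed `order_type` field and takes the submitting function as a parameter, so it runs without the Datadog Lambda library. In a real function you would pass `lambda_metric`, which accepts a `tags` keyword argument.

```python
from collections import Counter


def submit_record_counts(records, submit):
    """Aggregate records per tag set, then submit one data point per tag set.

    `records` are dicts with a hypothetical "order_type" field; `submit` is any
    callable with a lambda_metric-like signature (name, value, tags=...).
    """
    counts = Counter(record.get("order_type", "unknown") for record in records)
    for order_type, count in sorted(counts.items()):
        submit("record_count", count, tags=[f"order_type:{order_type}"])


# Usage with a stand-in submit function that records calls instead of sending them.
calls = []
records = [{"order_type": "online"}, {"order_type": "online"}, {"order_type": "in_store"}]
submit_record_counts(records, lambda name, value, tags: calls.append((name, value, tags)))
print(calls)
# [('record_count', 1, ['order_type:in_store']), ('record_count', 2, ['order_type:online'])]
```

Three records produce two submissions instead of three; with thousands of records the saving grows accordingly.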

Creating custom metrics from logs or traces

With log-based metrics, you can record a count of logs that match a query or summarize a numeric value contained in a log, such as a request duration. Log-based metrics are a cost-efficient way to summarize log data from the entire ingest stream. Learn more about creating log-based metrics.
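For a log-based metric to summarize a numeric value, the value has to be present in the log. The sketch below prints a JSON log line with a hypothetical `duration_ms` attribute. In Lambda, anything written to stdout is captured as a function log, which your Datadog log collection (the Lambda extension or the Forwarder) then sends to Datadog. It assumes the line is parsed as JSON so that `duration_ms` becomes an attribute; the field names are illustrative, and the log-based metric itself is still configured in Datadog.

```python
import json
import time


def log_request_duration(route, started_at, finished_at):
    """Print one JSON log line carrying a numeric duration attribute.

    A log-based metric could count these lines or summarize the hypothetical
    "duration_ms" attribute. Times are in seconds, as returned by perf_counter().
    """
    line = {
        "message": "request completed",
        "route": route,
        "duration_ms": round((finished_at - started_at) * 1000, 3),
    }
    print(json.dumps(line))
    return line


start = time.perf_counter()
# ... handle the request ...
log_request_duration("/orders", start, time.perf_counter())
```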

You can also generate metrics from all ingested spans, regardless of whether they are indexed by a retention filter. Learn more about creating span-based metrics.

With the Datadog Lambda Extension

Datadog recommends using the Datadog Lambda Extension to submit custom metrics as distributions from the supported Lambda runtimes.

- Follow the general serverless installation instructions appropriate for your Lambda runtime.

- If you are not interested in collecting traces from your Lambda function, set the environment variable `DD_TRACE_ENABLED` to `false`.

- If you are not interested in collecting logs from your Lambda function, set the environment variable `DD_SERVERLESS_LOGS_ENABLED` to `false`.

- Follow the sample code or instructions below to submit your custom metric.

```python
from datadog_lambda.metric import lambda_metric


def lambda_handler(event, context):
    lambda_metric(
        "coffee_house.order_value",             # Metric name
        12.45,                                  # Metric value
        tags=['product:latte', 'order:online']  # Associated tags
    )
```

```javascript
const { sendDistributionMetric } = require('datadog-lambda-js');

async function myHandler(event, context) {
  sendDistributionMetric(
    'coffee_house.order_value', // Metric name
    12.45,                      // Metric value
    'product:latte',            // First tag
    'order:online'              // Second tag
  );
}

module.exports.myHandler = myHandler;
```

```go
package main

import (
	"context"

	"github.com/aws/aws-lambda-go/lambda"
	ddlambda "github.com/DataDog/datadog-lambda-go"
)

// MyEvent is a placeholder for your function's input event type.
type MyEvent struct{}

func main() {
	lambda.Start(ddlambda.WrapFunction(myHandler, nil))
}

func myHandler(ctx context.Context, event MyEvent) (string, error) {
	ddlambda.Distribution(
		"coffee_house.order_value",      // Metric name
		12.45,                           // Metric value
		"product:latte", "order:online", // Associated tags
	)
	return "", nil
}
```

Install the latest version of java-dogstatsd-client and then follow the sample code below to submit your custom metrics as distributions.

```java
package com.datadog.lambda.sample.java;

import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;
import com.amazonaws.services.lambda.runtime.events.APIGatewayV2ProxyRequestEvent;
import com.amazonaws.services.lambda.runtime.events.APIGatewayV2ProxyResponseEvent;

// import the statsd client builder
import com.timgroup.statsd.NonBlockingStatsDClientBuilder;
import com.timgroup.statsd.StatsDClient;

public class Handler implements RequestHandler<APIGatewayV2ProxyRequestEvent, APIGatewayV2ProxyResponseEvent> {

    // instantiate the statsd client
    private static final StatsDClient Statsd = new NonBlockingStatsDClientBuilder().hostname("localhost").build();

    @Override
    public APIGatewayV2ProxyResponseEvent handleRequest(APIGatewayV2ProxyRequestEvent request, Context context) {
        // submit a distribution metric
        Statsd.recordDistributionValue("my.custom.java.metric", 1, new String[]{"tag:value"});

        APIGatewayV2ProxyResponseEvent response = new APIGatewayV2ProxyResponseEvent();
        response.setStatusCode(200);
        return response;
    }

    static {
        // ensure all metrics are flushed before shutdown
        Runtime.getRuntime().addShutdownHook(new Thread() {
            @Override
            public void run() {
                System.out.println("[runtime] shutdownHook triggered");
                try {
                    Thread.sleep(300);
                } catch (InterruptedException e) {
                    System.out.println("[runtime] sleep interrupted");
                }
                System.out.println("[runtime] exiting");
            }
        });
    }
}
```

Install the latest version of dogstatsd-csharp-client and then follow the sample code below to submit your custom metrics as distribution metrics.

```csharp
using System;
using System.IO;
// import the statsd client
using StatsdClient;

namespace Example
{
    public class Function
    {
        static Function()
        {
            // instantiate the statsd client
            var dogstatsdConfig = new StatsdConfig
            {
                StatsdServerName = "127.0.0.1",
                StatsdPort = 8125,
            };

            if (!DogStatsd.Configure(dogstatsdConfig))
                throw new InvalidOperationException("Cannot initialize DogstatsD. Set optionalExceptionHandler argument in the `Configure` method for more information.");
        }

        public Stream MyHandler(Stream stream)
        {
            // submit a distribution metric
            DogStatsd.Distribution("my.custom.dotnet.metric", 1, tags: new[] { "tag:value" });

            // your function logic

            return stream; // placeholder: return your function's actual response
        }
    }
}
```

For other runtimes:

- Install the DogStatsD client for your runtime.

- Follow the sample code to submit your custom metrics as distributions.
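If no DogStatsD client fits your runtime, the datagram format is simple enough to send directly. The sketch below is written in Python for consistency with the rest of this page, but the same datagram can be built in any language. It assumes the Datadog Lambda Extension's DogStatsD listener on UDP `127.0.0.1:8125` (the address the Java and .NET samples above point at) and uses the DogStatsD distribution type `d`; the helper names are hypothetical.

```python
import socket

# DogStatsD listener assumed to be provided by the Datadog Lambda Extension.
DOGSTATSD_ADDRESS = ("127.0.0.1", 8125)


def format_distribution(name, value, tags=()):
    """Build a DogStatsD distribution datagram: <name>:<value>|d|#<tag1>,<tag2>."""
    datagram = f"{name}:{value}|d"
    if tags:
        datagram += "|#" + ",".join(tags)
    return datagram


def send_distribution(name, value, tags=()):
    """Send one distribution point over UDP (fire-and-forget, no retries)."""
    payload = format_distribution(name, value, tags).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, DOGSTATSD_ADDRESS)


print(format_distribution("coffee_house.order_value", 12.45, ["product:latte", "order:online"]))
# coffee_house.order_value:12.45|d|#product:latte,order:online
```

UDP gives no delivery confirmation, which is why a maintained DogStatsD client remains the preferred option where one exists.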

With the Datadog Forwarder

Datadog recommends using the Datadog Forwarder Lambda to submit custom metrics from Lambda runtimes that are not supported by the Datadog Lambda Extension.

- Follow the general serverless installation instructions to instrument your Lambda function using the Datadog Forwarder Lambda function.

- If you are not interested in collecting traces from your Lambda function, set the environment variable `DD_TRACE_ENABLED` to `false` on your own Lambda function.

- If you are not interested in collecting logs from your Lambda function, set the Forwarder’s CloudFormation stack parameter `DdForwardLog` to `false`.

- Import and use the helper function from the Datadog Lambda Library, such as `lambda_metric` or `sendDistributionMetric`, to submit your custom metrics following the sample code below.

```python
import time

from datadog_lambda.metric import lambda_metric


def lambda_handler(event, context):
    lambda_metric(
        "coffee_house.order_value",             # Metric name
        12.45,                                  # Metric value
        tags=['product:latte', 'order:online']  # Associated tags
    )

    # Submit a metric with a timestamp that is within the last 20 minutes
    lambda_metric(
        "coffee_house.order_value",             # Metric name
        12.45,                                  # Metric value
        timestamp=int(time.time()),             # Unix epoch in seconds
        tags=['product:latte', 'order:online']  # Associated tags
    )
```

```javascript
const { sendDistributionMetric, sendDistributionMetricWithDate } = require('datadog-lambda-js');

async function myHandler(event, context) {
  sendDistributionMetric(
    'coffee_house.order_value', // Metric name
    12.45,                      // Metric value
    'product:latte',            // First tag
    'order:online'              // Second tag
  );

  // Submit a metric with a timestamp that is within the last 20 minutes
  sendDistributionMetricWithDate(
    'coffee_house.order_value', // Metric name
    12.45,                      // Metric value
    new Date(Date.now()),       // date
    'product:latte',            // First tag
    'order:online',             // Second tag
  );
}

module.exports.myHandler = myHandler;
```

```go
package main

import (
	"context"
	"time"

	"github.com/aws/aws-lambda-go/lambda"
	ddlambda "github.com/DataDog/datadog-lambda-go"
)

// MyEvent is a placeholder for your function's input event type.
type MyEvent struct{}

func main() {
	lambda.Start(ddlambda.WrapFunction(myHandler, nil))
}

func myHandler(ctx context.Context, event MyEvent) (string, error) {
	ddlambda.Distribution(
		"coffee_house.order_value",      // Metric name
		12.45,                           // Metric value
		"product:latte", "order:online", // Associated tags
	)

	// Submit a metric with a timestamp that is within the last 20 minutes
	ddlambda.MetricWithTimestamp(
		"coffee_house.order_value",      // Metric name
		12.45,                           // Metric value
		time.Now(),                      // Timestamp
		"product:latte", "order:online", // Associated tags
	)

	return "", nil
}
```

```ruby
require 'datadog/lambda'

def handler(event:, context:)
  # You only need to wrap your function handler (not helper functions).
  Datadog::Lambda.wrap(event, context) do
    Datadog::Lambda.metric(
      'coffee_house.order_value',         # Metric name
      12.45,                              # Metric value
      "product":"latte", "order":"online" # Associated tags
    )

    # Submit a metric with a timestamp that is within the last 20 minutes
    Datadog::Lambda.metric(
      'coffee_house.order_value',         # Metric name
      12.45,                              # Metric value
      time: Time.now.utc,                 # Timestamp
      "product":"latte", "order":"online" # Associated tags
    )
  end
end
```

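```java
import java.util.HashMap;
import java.util.Map;

import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;
import com.amazonaws.services.lambda.runtime.events.APIGatewayV2ProxyRequestEvent;
import com.amazonaws.services.lambda.runtime.events.APIGatewayV2ProxyResponseEvent;
import com.datadoghq.datadog_lambda_java.DDLambda;

public class Handler implements RequestHandler<APIGatewayV2ProxyRequestEvent, APIGatewayV2ProxyResponseEvent> {
    public APIGatewayV2ProxyResponseEvent handleRequest(APIGatewayV2ProxyRequestEvent request, Context context) {
        DDLambda dd = new DDLambda(request, context);

        Map<String, String> myTags = new HashMap<String, String>();
        myTags.put("product", "latte");
        myTags.put("order", "online");

        dd.metric(
            "coffee_house.order_value", // Metric name
            12.45,                      // Metric value
            myTags);                    // Associated tags

        APIGatewayV2ProxyResponseEvent response = new APIGatewayV2ProxyResponseEvent();
        response.setStatusCode(200);
        return response;
    }
}
```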

For other runtimes, write a reusable function that logs your custom metrics in the following format:

```json
{
  "m": "Metric name",
  "v": "Metric value",
  "e": "Unix timestamp (seconds)",
  "t": "Array of tags"
}
```

For example:

```json
{
  "m": "coffee_house.order_value",
  "v": 12.45,
  "e": 1572273854,
  "t": ["product:latte", "order:online"]
}
```
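Such a reusable function can be sketched in a few lines. It is shown in Python to match the rest of this page (Python users can use `lambda_metric` directly); the function name is hypothetical, and it assumes the current Unix time in seconds is the right default for `e`.

```python
import json
import time


def log_metric(name, value, tags=(), timestamp=None):
    """Print one custom metric as a JSON log line using the m/v/e/t keys.

    Hypothetical helper; `timestamp` defaults to the current Unix time in seconds.
    """
    entry = {
        "m": name,
        "v": value,
        "e": int(time.time()) if timestamp is None else int(timestamp),
        "t": list(tags),
    }
    line = json.dumps(entry)
    print(line)
    return line


log_metric("coffee_house.order_value", 12.45, ["product:latte", "order:online"], timestamp=1572273854)
# {"m": "coffee_house.order_value", "v": 12.45, "e": 1572273854, "t": ["product:latte", "order:online"]}
```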

[DEPRECATED] CloudWatch logs

This method of submitting custom metrics is no longer supported, and is disabled for all new customers. Migrate to one of the recommended solutions.

Note: If you are migrating to one of the recommended solutions, you’ll need to start instrumenting your custom metrics under new metric names when submitting them to Datadog. The same metric name cannot simultaneously exist as both distribution and non-distribution metric types.

This requires the following AWS permissions in your Datadog IAM policy.

| AWS Permission | Description |
|---|---|
| `logs:DescribeLogGroups` | List available log groups. |
| `logs:DescribeLogStreams` | List available log streams for a group. |
| `logs:FilterLogEvents` | Fetch specific log events for a stream to generate metrics. |

To send custom metrics to Datadog from your Lambda logs, print a log line using the following format:

```
MONITORING|<UNIX_EPOCH_TIMESTAMP>|<METRIC_VALUE>|<METRIC_TYPE>|<METRIC_NAME>|#<TAG_LIST>
```

Where:

- `MONITORING` signals to the Datadog integration that it should collect this log entry.

- `<UNIX_EPOCH_TIMESTAMP>` is in seconds, not milliseconds.

- `<METRIC_VALUE>` must be a number (that is, an integer or a float).

- `<METRIC_TYPE>` is `count`, `gauge`, `histogram`, or `check`.

- `<METRIC_NAME>` uniquely identifies your metric and follows the metric naming policy.

- `<TAG_LIST>` is optional, comma separated, and must be preceded by `#`. The tag `function_name:<name_of_the_function>` is automatically applied to custom metrics.
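For existing legacy setups, the rules above can be enforced in a small formatter. This is a hypothetical Python helper, not part of any Datadog library. It assumes that when no tags are given, the trailing `|#` segment can be omitted, since the tag list is optional.

```python
import time

ALLOWED_TYPES = {"count", "gauge", "histogram", "check"}


def format_monitoring_line(name, value, metric_type, tags=(), timestamp=None):
    """Build a legacy MONITORING|... log line, validating the rules listed above."""
    if metric_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported metric type: {metric_type}")
    # bool is a subclass of int in Python, so reject it explicitly
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise TypeError("metric value must be an integer or a float")
    ts = int(time.time()) if timestamp is None else int(timestamp)  # seconds, not ms
    line = f"MONITORING|{ts}|{value}|{metric_type}|{name}"
    if tags:
        line += "|#" + ",".join(tags)
    return line


print(format_monitoring_line("coffee_house.order_value", 12.45, "gauge",
                             ["product:latte", "order:online"], timestamp=1572273854))
# MONITORING|1572273854|12.45|gauge|coffee_house.order_value|#product:latte,order:online
```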

Note: The sum for each timestamp is used for counts, and the last value for a given timestamp is used for gauges. Avoid printing a log statement every time you increment a metric, because this increases the time it takes to parse your logs. Instead, continually update the value of the metric in your code, and print one log statement for that metric before the function finishes.
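The pattern in the note above (update in memory, print once) can be sketched as follows. The handler, the metric name `records.processed`, and the tag `source:sqs` are hypothetical; the `finally` block ensures the single log line is printed even if processing raises partway through.

```python
import time


def handler(event, context):
    """Legacy pattern: update the metric in memory, print one log line at the end."""
    processed = 0
    try:
        for record in event.get("Records", []):
            processed += 1  # update the value instead of logging per increment
    finally:
        # One MONITORING line per metric, printed before the function finishes.
        print(f"MONITORING|{int(time.time())}|{processed}|count|records.processed|#source:sqs")
    return processed
```

Invoking `handler({"Records": [{}, {}, {}]}, None)` prints a single line with the value `3`, rather than three separate lines.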