Pipeline Data Model And Execution Types

Overview

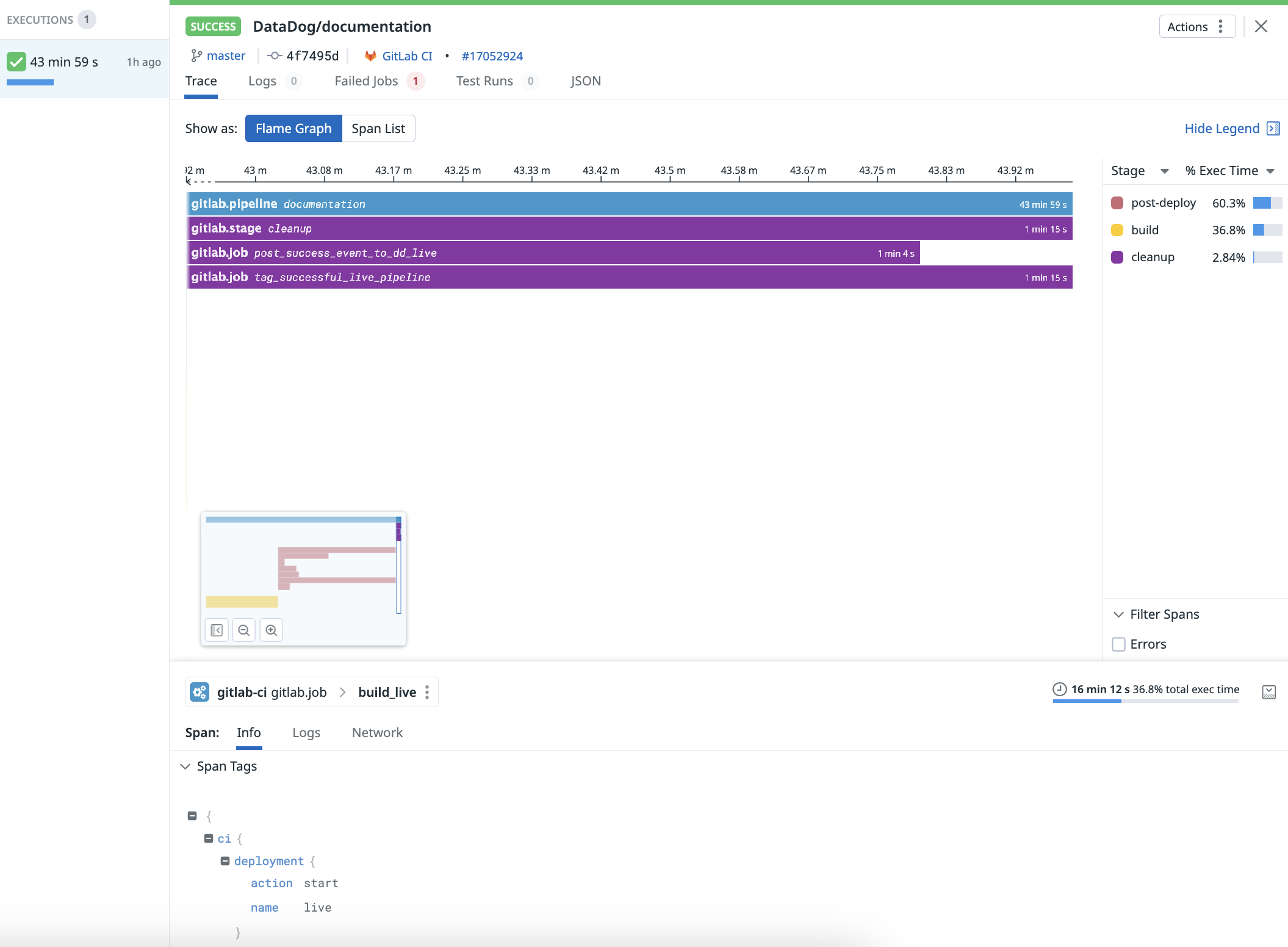

This guide describes how to programmatically set up pipeline executions in CI Visibility and defines the types of pipeline execution that CI Visibility supports.

This guide applies to pipelines created using the public CI Visibility Pipelines API. Integrations with other CI providers may vary.

Data model

Pipeline executions are modeled as traces, similar to an APM distributed trace, where spans represent the execution of different parts of the pipeline. The CI Visibility data model for representing pipeline executions consists of four levels:

| Level Name | Description |
|---|---|
| Pipeline (required) | The top-level root span that contains all other levels as children. It represents the overall execution of a pipeline from start to finish. This level is sometimes called build or workflow in some CI providers. |
| Stage | Serves as a grouping of jobs under a user-defined name. Some CI providers do not have this level. |
| Job | The smallest unit of work where commands are executed. All tasks at this level should be performed on a single node. |
| Step | In some CI providers, this level represents a shell script or an action executed within a job. |

When a pipeline, stage, job, or step finishes, execution data is sent to Datadog. To set up Pipeline Visibility, see the list of supported CI providers. If your CI provider or workflow is not supported, you can use the public API endpoint to send your pipeline executions to CI Visibility.

Stages, jobs, and steps are expected to have exactly the same pipeline name as their parent pipeline. If the names do not match, some pipelines may be missing stage, job, and step information; for example, jobs may be missing from the job summary tables.
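The naming rule above can be checked before anything is sent. The following is a minimal sketch, not part of the API: the `Event` class, its attribute names, and `find_name_mismatches` are hypothetical helpers, and the comparison assumes that "exact same" means a case-sensitive string match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical in-memory view of one event; not the API schema."""
    level: str               # "pipeline", "stage", "job", or "step"
    unique_id: str
    pipeline_unique_id: str  # ID of the owning pipeline
    pipeline_name: str       # pipeline name carried by this event


def find_name_mismatches(events):
    """Return child events whose pipeline name differs from their parent's."""
    parent_names = {
        e.unique_id: e.pipeline_name for e in events if e.level == "pipeline"
    }
    return [
        e for e in events
        if e.level != "pipeline"
        and e.pipeline_unique_id in parent_names
        and e.pipeline_name != parent_names[e.pipeline_unique_id]
    ]


events = [
    Event("pipeline", "P_1", "P_1", "deploy"),
    Event("job", "J1_1", "P_1", "deploy"),
    Event("job", "J2_1", "P_1", "deploy-v2"),  # name differs from the parent
]
mismatched = find_name_mismatches(events)
```

Here `mismatched` contains only the job `J2_1`, which is the kind of event that could go missing from the job summary tables.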

Pipeline unique IDs

Every pipeline execution within a level must have a unique identifier. For example, a pipeline and a job may share the same unique ID, but two pipelines may not.

If repeated IDs are sent with different timestamps, the user interface may behave unexpectedly. For example, flame graphs may display span tags from a different pipeline execution. If duplicate IDs with the same timestamps are sent, only the values of the last pipeline execution received are stored.
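One way to guard against repeated IDs is to check uniqueness per level before sending. This is a small sketch under the assumption that each event can be reduced to a `(level, unique_id)` pair; the function name `find_duplicate_ids` is hypothetical.

```python
from collections import Counter


def find_duplicate_ids(events):
    """Return the (level, unique_id) pairs that occur more than once.

    Uniqueness is scoped to a level, so the same ID may appear once as a
    pipeline and once as a job without being reported.
    """
    counts = Counter(events)
    return sorted(key for key, n in counts.items() if n > 1)


events = [
    ("pipeline", "42"),
    ("job", "42"),        # allowed: different level
    ("pipeline", "43"),
    ("pipeline", "42"),   # not allowed: second pipeline with ID 42
]
duplicates = find_duplicate_ids(events)
```

Only the pipeline-level ID `42` is reported; the job with the same ID is fine because it belongs to a different level.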

Pipeline execution types

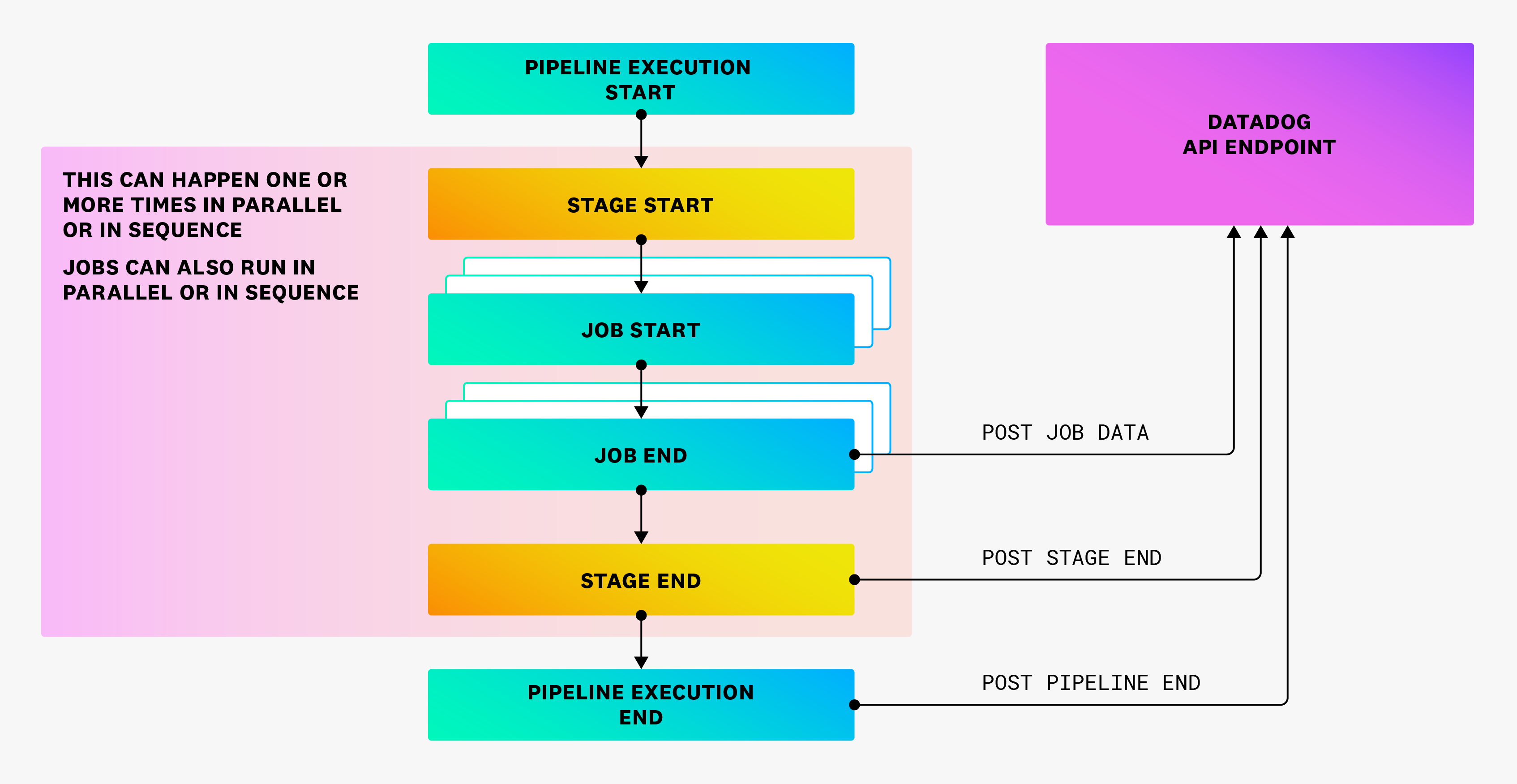

Normal run

The normal run of a pipeline execution follows the flow depicted below:

Depending on the provider, some levels may be missing. For example, stages may not exist, and jobs may run in parallel or sequence, or a combination of both.

After the completion of each component, a payload must be sent to Datadog with all the necessary data to represent the execution. Datadog processes this data, stores it as a pipeline event, and displays it in CI Visibility. Pipeline executions must end before sending them to Datadog.
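To illustrate the rule that an execution must end before it is sent, the sketch below builds a pipeline-level event only once an end time is known. The function `build_pipeline_event` and the keys `level`, `unique_id`, `name`, `start`, `end`, and `status` are assumptions made for illustration, not a confirmed schema; the public API endpoint specification is the authority on field names.

```python
from datetime import datetime, timezone
from typing import Optional


def build_pipeline_event(unique_id: str, name: str, start: datetime,
                         end: Optional[datetime], status: str) -> dict:
    """Build a pipeline-level event dict (hypothetical shape) for a finished run."""
    if end is None:
        raise ValueError("pipeline execution has not ended yet; do not send it")
    if end < start:
        raise ValueError("end time precedes start time")
    return {
        "level": "pipeline",      # assumed key name
        "unique_id": unique_id,   # assumed key name
        "name": name,
        "start": start.isoformat(),
        "end": end.isoformat(),
        "status": status,
    }


started = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
finished = datetime(2024, 1, 1, 12, 5, tzinfo=timezone.utc)
event = build_pipeline_event("P_1", "deploy", started, finished, "success")
```

Calling the function with `end=None` raises `ValueError`, which keeps a still-running pipeline from being reported.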

Full retries

Full retries of a pipeline must have different pipeline unique IDs.

In the public API endpoint, you can populate the previous_attempt field to link to previous retries. Retries are treated as separate pipeline executions in Datadog, and the start and end time should only encompass that retry.
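A full retry could be derived from the previous attempt as sketched below. Only the `previous_attempt` field name comes from this guide; its inner `{"id": ...}` shape, the other keys, the status values, and the helper `make_full_retry` are assumptions.

```python
def make_full_retry(previous: dict, new_unique_id: str,
                    start: str, end: str, status: str) -> dict:
    """Build the event for a full retry, linked to the previous attempt."""
    if new_unique_id == previous["unique_id"]:
        raise ValueError("a full retry needs a different pipeline unique ID")
    return {
        "level": "pipeline",
        "unique_id": new_unique_id,
        "name": previous["name"],
        # Start and end cover this retry only, not the earlier attempt.
        "start": start,
        "end": end,
        "status": status,
        "previous_attempt": {"id": previous["unique_id"]},  # inner shape assumed
    }


first = {
    "level": "pipeline", "unique_id": "P_1", "name": "deploy",
    "start": "2024-01-01T12:00:00Z", "end": "2024-01-01T12:05:00Z",
    "status": "error",
}
retry = make_full_retry(first, "P_2",
                        "2024-01-01T12:10:00Z", "2024-01-01T12:14:00Z",
                        "success")
```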

Partial retries

When retrying a subset of jobs within a pipeline, you must send a new pipeline event with a new pipeline unique ID. The payload for any new jobs must be linked to the new pipeline unique ID. To link them to the previous retry, add the previous_attempt field.

Partial retries are also treated as separate pipelines. The start and end time must not include the time of the original attempt. For a partial retry, do not send payloads for jobs that ran in the previous attempt. Also, set the partial_retry field to true on partial retries to exclude them from aggregation when calculating run times.

For example, a pipeline named P has three different jobs, namely J1, J2, and J3, executed sequentially. In the first run of P, only J1 and J2 are executed, and J2 fails.

Therefore, you need to send a total of three payloads:

- Job payload for J1, with ID J1_1 and pipeline ID P_1.
- Job payload for J2, with ID J2_1 and pipeline ID P_1.
- Pipeline payload for P, with ID P_1.

Suppose there is a partial retry of the pipeline starting from J2, where all the remaining jobs succeed.

You need to send three additional payloads:

- Job payload for J2, with ID J2_2 and pipeline ID P_2.
- Job payload for J3, with ID J3_1 and pipeline ID P_2.
- Pipeline payload for P, with ID P_2.

The actual values of the IDs are not important. What matters is that they are correctly modified based on the pipeline run as specified above.
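The six payloads from this example can be sketched as plain dictionaries. The field names `partial_retry` and `previous_attempt` come from this guide; every other key, the status values, and the helpers `job_event` and `pipeline_event` are assumptions for illustration. Timestamps are omitted for brevity.

```python
def job_event(name, job_id, pipeline_id, status):
    """Job-level event (hypothetical shape) tied to one pipeline execution."""
    return {
        "level": "job",
        "name": name,
        "id": job_id,
        "pipeline_unique_id": pipeline_id,
        "pipeline_name": "P",  # must match the parent pipeline's name
        "status": status,
    }


def pipeline_event(pipeline_id, status, previous=None):
    """Pipeline-level event; passing `previous` marks it as a partial retry."""
    event = {
        "level": "pipeline",
        "name": "P",
        "unique_id": pipeline_id,
        "status": status,
        "partial_retry": previous is not None,
    }
    if previous is not None:
        event["previous_attempt"] = {"id": previous}  # inner shape assumed
    return event


# First run: J1 succeeds, J2 fails, J3 never starts.
first_run = [
    job_event("J1", "J1_1", "P_1", "success"),
    job_event("J2", "J2_1", "P_1", "error"),
    pipeline_event("P_1", "error"),
]

# Partial retry from J2: new pipeline ID, no payload for J1.
partial_retry = [
    job_event("J2", "J2_2", "P_2", "success"),
    job_event("J3", "J3_1", "P_2", "success"),
    pipeline_event("P_2", "success", previous="P_1"),
]
```

Note that J1 does not appear in `partial_retry`, and that J2 gets a fresh job ID because it ran again under the new pipeline ID.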

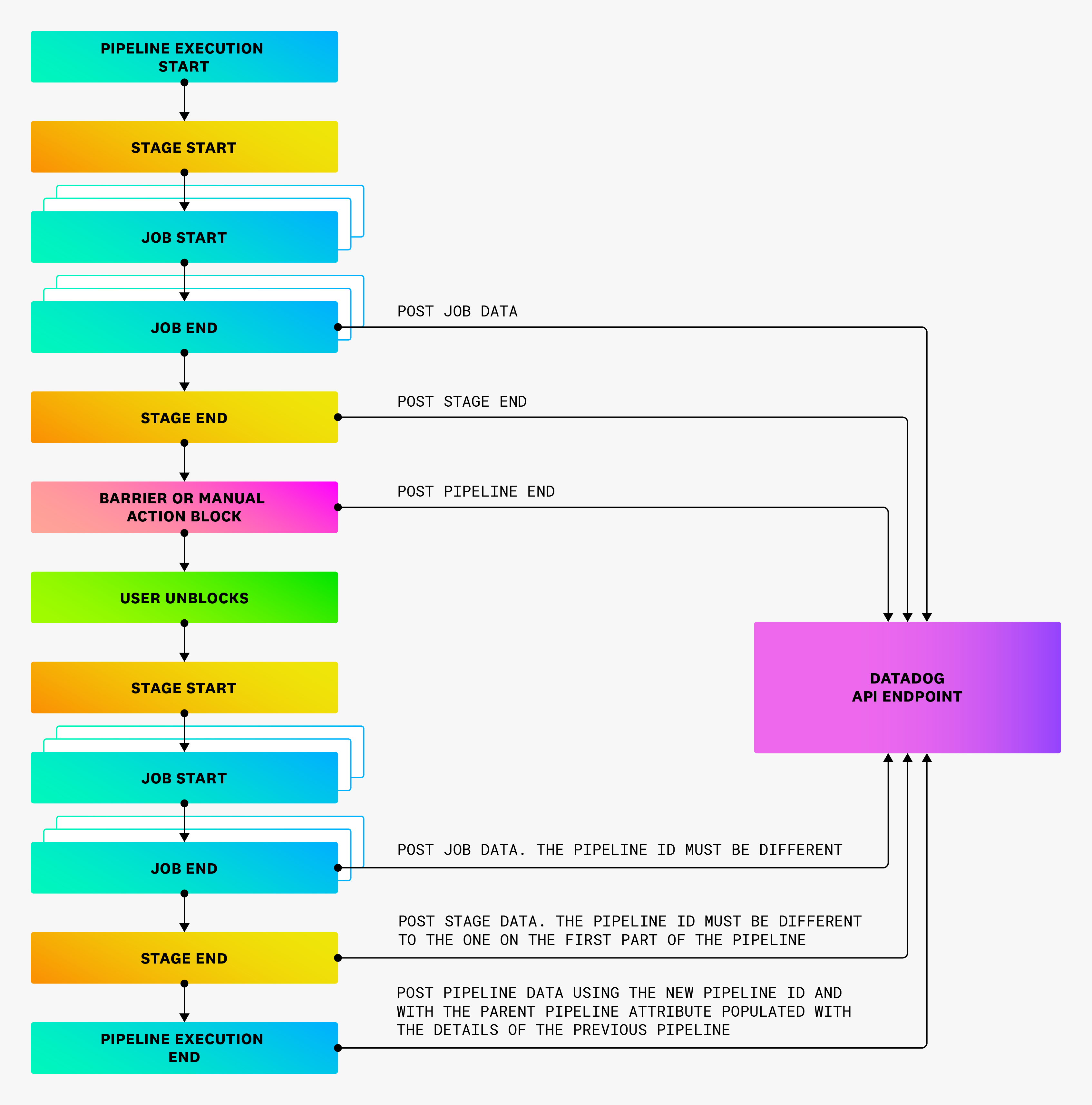

Blocked pipelines

If a pipeline is indefinitely blocked due to requiring manual intervention, a pipeline event payload must be sent as soon as the pipeline reaches the blocked state. The pipeline status must be set to blocked.

The remaining pipeline data must be sent in separate payloads with a different pipeline unique ID. In the second pipeline, you can set is_resumed to true to signal that the execution was resumed from a blocked pipeline.
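The two-payload pattern for a blocked pipeline might look like the sketch below. The `blocked` status and the `is_resumed` field come from this guide; the remaining keys and the helper `split_blocked_execution` are assumptions.

```python
def split_blocked_execution(name, blocked_id, resumed_id, final_status):
    """Return the (blocked, resumed) pipeline events for one blocked run."""
    if blocked_id == resumed_id:
        raise ValueError("the resumed pipeline needs a different unique ID")
    blocked = {
        "level": "pipeline",
        "name": name,
        "unique_id": blocked_id,
        "status": "blocked",   # sent as soon as the pipeline blocks
    }
    resumed = {
        "level": "pipeline",
        "name": name,
        "unique_id": resumed_id,
        "status": final_status,
        "is_resumed": True,    # signals continuation of a blocked pipeline
    }
    return blocked, resumed


blocked, resumed = split_blocked_execution("deploy", "P_1", "P_2", "success")
```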

Downstream pipelines

If a pipeline is triggered as a child of another pipeline, the parent_pipeline field should be populated with details of the parent pipeline.

Manual pipelines

If a pipeline is triggered manually, the is_manual field must be set to true.
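Trigger metadata for downstream and manual pipelines can be attached with a small helper such as the one below. The field names `parent_pipeline` and `is_manual` come from this guide; the inner `{"id": ...}` shape of `parent_pipeline` and the helper `with_trigger_info` are assumptions.

```python
def with_trigger_info(event, parent_id=None, manual=False):
    """Return a copy of `event` annotated with how it was triggered."""
    enriched = dict(event)  # leave the caller's dict untouched
    if parent_id is not None:
        enriched["parent_pipeline"] = {"id": parent_id}  # inner shape assumed
    if manual:
        enriched["is_manual"] = True
    return enriched


base = {"level": "pipeline", "name": "deploy", "unique_id": "P_7"}
downstream = with_trigger_info(base, parent_id="P_3")
manual = with_trigger_info(base, manual=True)
```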

Git information

Providing Git information of the commit that triggered the pipeline execution is strongly encouraged. Pipeline executions without Git information don’t appear on the Recent Code Changes page. At a minimum, the repository URL, commit SHA, and author email are required. For more information, see the public API endpoint specification.
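The minimum Git requirement can be verified before a payload is sent. In this sketch the key names `repository_url`, `sha`, and `author_email` are assumed stand-ins for the repository URL, commit SHA, and author email; confirm the real names against the public API endpoint specification.

```python
# Assumed key names for the three required pieces of Git information.
REQUIRED_GIT_FIELDS = ("repository_url", "sha", "author_email")


def missing_git_fields(git: dict) -> list:
    """Return the required Git fields that are absent or empty."""
    return [field for field in REQUIRED_GIT_FIELDS if not git.get(field)]


git_info = {
    "repository_url": "https://example.com/org/repo.git",
    "sha": "0123456789abcdef0123456789abcdef01234567",
    "author_email": "",  # empty values count as missing
}
missing = missing_git_fields(git_info)
```

An empty result means the execution carries the minimum information described above.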
