- Principales informations

- Getting Started

- Datadog

- Site Datadog

- DevSecOps

- Serverless for AWS Lambda

- Agent

- Intégrations

- Conteneurs

- Dashboards

- Monitors

- Logs

- Tracing

- Profileur

- Tags

- API

- Service Catalog

- Session Replay

- Continuous Testing

- Surveillance Synthetic

- Incident Management

- Database Monitoring

- Cloud Security Management

- Cloud SIEM

- Application Security Management

- Workflow Automation

- CI Visibility

- Test Visibility

- Intelligent Test Runner

- Code Analysis

- Learning Center

- Support

- Glossary

- Standard Attributes

- Guides

- Agent

- Intégrations

- OpenTelemetry

- Développeurs

- Authorization

- DogStatsD

- Checks custom

- Intégrations

- Create an Agent-based Integration

- Create an API Integration

- Create a Log Pipeline

- Integration Assets Reference

- Build a Marketplace Offering

- Create a Tile

- Create an Integration Dashboard

- Create a Recommended Monitor

- Create a Cloud SIEM Detection Rule

- OAuth for Integrations

- Install Agent Integration Developer Tool

- Checks de service

- IDE Plugins

- Communauté

- Guides

- API

- Application mobile

- CoScreen

- Cloudcraft

- In The App

- Dashboards

- Notebooks

- DDSQL Editor

- Alertes

- Infrastructure

- Métriques

- Watchdog

- Bits AI

- Service Catalog

- API Catalog

- Error Tracking

- Service Management

- Infrastructure

- Universal Service Monitoring

- Conteneurs

- Sans serveur

- Surveillance réseau

- Cloud Cost

- Application Performance

- APM

- Profileur en continu

- Database Monitoring

- Agent Integration Overhead

- Setup Architectures

- Configuration de Postgres

- Configuration de MySQL

- Configuration de SQL Server

- Setting Up Oracle

- Setting Up MongoDB

- Connecting DBM and Traces

- Données collectées

- Exploring Database Hosts

- Explorer les métriques de requête

- Explorer des échantillons de requêtes

- Dépannage

- Guides

- Data Streams Monitoring

- Data Jobs Monitoring

- Digital Experience

- RUM et Session Replay

- Product Analytics

- Surveillance Synthetic

- Continuous Testing

- Software Delivery

- CI Visibility

- CD Visibility

- Test Visibility

- Exécuteur de tests intelligent

- Code Analysis

- Quality Gates

- DORA Metrics

- Securité

- Security Overview

- Cloud SIEM

- Cloud Security Management

- Application Security Management

- AI Observability

- Log Management

- Pipelines d'observabilité

- Log Management

- Administration

Investigate Slow Traces or Endpoints

Cette page n'est pas encore disponible en français, sa traduction est en cours.

Si vous avez des questions ou des retours sur notre projet de traduction actuel, n'hésitez pas à nous contacter.

Si vous avez des questions ou des retours sur notre projet de traduction actuel, n'hésitez pas à nous contacter.

If your application is showing performance problems in production, integrating distributed tracing with code stack trace benchmarks from profiling is a powerful way to identify the performance bottlenecks. Application processes that have both APM distributed tracing and continuous profiler enabled are automatically linked.

You can move directly from span information to profiling data on the Code Hotspots tab, and find specific lines of code related to performance issues. Similarly, you can also debug slow and resource consuming endpoints directly in the Profiling UI.

Identify code hotspots in slow traces

Prerequisites

Code Hotspots identification is enabled by default when you turn on profiling for your Java service on Linux and macOS. The feature is not available on Windows.

For manually instrumented code, continuous profiler requires scope activation of spans:

final Span span = tracer.buildSpan("ServicehandlerSpan").start();

try (final Scope scope = tracer.activateSpan(span)) { // mandatory for Datadog continuous profiler to link with span

// worker thread impl

} finally {

// Step 3: Finish Span when work is complete

span.finish();

}

It's highly recommended to use the Datadog profiler instead of Java Flight Recorder (JFR).

Code Hotspots identification is enabled by default when you turn on profiling for your Python service.

Requires dd-trace-py version 0.44.0+.

To enable the timeline feature:

- Upgrade

dd-trace-pyto version 2.12.0+, 2.11.4+, or 2.10.7+. - Set environment variable

DD_PROFILING_TIMELINE_ENABLEDtotrue

Code Hotspots identification is enabled by default when you turn on profiling for your Ruby service.

The new timeline feature, including GC information, is enabled by default in dd-trace-rb 1.22.0+.

Code Hotspots identification is enabled by default when you turn on profiling for your Node.js service on Linux and macOS. The feature is not available on Windows.

Requires dd-trace-js version 5.0.0+, 4.24.0+ or 3.45.0+.

The new timeline feature is enabled by default in dd-trace-js 5.11.0+, 4.35.0+, and 3.56.0+.

Code Hotspots identification is enabled by default when you turn on profiling for your Go service.

To enable the new timeline feature, set the environment variables below:

os.Setenv("DD_PROFILING_EXECUTION_TRACE_ENABLED", "true")

os.Setenv("DD_PROFILING_EXECUTION_TRACE_PERIOD", "15m")

Setting these variables will record up to 1 minute (or 5 MiB) of execution tracing data every 15 minutes.

You can find this data:

- In the Profile List by adding

go_execution_traced:yesto your search query. Click on a profile to view the Profile Timeline. To go even deeper, download the profile and usego tool traceor gotraceui to view the containedgo.tracefiles. - In the Trace Explorer by adding

@go_execution_traced:yes(note the@) to your search query. Click on a span and then select theCode Hotspotstab to view the Span Timeline.

While recording execution traces, your application may observe an increase in CPU usage similar to a garbage collection. Although this should not have a significant impact for most applications, Go 1.21 includes patches to eliminate this overhead.

This capability requires dd-trace-go version 1.37.0+ (1.52.0+ for timeline view) and works best with Go version 1.18 or later (1.21 or later for timeline view).

Code Hotspots identification is enabled by default when you turn on profiling for your .NET service.

This capability requires dd-trace-dotnet version 2.30.0+.

Code Hotspots identification is enabled by default when you turn on profiling for your PHP service.

Requires dd-trace-php version 0.71+.

To enable the timeline feature:

- Upgrade to

dd-trace-phpversion 0.98+. - Set the environment variable

DD_PROFILING_TIMELINE_ENABLED=1or INI settingdatadog.profiling.timeline_enabled=1

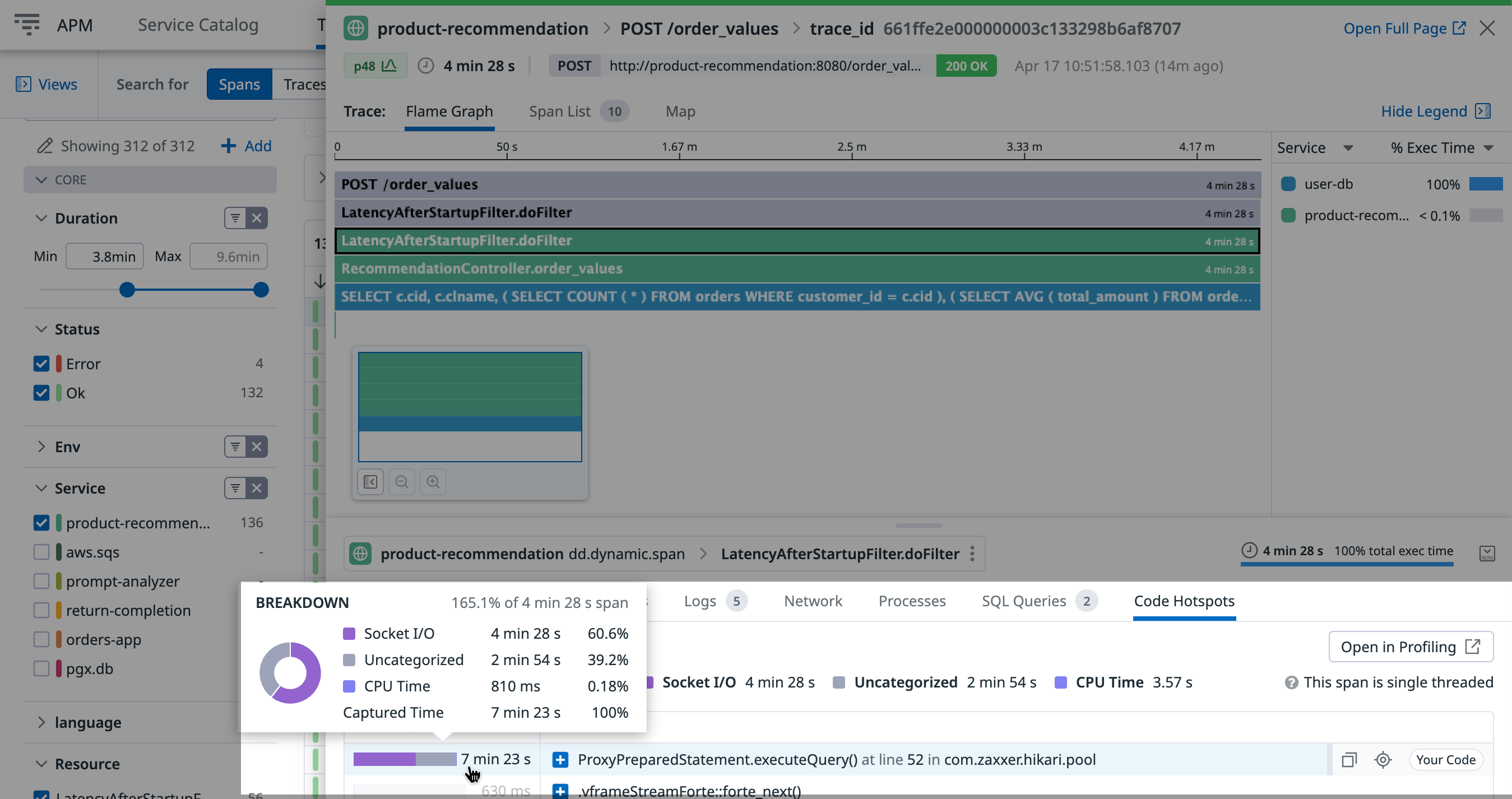

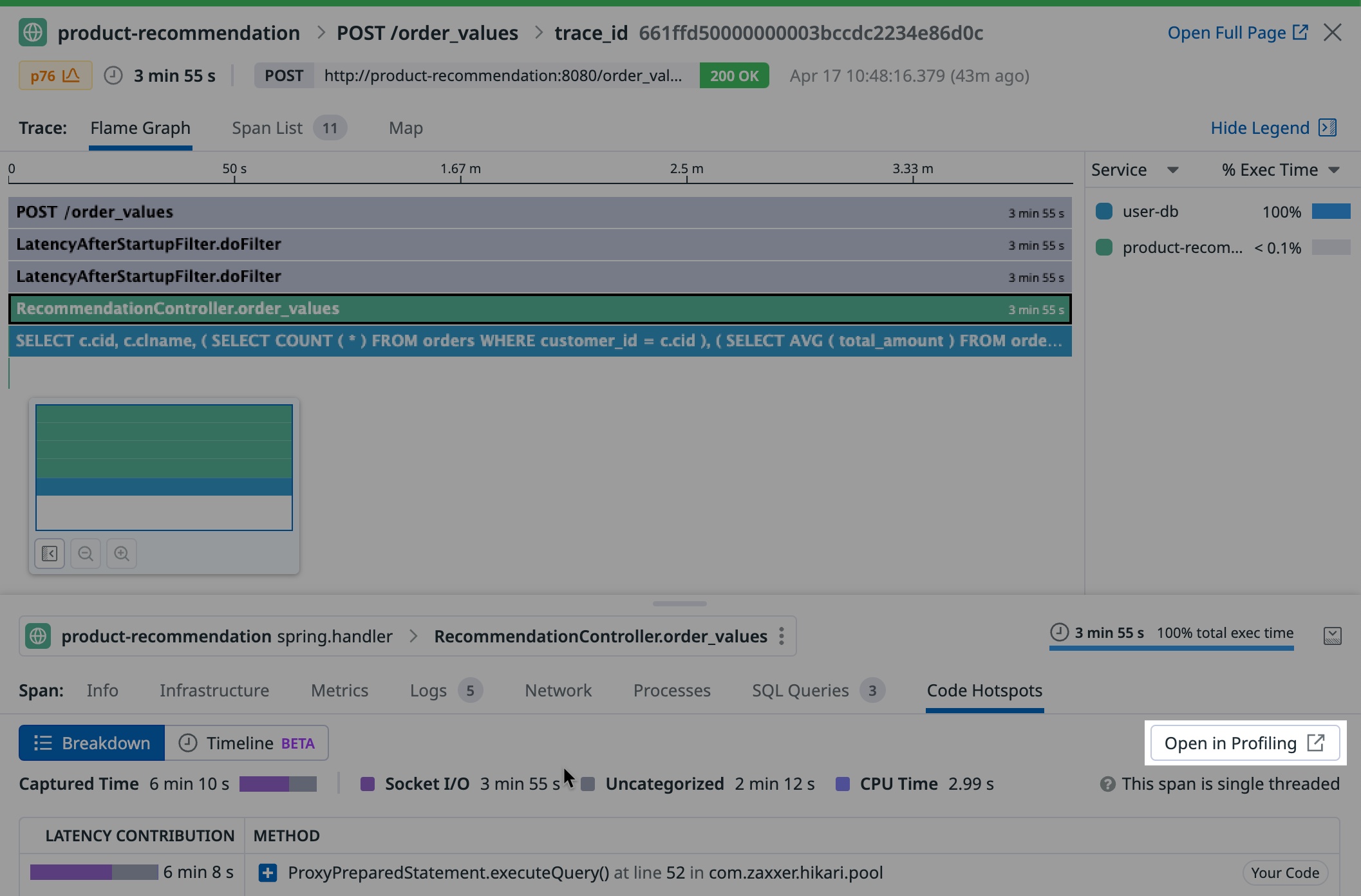

Span execution breakdown

From the view of each trace, the Code Hotspots tab highlights profiling data scoped on the selected spans.

The values on the left side represent the time spent in that method call during the selected span. Depending on the runtime and language, the categories vary:

- CPU shows the time taken executing CPU tasks.

- Synchronization shows the time spent waiting on monitors, the time a thread is sleeping and the time it is parked.

- VM operations shows the time taken waiting for VM operations (for example, garbage collections, compilation, safepoints, and heap dumps).

- File I/O shows the time taken waiting for a disk read/write operation to execute.

- Socket I/O shows the time taken waiting for a network read/write operation to execute.

- Monitor enter shows the time a thread is blocked on a lock.

- Uncategorized shows the time taken to execute the span that cannot be placed into one of the previous categories.

- CPU shows the time taken executing CPU tasks.

- Lock Wait shows the time a thread is blocked on a lock.

- Uncategorized shows the time taken to execute the span that cannot be placed into one of the previous categories.

- CPU shows the time taken executing CPU tasks.

- Uncategorized shows the time taken to execute the span that is not CPU execution.

- CPU shows the time taken executing CPU tasks.

- Uncategorized shows the time taken to execute the span that is not CPU execution.

- CPU shows the time taken executing CPU tasks.

- Off-CPU shows the time taken to execute the span that is not CPU execution.

- CPU shows the time taken executing CPU tasks.

- Lock Wait shows the time a thread is blocked on a lock.

- Uncategorized shows the time taken to execute the span that cannot be placed into one of the previous categories.

- CPU shows the time taken executing CPU tasks.

- Uncategorized shows the time taken to execute the span that is not CPU execution.

Click the plus icon + to expand the stack trace to that method in reverse order. Hover over the value to see the percentage of time explained by category.

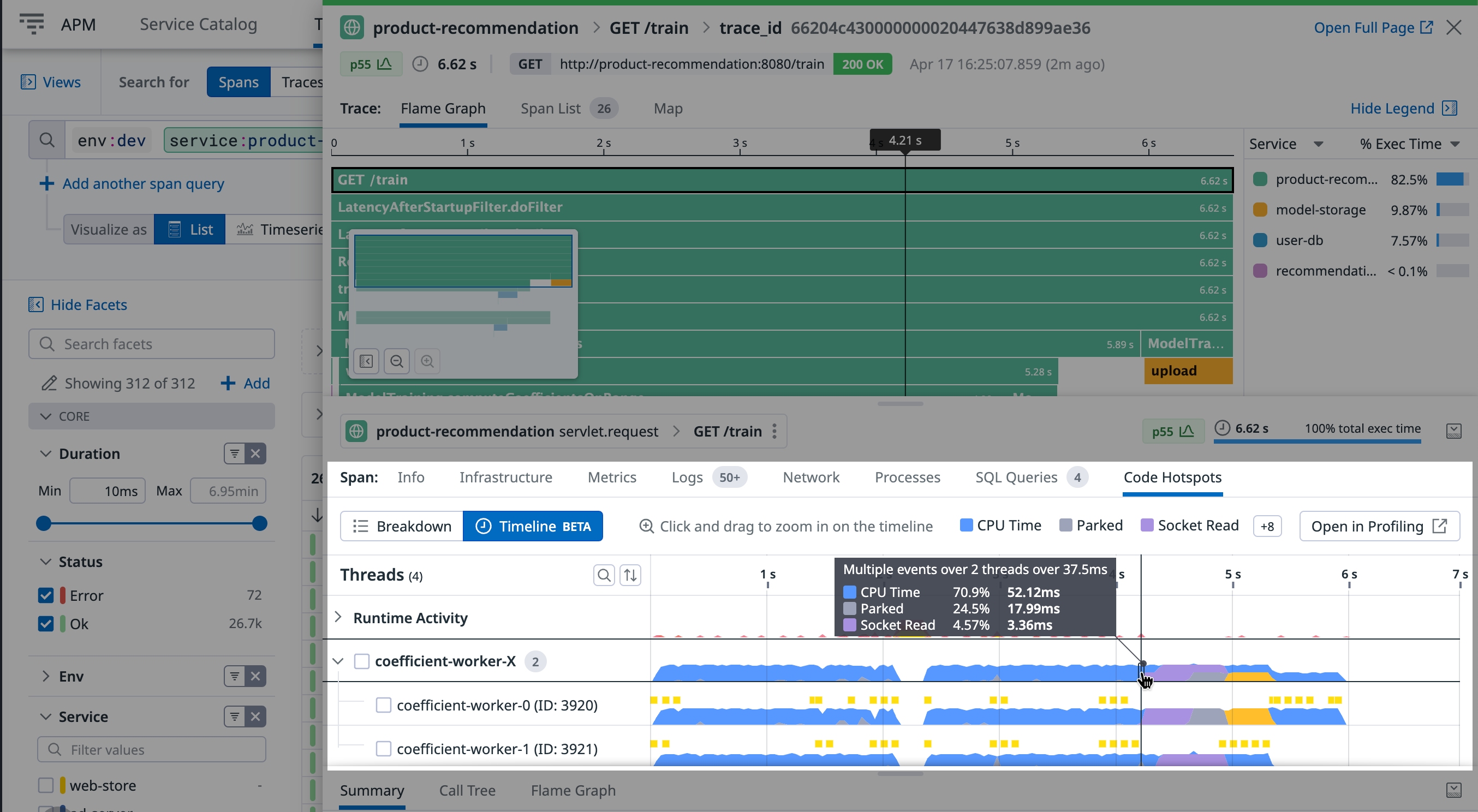

Span execution timeline view

The Timeline view surfaces time-based patterns and work distribution over the period of the span.

With the span Timeline view, you can:

- Isolate time-consuming methods.

- Sort out complex interactions between threads.

- Surface runtime activity that impacted the request.

Depending on the runtime and language, the lanes vary:

Each lane represents a thread. Threads from a common pool are grouped together. You can expand the pool to view details for each thread.

Lanes on top are runtime activities that may add extra latency. They can be unrelated to the request itself.

For additional information about debugging slow p95 requests or timeouts using the timeline, see the blog post Understanding Request Latency with Profiling.

See prerequisites to learn how to enable this feature for Python.

Each lane represents a thread. Threads from a common pool are grouped together. You can expand the pool to view details for each thread.

Each lane represents a goroutine. This includes the goroutine that started the selected span, as well as any goroutines it created and their descendants. Goroutines created by the same go statement are grouped together. You can expand the group to view details for each goroutine.

Lanes on top are runtime activities that may add extra latency. They can be unrelated to the request itself.

For additional information about debugging slow p95 requests or timeouts using the timeline, see the blog post Debug Go Request Latency with Datadog’s Profiling Timeline.

See prerequisites to learn how to enable this feature for Ruby.

Each lane represents a thread. Threads from a common pool are grouped together. You can expand the pool to view details for each thread.

Each lane represents a thread. Threads from a common pool are grouped together. You can expand the pool to view details for each thread.

Lanes on top are runtime activities that may add extra latency. They can be unrelated to the request itself.

See prerequisites to learn how to enable this feature for Node.js.

There is one lane for the JavaScript thread.

Lanes on the top are garbage collector runtime activities that may add extra latency to your request.

See prerequisites to learn how to enable this feature for PHP.

There is one lane for each PHP thread (in PHP NTS, this is only one lane). Fibers that run in this thread are represented in the same lane.

Lanes on the top are runtime activities that may add extra latency to your request, due to file compilation and garbage collection.

Viewing a profile from a trace

For each type from the breakdown, click Open in Profiling to see the same data opened up in a new page. From there, you can change the visualization to a flame graph. Click the Focus On selector to define the scope of the data:

- Span & Children scopes the profiling data to the selected span and all descendant spans in the same service.

- Span only scopes the profiling data to the previously selected span.

- Span time period scopes the profiling data to all threads during the time period the span was active.

- Full profile scopes the data to 60 seconds of the whole service process that executed the previously selected span.

Break down code performance by API endpoints

Prerequisites

Endpoint profiling is enabled by default when you turn on profiling for your Java service.

Requires using the Datadog profiler. JFR is not supported.

Endpoint profiling is enabled by default when you turn on profiling for your Python service.

Requires dd-trace-py version 0.54.0+.

Endpoint profiling is enabled by default when you turn on profiling for your Go service.

Requires dd-trace-go version 1.37.0+ and works best with Go version 1.18 or newer.

Endpoint profiling is enabled by default when you turn on profiling for your Ruby service.

Endpoint profiling is enabled by default when you turn on profiling for your Node.js service on Linux and macOS. The feature is not available on Windows.

Requires dd-trace-js version 5.0.0+, 4.24.0+ or 3.45.0+.

Endpoint profiling is enabled by default when you turn on profiling for your .NET service.

Requires dd-trace-dotnet version 2.15.0+.

Endpoint profiling is enabled by default when you turn on profiling for your PHP service.

Requires dd-trace-php version 0.79.0+.

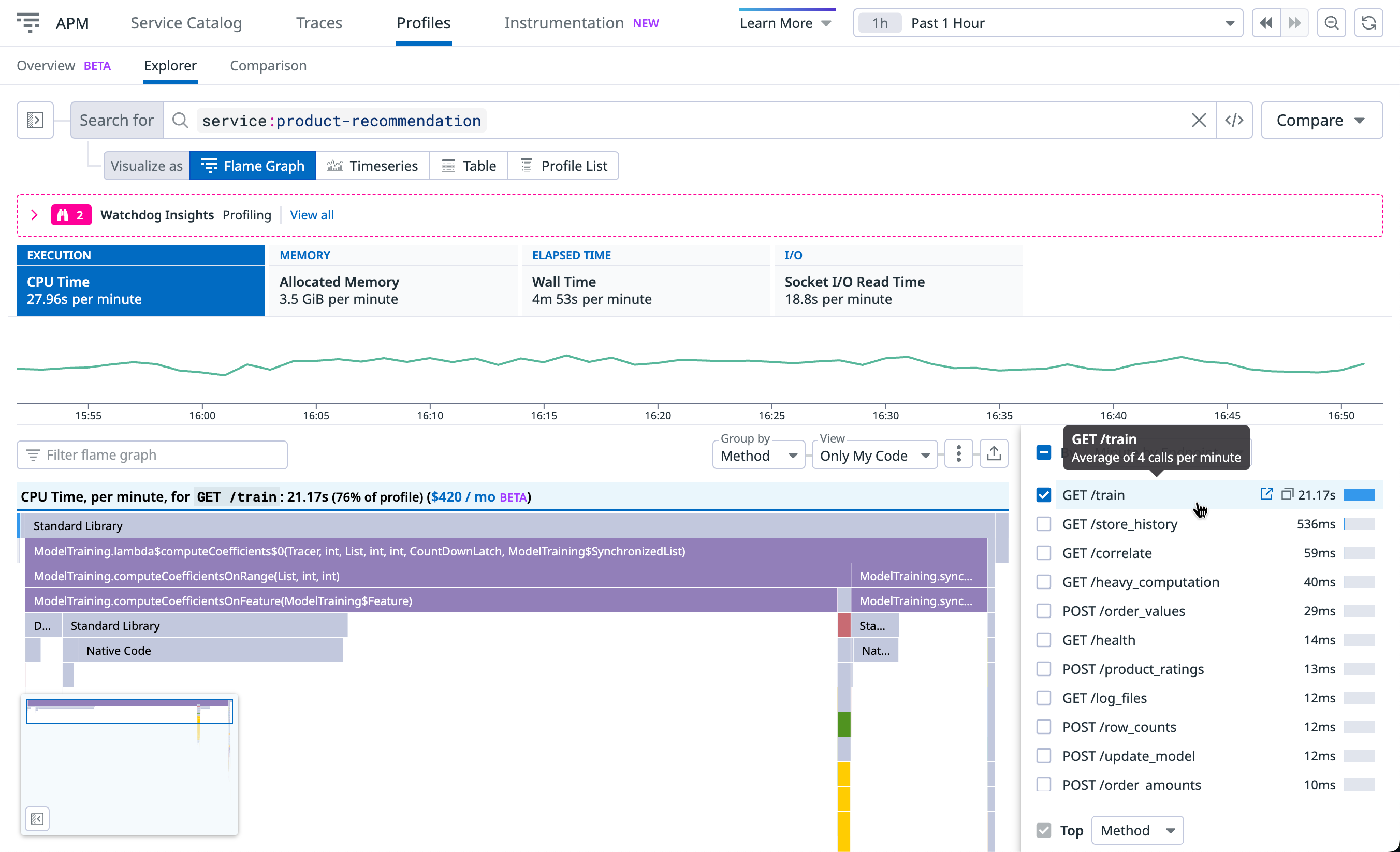

Endpoint profiling

Endpoint profiling allows you to scope your flame graphs by any endpoint of your web service to find endpoints that are slow, latency-heavy, and causing poor end-user experience. These endpoints can be tricky to debug and understand why they are slow. The slowness could be caused by an unintended large amount of resource consumption such as the endpoint consuming lots of CPU cycles.

With endpoint profiling you can:

- Identify the bottleneck methods that are slowing down your endpoint’s overall response time.

- Isolate the top endpoints responsible for the consumption of valuable resources such as CPU, memory, or exceptions. This is particularly helpful when you are generally trying to optimize your service for performance gains.

- Understand if third-party code or runtime libraries are the reason for your endpoints being slow or resource-consumption heavy.

Surface code that impacted your production latency

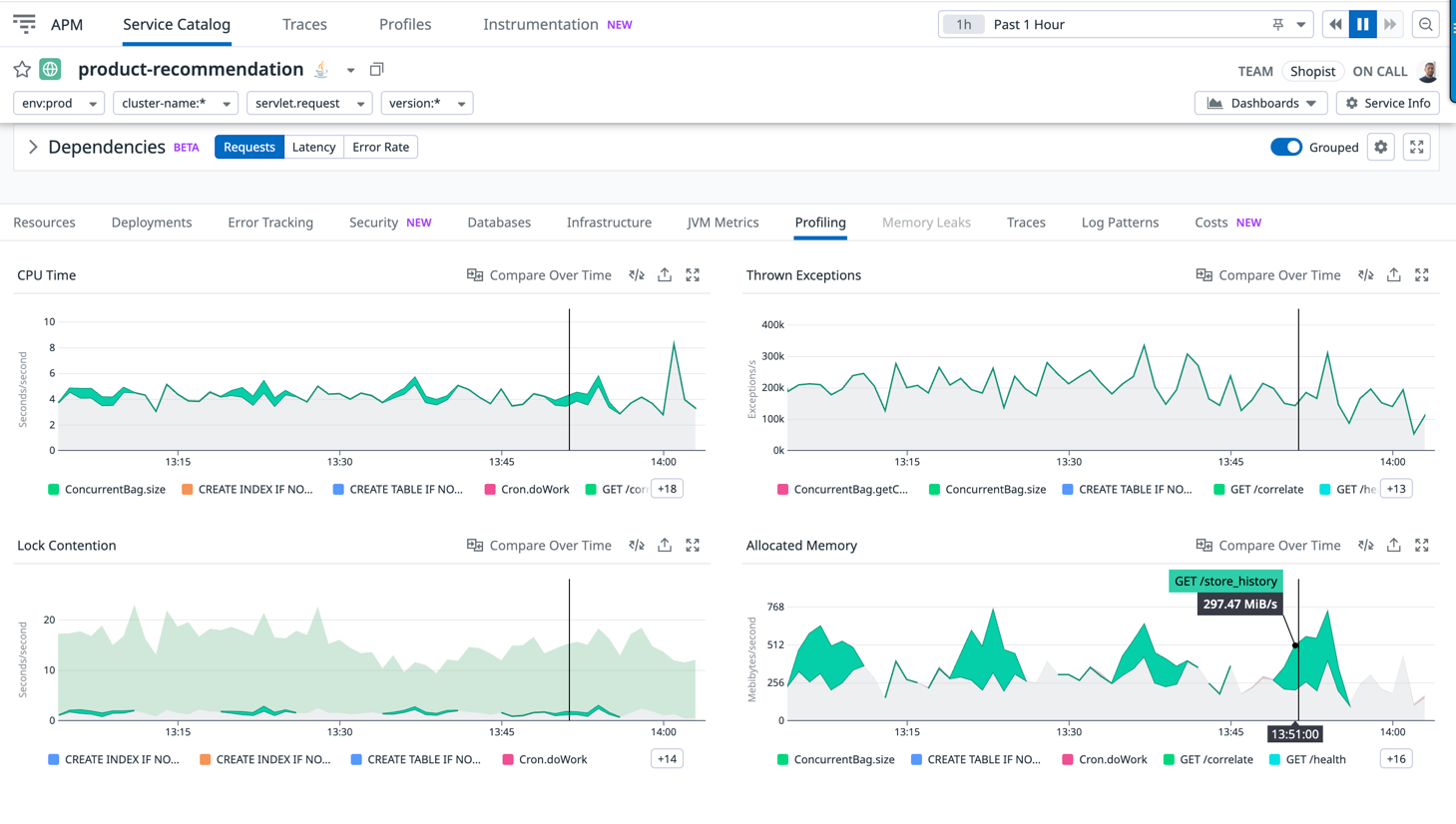

In the APM Service page, use the information in the Profiling tab to correlate a latency or throughput change to a code performance change.

In this example, you can see how latency is linked to a lock contention increase on /GET train that is caused by the following line of code:

Thread.sleep(DELAY_BY.minus(elapsed).toMillis());

Track endpoints that consume the most resources

It is valuable to track top endpoints that are consuming valuable resources such as CPU and wall time. The list can help you identify if your endpoints have regressed or if you have newly introduced endpoints that are consuming drastically more resources, slowing down your overall service.

The following image shows that GET /store_history is periodically impacting this service by consuming 20% of its CPU and 50% of its allocated memory:

Track average resource consumption per request

Select Per endpoint call to see behavior changes even as traffic shifts over time. This is useful for progressive rollout sanity checks or analyzing daily traffic patterns.

The following example shows that CPU per request increased for /GET train:

Further reading

Documentation, liens et articles supplémentaires utiles: