Logging Without Limits™ Guide

Overview

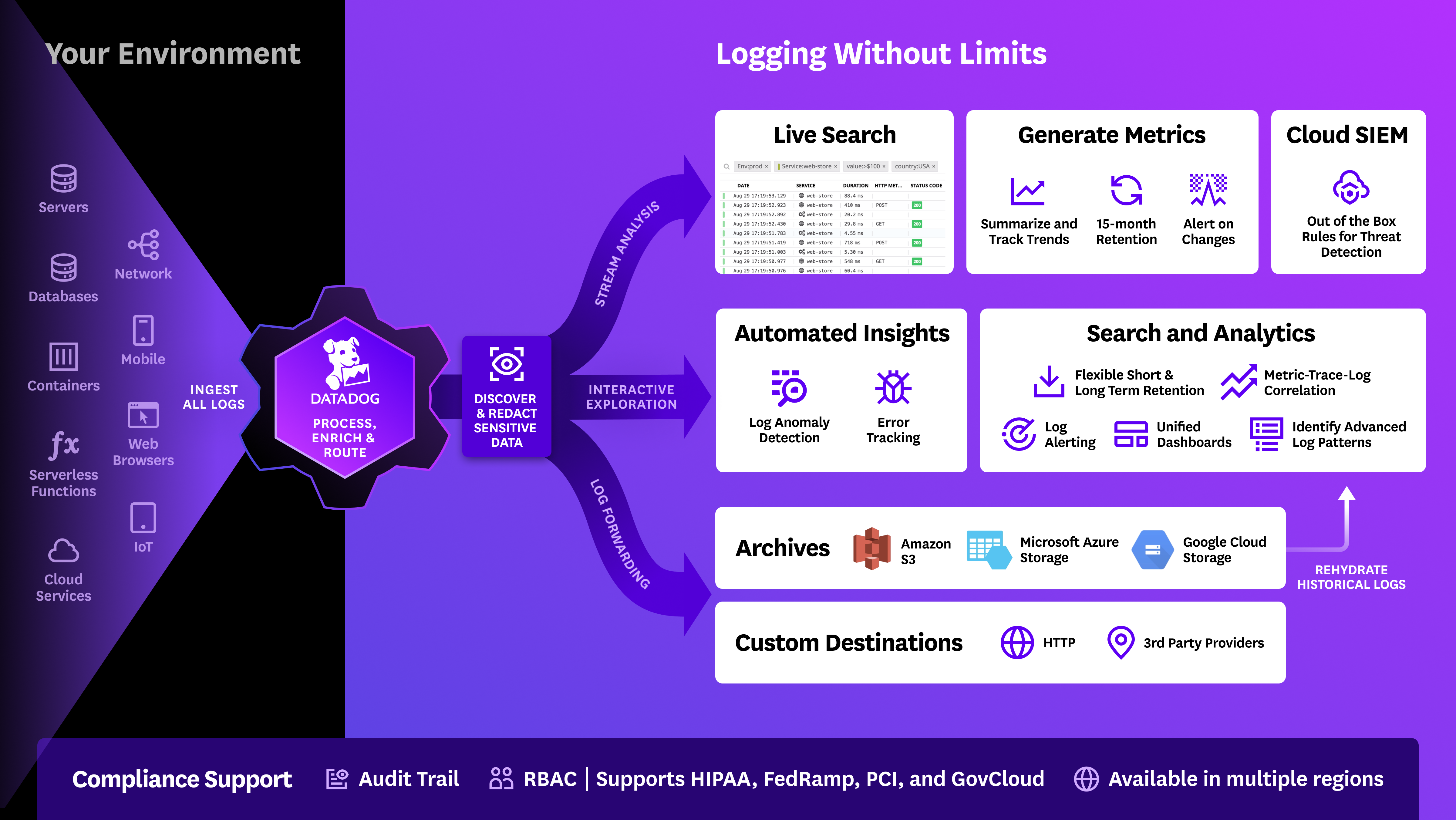

Cloud-based applications can generate logs at a rate of millions per minute. But because not all of your logs are equally valuable at any given moment, Datadog Logging Without Limits™ provides flexibility by decoupling log ingestion from indexing.

This guide identifies key components of Logging Without Limits™ such as Patterns, Exclusion Filters, Custom log-based metrics, and Monitors that can help you better organize Log Explorer and monitor your KPIs over time.

1. Identify your most logged service status

Your most logged service contains several logs, some of which may be irrelevant for troubleshooting. For example, you may want to investigate every 4xx and 5xx response code log, but exclude every 200 response code log from Log Explorer to expedite troubleshooting during a major outage or event. By identifying the corresponding service first, you can quickly track down which service status produces the most logs and is best to exclude from the Log Explorer view.

To identify your most logged service status:

- In Log Explorer, select graph view located next to the search bar.

- Below the search bar, set count * group by service and limit to top 10.

- Select Top List from the dropdown menu next to hide controls.

- Click on the first listed service and select search for in the populated menu. This generates a search, which is visible in the search bar above, based on your service facet.

- Switch group by service to group by status. This generates a top statuses list for your service.

- Click on the first listed status and select search for in the populated menu. This adds your status facet to the search.

Note: These steps apply to any high volume logging query to generate a top list. You can group by any facet, such as host or network.client.ip, instead of service or status.
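The drill-down above can be sketched locally as two grouped counts. This is only an illustration of the logic, assuming a small in-memory list of logs; `SAMPLE_LOGS` and `top_list` are hypothetical names and not part of any Datadog library.

```python
from collections import Counter

# Hypothetical sample of logs; real logs carry many more attributes.
SAMPLE_LOGS = [
    {"service": "web-store", "status": "info"},
    {"service": "web-store", "status": "info"},
    {"service": "web-store", "status": "error"},
    {"service": "email-api-py", "status": "info"},
    {"service": "web-store", "status": "info"},
    {"service": "email-api-py", "status": "warn"},
]


def top_list(logs, facet, limit=10):
    """Count logs per value of `facet` and return the `limit` largest groups."""
    counts = Counter(log[facet] for log in logs if facet in log)
    return counts.most_common(limit)


# First pass: find the most logged service.
top_service, _ = top_list(SAMPLE_LOGS, "service")[0]

# Second pass: restrict to that service, then group by status instead.
service_logs = [log for log in SAMPLE_LOGS if log["service"] == top_service]
top_status, _ = top_list(service_logs, "status")[0]

# The resulting pair mirrors the facets added to the search bar.
print(f"service:{top_service} status:{top_status}")
```

Swapping the facet name (for example, host) in either call gives the equivalent top list for a different grouping.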

2. Identify high volume logging patterns

Now that you have identified your most logged service status, switch to the patterns view, located next to the graph view in the top left of Log Explorer, to automatically see your log patterns for the selected context.

A context is composed of a time range and a search query. Each pattern comes with highlights to get you straight to its characteristic features. A mini graph displays a rough timeline for the volume of its logs to help you identify how that pattern differs from other patterns. Sections of logs that vary within the pattern are highlighted to help you quickly identify differences across log lines.

Click on the log pattern that you would like to exclude to see a sample of underlying logs.

The patterns view is helpful when identifying and filtering noisy patterns. It shows the number of logs matching a pattern, split by service and status. Click on the first pattern to view a detailed log of events relating to your status. A contextual panel is populated with information about your noisiest status pattern.
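To build intuition for what a pattern is, the sketch below groups messages by masking their variable parts. It is a deliberately naive stand-in that only masks digits, not the clustering Datadog actually uses; `MESSAGES` and `to_pattern` are hypothetical.

```python
import re
from collections import Counter

# Hypothetical log messages for illustration only.
MESSAGES = [
    "response code from ses 200",
    "response code from ses 200",
    "updating recommendations with customer_id 4521",
    "updating recommendations with customer_id 87",
    "response code from ses 500",
]


def to_pattern(message):
    """Replace every run of digits with a wildcard so variable parts collapse."""
    return re.sub(r"\d+", "*", message)


# Count how many messages fall under each masked pattern, noisiest first.
pattern_counts = Counter(to_pattern(m) for m in MESSAGES)
for pattern, count in pattern_counts.most_common():
    print(count, pattern)
```

The noisiest pattern appears first, which is the one you would typically inspect before deciding what to exclude.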

3. Create a log pattern exclusion filter

The pattern context panel lists every instance (event) of a log pattern and creates a custom search query based on the selected pattern. Use this query in an exclusion filter to remove those logs from your index.

To create an exclusion filter:

- Click on a pattern from the pattern view list.

- Click the Add Exclusion Filter button in the top right corner. This button is disabled if fewer than half of the logs in this pattern fall into a single index.

- The Log Index Configuration page opens in a new tab with a pre-filled exclusion filter for the index where the majority of the logs for that pattern show up.

- The exclusion filter is populated with an automatically generated search query associated with the pattern. Input the filter name and set an exclusion percentage and then save the new exclusion filter.

Note: If a log matches several exclusion filters, only the first exclusion filter rule is applied. A log is not sampled or excluded multiple times by different exclusion filters.

In this example, the service email-api-py, status INFO pattern response code from ses 200 is filtered with an exclusion filter. Removing any high volume logging pattern similar to this one from Log Explorer helps you reduce noise and identify issues more quickly. However, these logs are only excluded from indexing. They are still ingested, remain available to view in Live Tail, and can be sent to log archives or used to generate metrics.

Exclusion filters can be disabled at any time by toggling the disable option to the right of the filter. They can also be modified and removed by hovering over the filter and selecting the edit or delete option.
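The first-match rule and the exclusion percentage can be illustrated with a small simulation. This is a sketch under stated assumptions: the `match` predicates stand in for real search queries, and `FILTERS`, `is_indexed`, and `exclusion_pct` are hypothetical names, not Datadog configuration keys.

```python
import random

# Hypothetical filters, evaluated in order. `exclusion_pct` is the share of
# matching logs dropped from the index (100 drops all of them).
FILTERS = [
    {
        "name": "ses-200",
        "match": lambda log: log["status"] == "info" and "ses 200" in log["message"],
        "exclusion_pct": 100,
    },
    {
        "name": "all-info",
        "match": lambda log: log["status"] == "info",
        "exclusion_pct": 50,
    },
]


def is_indexed(log, filters, rng=random.random):
    """Return True if the log is kept in the index.

    Only the first matching filter is consulted; later filters never see the log.
    """
    for f in filters:
        if f["match"](log):
            # rng() is in [0, 1), so 100 percent always excludes.
            return rng() * 100 >= f["exclusion_pct"]
    return True  # no filter matched, so the log is indexed


log = {"status": "info", "message": "response code from ses 200"}
print(is_indexed(log, FILTERS))
```

Because the log above matches the first filter, the second filter's 50 percent sampling is never applied to it, which is why filter order matters.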

4. Generate metrics to track excluded logs

Once a log pattern is excluded from Log Explorer, you can still track KPIs over time at the ingest level by creating a new custom log-based metric.

Add a new log-based metric

To generate a new log-based metric based on your log pattern:

- Navigate to the Generate Metrics page.

- Click New Metric in the top right corner.

- Enter a name for your metric. Log-based metric names must follow the metric naming convention.

- Under Define Query, input the search query you copied and pasted into the pattern exclusion filter. For example, as per the example above: service:web-store status:info "updating recommendations with customer_id" "url shops".

- Select the field you would like to track: Select * to generate a count of all logs matching your query or enter a measure (for example, @duration) to aggregate a numeric value and create its corresponding count, min, max, sum, and avg aggregated metrics.

- Add dimensions to group: Select log attributes or tag keys to apply to the generated log-based metric to transform them into tags following the <KEY>:<VALUE> format. Log-based metrics are considered custom metrics. Avoid grouping by unbounded or extremely high cardinality attributes like timestamps, user IDs, request IDs, or session IDs to avoid negatively impacting your billing.
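To see why high cardinality dimensions are risky, the sketch below counts how many distinct tag value combinations a choice of dimensions would produce, since each combination becomes its own series. The sample data, the `request_id` attribute, and `distinct_tag_combinations` are hypothetical and only illustrate the growth.

```python
def distinct_tag_combinations(logs, dimensions):
    """Count unique value combinations across the chosen group-by dimensions."""
    return len({tuple(log.get(d) for d in dimensions) for log in logs})


# Hypothetical logs: one service and status, but a unique request ID per log.
LOGS = [
    {"service": "web-store", "status": "info", "request_id": f"req-{i}"}
    for i in range(1000)
]

# Bounded dimensions stay small no matter how many logs arrive.
print(distinct_tag_combinations(LOGS, ["service", "status"]))

# An unbounded dimension grows with log volume.
print(distinct_tag_combinations(LOGS, ["service", "request_id"]))
```

Running a check like this against a sample of your own logs before adding a dimension gives a rough sense of how many series the metric would create.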

Create an anomaly detection monitor

Anomaly detection is an algorithmic feature that identifies when a metric is behaving differently than it has in the past. Creating an anomaly detection monitor for your excluded logs alerts you of any changes based on your set alert conditions.

To set an anomaly detection monitor:

- Navigate to the New Monitor page.

- Select Anomaly.

- Enter the log-based metric you defined in the previous section.

- Set the alert conditions and add any additional information needed to alert yourself and/or your team of what’s happening.

- Click Create.

When an anomaly is detected, an alert is sent to everyone who is tagged. This alert can also be found on the Triggered Monitors page.
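As a rough intuition for what "behaving differently than it has in the past" means, the sketch below flags points that deviate strongly from a trailing window. It is a simplified z-score check, not the algorithm Datadog monitors use; `find_anomalies`, `window`, and `threshold` are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes whose value deviates from the trailing-window mean
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Hypothetical per-minute counts of the excluded log pattern.
counts = [100, 102, 98, 101, 99, 100, 400, 101]
print(find_anomalies(counts))
```

A sudden jump in the count of an excluded pattern is exactly the kind of change you would otherwise miss once those logs no longer appear in Log Explorer.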

Review

In this guide, you learned how to use Logging Without Limits™ to:

- Identify your most logged service status

- Identify high volume logging patterns

- Create a log pattern exclusion filter

- Generate metrics to track excluded logs

To learn more about Logging Without Limits™ and how to better utilize features like Log Explorer, Live Tail, and Log Patterns, view the links below.

Further Reading

Additional helpful documentation, links, and articles: