Create a Dashboard to track and correlate APM metrics

4 minutes to complete

Datadog APM allows you to create dashboards based on your business priorities and the metrics that matter to you. You can add widgets to these dashboards to track traditional infrastructure, log, and custom metrics, such as host memory usage, alongside critical APM metrics based on throughput, latency, and error rate, so that you can correlate them. You can also track the latency experienced by your top customers or largest transactions and, alongside it, keep track of the throughput of your main web server ahead of major events like Black Friday.

This guide walks you through adding trace metrics to a dashboard, correlating them with infrastructure metrics, and exporting an Analytics query. It covers adding widgets to the dashboard in three ways:

- Copying an existing APM graph (Steps 1, 2, and 3)

- Creating it manually (Steps 4 and 5)

- Exporting an Analytics query (Step 7)

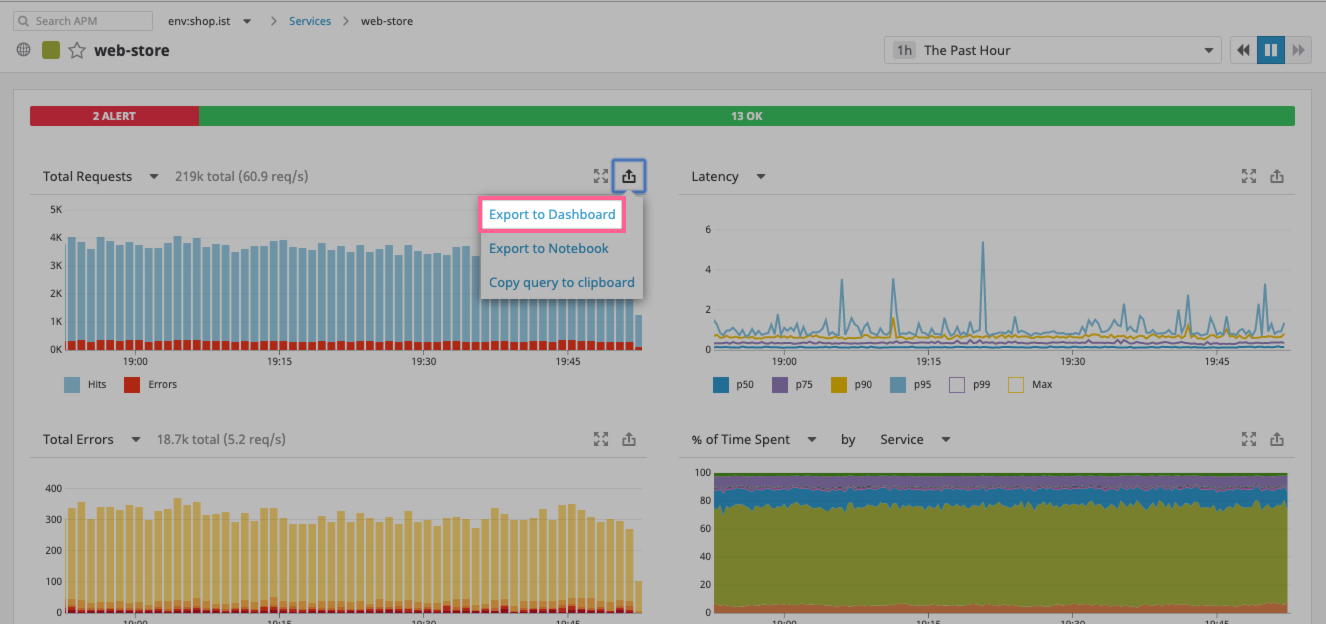

Open the Service Catalog and choose the

web-storeservice.Find the Total Requests Graph and click on the

exportbutton on the top right to chooseExport to Dashboard. ClickNew Timeboard.Click on

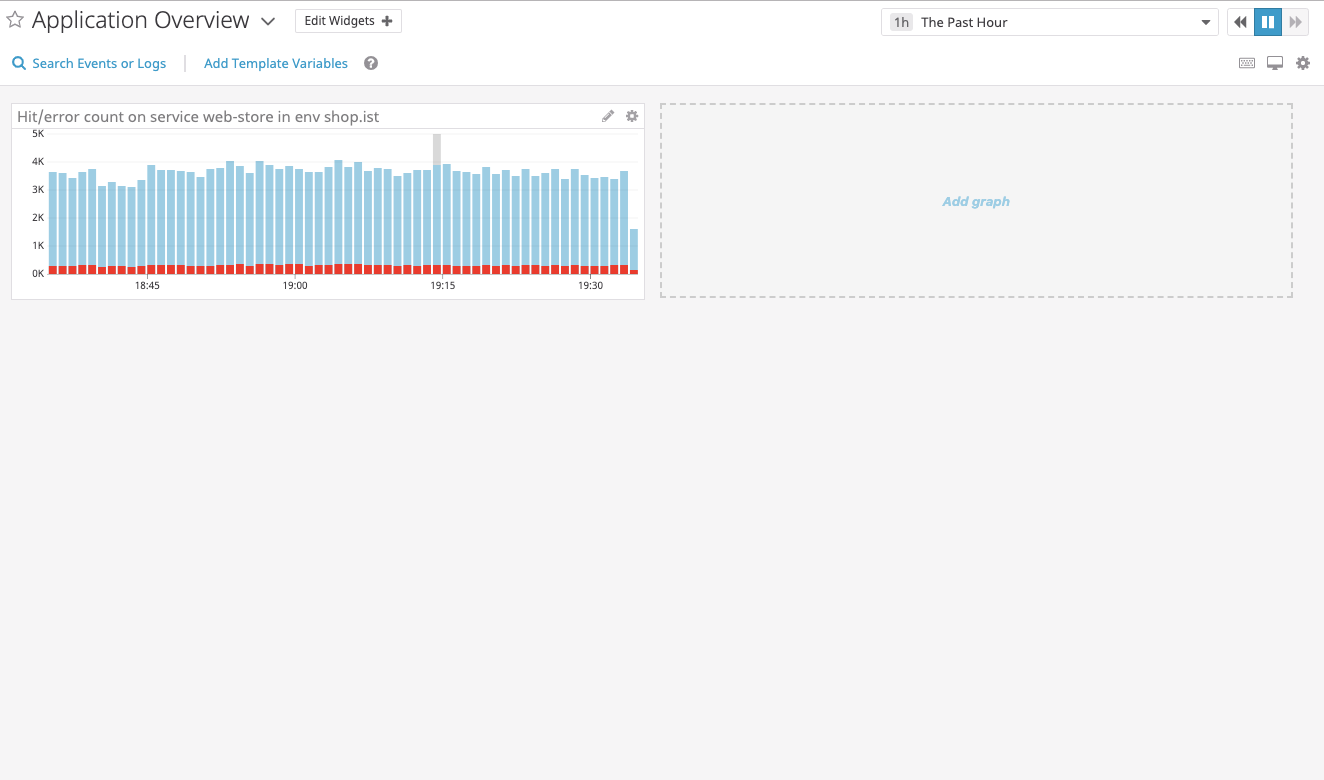

View Dashboardin the success message.In the new dashboard, the

Hit/error count on servicegraph for theweb-storeservice is now available. It shows the entire throughput of this service as well as its total amount of errors.Note: You can click on the pencil icon to edit this graph and see what precise metrics are being used.

Click on the

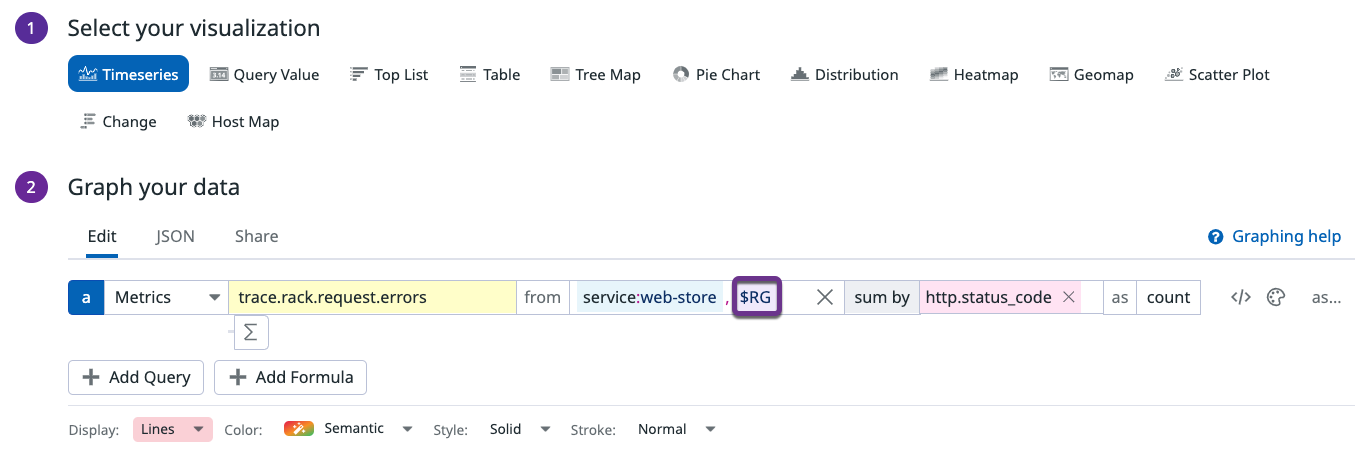

Add graphplaceholder tile on the dashboard space and then Drag aTimeseriesto this space.This is the dashboard widget edit screen. It empowers you to create any type of visualization across all of the metrics available to you. See the Timeseries widget documentation to learn more.

5. Click the `system.cpu.user` box and choose the metric and parameters relevant to you. In this example:

   | Parameter | Value | Description |
   |-----------|-------|-------------|
   | `metric` | `trace.rack.requests.errors` | The Ruby Rack total set of erroneous requests. |
   | `from` | `service:web-store` | The main service in this example stack. It is a Ruby service, and all the information in the chart will come from it. |
   | `sum by` | `http.status_code` | Breaks down the chart by HTTP status code. This specific breakdown is just one example of the many you can choose. |

   Note that any metric that starts with `trace.` contains APM information. See the APM metric documentation to learn more.

6. Drag another timeseries to the placeholder tile.

   In this example, two different types of metrics are added to one graph: a `trace.*` metric and a `runtime.*` metric. Combined, these metrics let you correlate requests with code runtime performance. Specifically, the latency of a service is displayed next to the thread count, because latency spikes might be associated with an increase in the thread count.

   First, add the `trace.rack.request.duration.by.service.99p` metric to the widget:

   | Parameter | Value | Description |
   |-----------|-------|-------------|
   | `metric` | `trace.rack.request.duration.by.service.99p` | The 99th percentile of latency of requests in our service. |
   | `from` | `service:web-store` | The main service in this example stack. It is a Ruby service, and all the information in the chart will come from it. |

   Then click **Graph additional: Metrics** to add another metric to the chart:

   | Parameter | Value | Description |
   |-----------|-------|-------------|
   | `metric` | `runtime.ruby.thread_count` | Thread count taken from the Ruby runtime metrics. |
   | `from` | `service:web-store` | The main service in this example stack. It is a Ruby service, and all the information in the chart will come from it. |

   This setup can show whether a spike in latency is associated with a spike in the Ruby thread count, which helps point to a likely cause of the latency and speeds up resolution.
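The two manually created widgets above can also be written down as metric query strings. The following Python sketch only builds those strings and a widget-shaped dictionary locally; it does not call Datadog. The helper names `metric_query` and `timeseries_widget`, the widget titles, and the `avg` aggregator used for the latency and thread count queries are assumptions made for illustration, and the dictionary layout is modeled on the timeseries widget JSON rather than copied from this guide.

```python
import json


def metric_query(aggregator, metric, scope, group_by=None):
    """Build a query string such as 'sum:some.metric{tag:value} by {tag}'."""
    query = f"{aggregator}:{metric}{{{scope}}}"
    if group_by:
        query += f" by {{{group_by}}}"
    return query


def timeseries_widget(title, queries):
    """Return a timeseries widget definition with one request per query."""
    return {
        "definition": {
            "type": "timeseries",
            "title": title,
            "requests": [{"q": q, "display_type": "line"} for q in queries],
        }
    }


# Erroneous Rack requests for web-store, broken down by HTTP status code.
errors_query = metric_query(
    "sum", "trace.rack.requests.errors", "service:web-store", "http.status_code"
)

# p99 latency next to the Ruby thread count (the "avg" aggregator is assumed).
latency_query = metric_query(
    "avg", "trace.rack.request.duration.by.service.99p", "service:web-store"
)
threads_query = metric_query("avg", "runtime.ruby.thread_count", "service:web-store")

widgets = [
    timeseries_widget("web-store errors by status code", [errors_query]),
    timeseries_widget(
        "web-store p99 latency vs. thread count", [latency_query, threads_query]
    ),
]

if __name__ == "__main__":
    print(json.dumps(widgets, indent=2))
```

Clicking the pencil icon on a widget and switching to its JSON view is a quick way to compare a sketch like this with what the dashboard actually stores.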

7. Go to Analytics.

   This example shows how to query latency across the example application: break it down by the merchants on the platform and view the top 10 merchants with the highest latency. From the Analytics screen, export the graph to the dashboard and view it there.
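To make the "top 10 merchants by latency" breakdown concrete, here is a minimal local sketch of the same computation over a handful of span records. It is not how Analytics is implemented. The `merchant` and `duration_s` field names, the sample values, and the choice of average duration as the latency measure are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def top_merchants_by_latency(spans, limit=10):
    """Return (merchant, average duration in seconds) pairs, slowest first."""
    durations = defaultdict(list)
    for span in spans:
        durations[span["merchant"]].append(span["duration_s"])
    averages = {merchant: mean(values) for merchant, values in durations.items()}
    ranked = sorted(averages.items(), key=lambda item: item[1], reverse=True)
    return ranked[:limit]


# Hypothetical sample data; real spans would come from your traced application.
sample_spans = [
    {"merchant": "acme", "duration_s": 0.5},
    {"merchant": "acme", "duration_s": 1.5},
    {"merchant": "globex", "duration_s": 0.25},
    {"merchant": "initech", "duration_s": 2.0},
]

top = top_merchants_by_latency(sample_spans, limit=2)
```

With this sample data, `top` holds `initech` first (2.0 seconds) and `acme` second (1.0 seconds on average), while `globex` falls outside the limit of 2.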

8. Return to your dashboard.

   Multiple widgets are now visible, providing observability into the example application from both a technical and a business perspective. This is only the start of what you can do: add infrastructure metrics, use multiple types of visualizations, and add calculations and projections.

9. With the dashboard, you can also explore related events.

   Click the **Search Events or Logs** button and add a search for a relevant event explorer. Note: In this example Ansible is used; your event explorer might be different.

   Here, alongside the dashboard, you can see recent events that have happened in Datadog or in external services like Ansible and Chef, such as deployments, task completions, or monitor alerts. You can then correlate these events with the metrics set up in the dashboard.

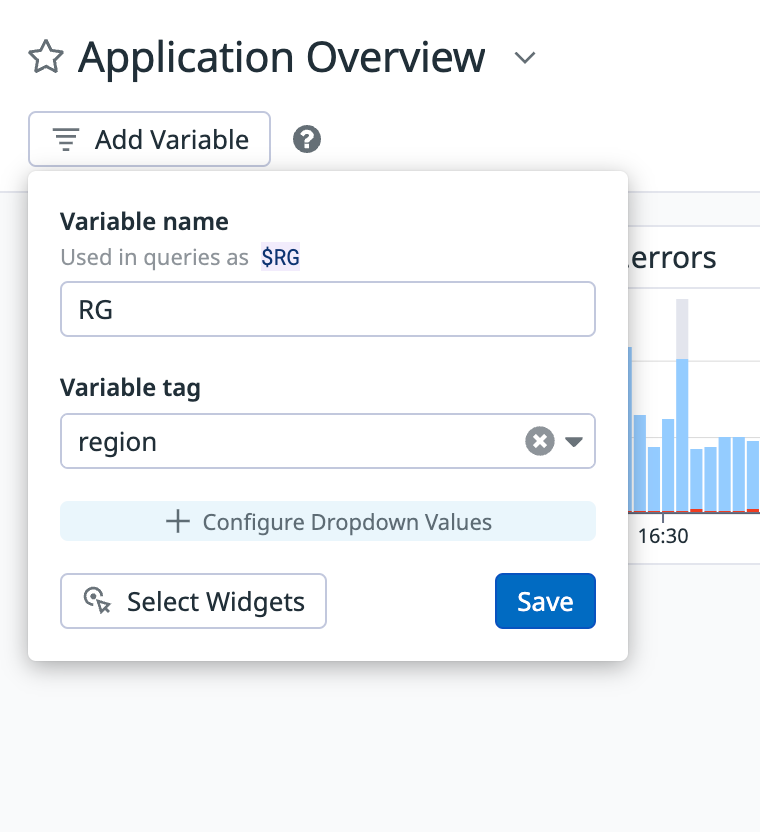

10. Finally, make sure to use template variables. These are a set of values that dynamically control the widgets on a dashboard, and every user can use them without editing the widgets themselves. For more information, see the Template Variable documentation.

    Click **Add Variable** in the header. Choose the tag that the variable controls, and configure its name, default value, or available values.

    In this example, a template variable for `Region` is added to see how the dashboard behaves across `us-east1` and `europe-west-4`, our two primary areas of operation. You can now add this template variable to each of the graphs.

    When you select template variable values, all values update in the applicable widgets of the dashboard.
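As a rough illustration of how a template variable scopes a widget, the sketch below describes a `region` variable and simulates locally how a selected value replaces the `$region` placeholder in a query. The helper names `template_variable` and `resolve` are hypothetical, the `name`, `prefix`, `default`, and `available_values` keys are modeled on the dashboard JSON, and the substitution is a simplification for illustration, not Datadog's own logic.

```python
def template_variable(name, tag, default="*", available_values=None):
    """Describe a template variable that controls the given tag."""
    variable = {"name": name, "prefix": tag, "default": default}
    if available_values:
        variable["available_values"] = list(available_values)
    return variable


def resolve(query, variables, selections):
    """Simulate locally how a selected value replaces each $name placeholder."""
    for variable in variables:
        value = selections.get(variable["name"], variable["default"])
        query = query.replace(
            f"${variable['name']}", f"{variable['prefix']}:{value}"
        )
    return query


variables = [
    template_variable(
        "region", "region", available_values=["us-east1", "europe-west-4"]
    )
]

# The widget query references the variable instead of a fixed region tag.
query = "avg:trace.rack.request.duration.by.service.99p{service:web-store,$region}"

east_query = resolve(query, variables, {"region": "us-east1"})
west_query = resolve(query, variables, {"region": "europe-west-4"})
```

Because every widget query references `$region` rather than a hard-coded tag, changing the selection once is enough to rescope all of them.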

Be sure to explore all the metrics available to you and take full advantage of Datadog's three pillars of observability. You can turn this basic dashboard into a one-stop shop for monitoring and observability in your organization.
