- Essentials

- Getting Started

- Datadog

- Datadog Site

- DevSecOps

- Serverless for AWS Lambda

- Agent

- Integrations

- Containers

- Dashboards

- Monitors

- Logs

- APM Tracing

- Profiler

- Tags

- API

- Service Catalog

- Session Replay

- Continuous Testing

- Synthetic Monitoring

- Incident Management

- Database Monitoring

- Cloud Security Management

- Cloud SIEM

- Application Security Management

- Workflow Automation

- CI Visibility

- Test Visibility

- Test Impact Analysis

- Code Analysis

- Learning Center

- Support

- Glossary

- Standard Attributes

- Guides

- Agent

- Integrations

- OpenTelemetry

- Developers

- Authorization

- DogStatsD

- Custom Checks

- Integrations

- Create an Agent-based Integration

- Create an API Integration

- Create a Log Pipeline

- Integration Assets Reference

- Build a Marketplace Offering

- Create a Tile

- Create an Integration Dashboard

- Create a Recommended Monitor

- Create a Cloud SIEM Detection Rule

- OAuth for Integrations

- Install Agent Integration Developer Tool

- Service Checks

- IDE Plugins

- Community

- Guides

- API

- Datadog Mobile App

- CoScreen

- Cloudcraft

- In The App

- Dashboards

- Notebooks

- DDSQL Editor

- Sheets

- Monitors and Alerting

- Infrastructure

- Metrics

- Watchdog

- Bits AI

- Service Catalog

- API Catalog

- Error Tracking

- Service Management

- Infrastructure

- Application Performance

- APM

- Continuous Profiler

- Database Monitoring

- Data Streams Monitoring

- Data Jobs Monitoring

- Digital Experience

- Real User Monitoring

- Product Analytics

- Synthetic Testing and Monitoring

- Continuous Testing

- Software Delivery

- CI Visibility

- CD Visibility

- Test Optimization

- Code Analysis

- Quality Gates

- DORA Metrics

- Security

- Security Overview

- Cloud SIEM

- Cloud Security Management

- Application Security Management

- AI Observability

- Log Management

- Observability Pipelines

- Log Management

- Administration

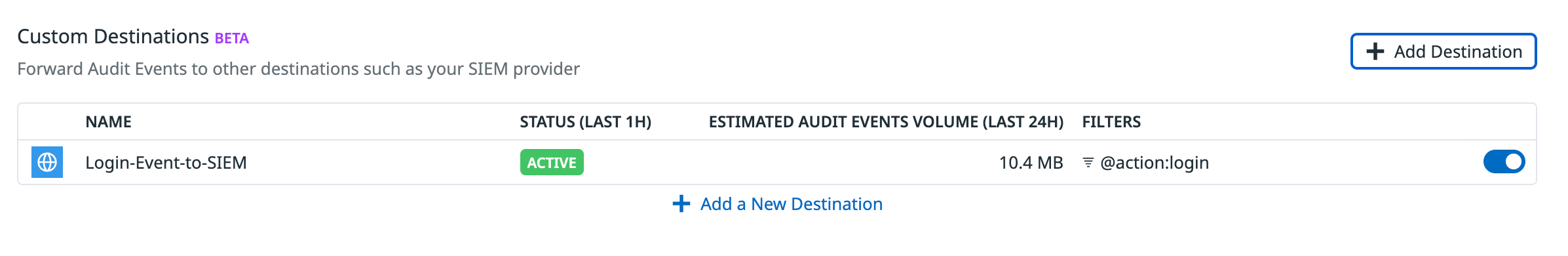

Forwarding Audit Events to Custom Destinations

Audit Event Forwarding is not available in the US1-FED site.

Audit Event Forwarding is in beta.

Overview

Audit Event Forwarding allows you to send audit events from Datadog to custom destinations like Splunk, Elasticsearch, and HTTP endpoints. Audit events are forwarded in JSON format. You can add up to three destinations for each Datadog org.

Note: Only Datadog users with the audit_trail_write permission can create, edit, or delete custom destinations for forwarding audit events.

Set up audit event forwarding to custom destinations

- Add webhook IPs from the IP ranges list to the allowlist if necessary.

- Navigate to Audit Trail Settings.

- Click Add Destination in the Audit Event Forwarding section.

- Enter the query to filter your audit events for forwarding. For example, add `@action:login` as the query if you only want to forward login events to your SIEM or custom destination. See Search Syntax for more information.

- Select the Destination Type (HTTP, Splunk, or Elasticsearch), then follow the steps for that type below.

HTTP

- Enter a name for the destination.

- In the Define endpoint field, enter the endpoint to which you want to send the logs. The endpoint must start with `https://`. For example, if you want to send logs to Sumo Logic, follow their Configure HTTP Source for Logs and Metrics documentation to get the HTTP Source Address URL to send data to their collector. Enter the HTTP Source Address URL in the Define endpoint field.

- In the Configure Authentication section, select one of the following authentication types and provide the relevant details:

- Basic Authentication: Provide the username and password for the account to which you want to send logs.

- Request Header: Provide the header name and value. For example, if you use the Authorization header, and the username for the account to which you want to send logs is `myaccount` and the password is `mypassword`:

  - Enter `Authorization` for the Header Name.

  - The header value is in the format of `Basic username:password`, where `username:password` is encoded in base64. For this example, the header value is `Basic bXlhY2NvdW50Om15cGFzc3dvcmQ=`.

- Click Save.
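The base64 step in the Request Header option can be reproduced with a few lines of Python instead of encoding the credentials by hand. This is a minimal sketch; `basic_auth_header` and `is_authorized` are hypothetical helper names, not part of any Datadog API. `is_authorized` assumes you run your own HTTP receiver and want it to check the header on incoming requests.

```python
import base64
import hmac


def basic_auth_header(username: str, password: str) -> str:
    """Hypothetical helper: build the 'Basic <base64(username:password)>' header value."""
    credentials = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def is_authorized(received_header: str, username: str, password: str) -> bool:
    """Hypothetical receiver-side check that a request carries the expected credentials."""
    expected = basic_auth_header(username, password)
    # compare_digest compares in constant time, so the check does not leak timing information
    return hmac.compare_digest(received_header.encode("utf-8"), expected.encode("utf-8"))


print(basic_auth_header("myaccount", "mypassword"))  # Basic bXlhY2NvdW50Om15cGFzc3dvcmQ=
```

The printed value matches the example header value given for `myaccount` and `mypassword`.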

Splunk

- Enter a name for the destination.

- In the Configure Destination section, enter the endpoint to which you want to send the logs. The endpoint must start with `https://`. For example, enter `https://<your_account>.splunkcloud.com:8088`. Note: `/services/collector/event` is automatically appended to the endpoint.

- In the Configure Authentication section, enter the Splunk HEC token. See Set up and use HTTP Event Collector for more information about the Splunk HEC token.

- Click Save.

Note: Indexer acknowledgment must be disabled for the HEC token.
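Before saving the destination, you may want to confirm that your HEC endpoint and token accept events. The Python sketch below builds such a test request, assuming the standard Splunk HEC convention of sending the token as `Authorization: Splunk <token>`. The helper name and the test payload are hypothetical, and stripping a trailing slash before appending `/services/collector/event` is an assumption about how the base endpoint is combined with that path.

```python
import json
import urllib.request

# Path that is appended to the base endpoint you enter in the Configure Destination section
HEC_PATH = "/services/collector/event"


def build_hec_test_request(base_endpoint: str, hec_token: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a test event request for Splunk HEC."""
    if not base_endpoint.startswith("https://"):
        raise ValueError("The endpoint must start with https://")
    url = base_endpoint.rstrip("/") + HEC_PATH
    # Minimal HEC payload: the event body goes under the "event" key
    body = json.dumps({"event": {"message": "audit forwarding test"}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Splunk {hec_token}", "Content-Type": "application/json"},
        method="POST",
    )
```

To actually send the test event, pass the request to `urllib.request.urlopen(req)`; an HTTP error at this point usually means the endpoint or token needs fixing before you configure the destination.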

Elasticsearch

- Enter a name for the destination.

- In the Configure Destination section, enter the following details:

  a. The endpoint to which you want to send the logs. The endpoint must start with `https://`. An example endpoint for Elasticsearch: `https://<your_account>.us-central1.gcp.cloud.es.io`.

  b. The name of the destination index where you want to send the logs.

  c. Optionally, select the index rotation for how often you want to create a new index: `No Rotation`, `Every Hour`, `Every Day`, `Every Week`, or `Every Month`. The default is `No Rotation`.

- In the Configure Authentication section, enter the username and password for your Elasticsearch account.

- Click Save.
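After saving, one way to check that audit events are arriving is the Elasticsearch `_count` API. The Python sketch below assumes the same username and password you entered for basic authentication; `build_count_request` and `count_documents` are hypothetical helpers. If you enabled index rotation, the concrete index names may differ from the name you entered, so you may need a wildcard index pattern instead of the plain name (an assumption about the naming, not documented behavior).

```python
import base64
import json
import urllib.request


def build_count_request(endpoint: str, index: str, username: str, password: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a GET request for Elasticsearch's _count API."""
    if not endpoint.startswith("https://"):
        raise ValueError("The endpoint must start with https://")
    url = f"{endpoint.rstrip('/')}/{index}/_count"
    # Same basic-auth credentials as in the Configure Authentication section
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"}, method="GET")


def count_documents(req: urllib.request.Request) -> int:
    """Send the request and return the 'count' field from the JSON response."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["count"]
```

Calling `count_documents` a few minutes apart should show the count increasing once forwarding is working.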
