Ceph

Integration version: 4.0.0

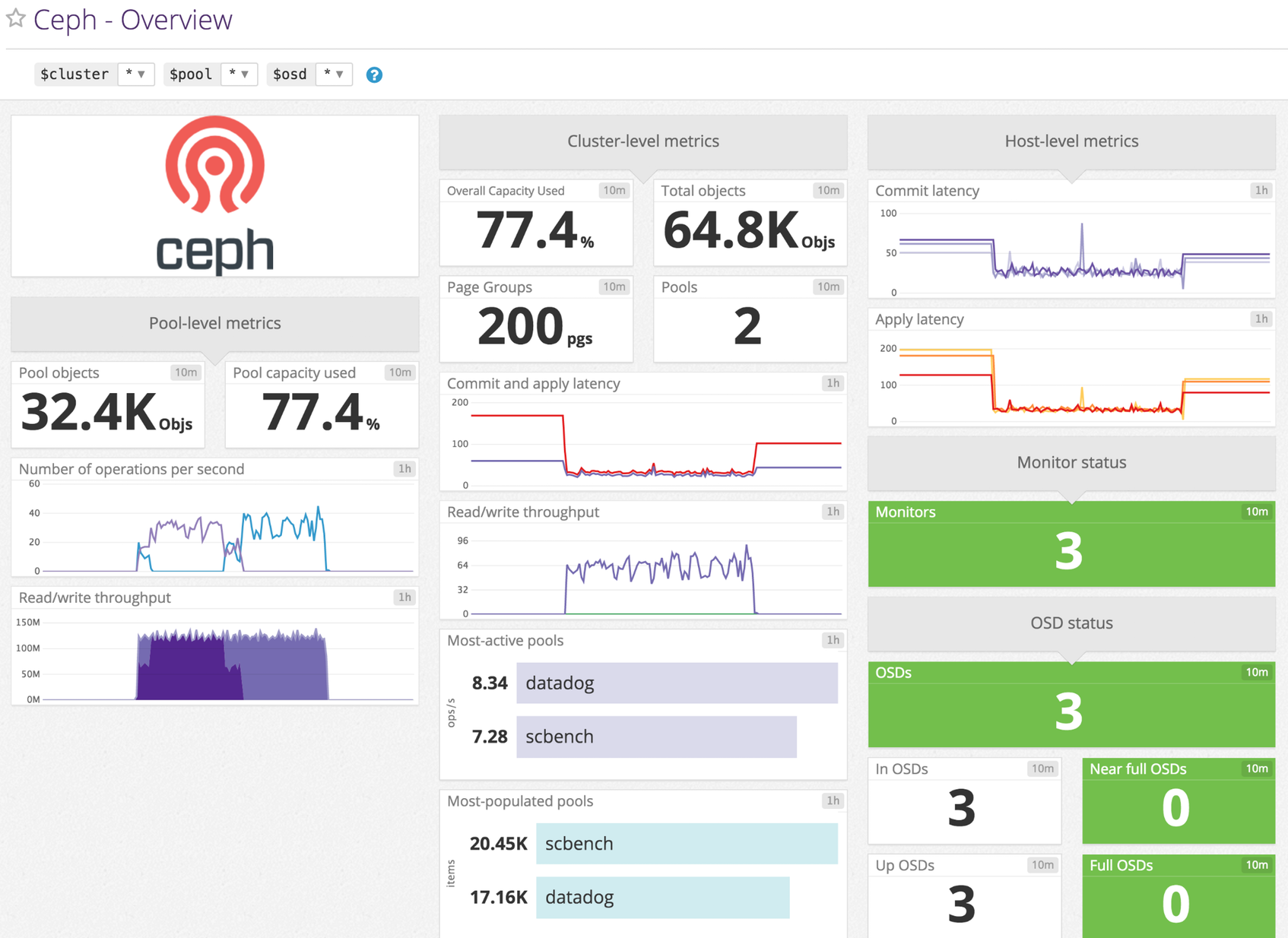

Overview

Enable the Datadog-Ceph integration to:

- Track disk usage across storage pools

- Receive service checks in case of issues

- Monitor I/O performance metrics

Setup

Installation

The Ceph check is included in the Datadog Agent package, so you don’t need to install anything else on your Ceph servers.

Configuration

Edit the file ceph.d/conf.yaml in the conf.d/ folder at the root of your Agent’s configuration directory.

See the sample ceph.d/conf.yaml for all available configuration options:

    init_config:

    instances:
      - ceph_cmd: /path/to/your/ceph # default is /usr/bin/ceph
        use_sudo: true # only if the ceph binary needs sudo on your nodes

If you enabled use_sudo, add a line like the following to your sudoers file:

dd-agent ALL=(ALL) NOPASSWD:/path/to/your/ceph
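To make the relationship between ceph_cmd, use_sudo, and the sudoers entry concrete, here is a minimal Python sketch of how a command line could be assembled from those two options. The helper name build_ceph_command and the choice of the "status" subcommand are assumptions for illustration; the check's actual implementation may differ.

```python
from typing import List, Sequence


def build_ceph_command(ceph_cmd: str = "/usr/bin/ceph",
                       use_sudo: bool = False,
                       args: Sequence[str] = ("status",)) -> List[str]:
    """Return the argv list for invoking the ceph binary (hypothetical helper)."""
    command = [ceph_cmd, *args]
    if use_sudo:
        # "sudo -n" fails instead of prompting for a password, which is why
        # the sudoers entry for the Agent user needs NOPASSWD.
        command = ["sudo", "-n", *command]
    return command


if __name__ == "__main__":
    print(build_ceph_command("/path/to/your/ceph", use_sudo=True))
```

The path in the sudoers line should be the same path you set in ceph_cmd; otherwise sudo would still ask for a password.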

Log collection

Available for Agent versions >6.0

Collecting logs is disabled by default in the Datadog Agent. Enable it in your datadog.yaml file:

    logs_enabled: true

Next, edit ceph.d/conf.yaml by uncommenting the logs lines at the bottom. Update the logs path with the correct path to your Ceph log files.

    logs:
      - type: file
        path: /var/log/ceph/*.log
        source: ceph
        service: "<APPLICATION_NAME>"
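Before pointing the Agent at a wildcard path, it can help to check which file names the pattern would select. The sketch below uses Python's pathlib matching, where * does not cross directory separators. This is an assumption about the wildcard's behavior; the Agent's own matching rules may differ, and the file names are hypothetical.

```python
from pathlib import PurePosixPath

# Pattern taken from the logs section of ceph.d/conf.yaml.
LOG_PATTERN = "/var/log/ceph/*.log"


def matches_log_pattern(path: str, pattern: str = LOG_PATTERN) -> bool:
    """Return True if the absolute path matches the wildcard pattern."""
    return PurePosixPath(path).match(pattern)


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    for candidate in (
        "/var/log/ceph/ceph-osd.0.log",
        "/var/log/ceph/ceph.log.1",
        "/var/log/ceph/archive/ceph.log",
    ):
        print(candidate, matches_log_pattern(candidate))
```

Under these semantics, rotated files such as ceph.log.1 and files in subdirectories fall outside the pattern.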

Validation

Run the Agent’s status subcommand and look for ceph under the Checks section.

Data Collected

Metrics

| Metric | Type | Description | Unit |
| --- | --- | --- | --- |
| ceph.aggregate_pct_used | gauge | Overall capacity usage metric | percent |
| ceph.apply_latency_ms | gauge | Time taken to flush an update to disks | millisecond |
| ceph.commit_latency_ms | gauge | Time taken to commit an operation to the journal | millisecond |
| ceph.misplaced_objects | gauge | Number of objects misplaced | item |
| ceph.misplaced_total | gauge | Total number of objects if there are misplaced objects | item |
| ceph.num_full_osds | gauge | Number of full OSDs | item |
| ceph.num_in_osds | gauge | Number of participating storage daemons | item |
| ceph.num_mons | gauge | Number of monitor daemons | item |
| ceph.num_near_full_osds | gauge | Number of nearly full OSDs | item |
| ceph.num_objects | gauge | Object count for a given pool | item |
| ceph.num_osds | gauge | Number of known storage daemons | item |
| ceph.num_pgs | gauge | Number of placement groups available | item |
| ceph.num_pools | gauge | Number of pools | item |
| ceph.num_up_osds | gauge | Number of online storage daemons | item |
| ceph.op_per_sec | gauge | IO operations per second for a given pool | operation |
| ceph.osd.pct_used | gauge | Percentage used of full/near full OSDs | percent |
| ceph.pgstate.active_clean | gauge | Number of active+clean placement groups | item |
| ceph.read_bytes | gauge | Per-pool read bytes | byte |
| ceph.read_bytes_sec | gauge | Bytes/second being read | byte |
| ceph.read_op_per_sec | gauge | Per-pool read operations/second | operation |
| ceph.recovery_bytes_per_sec | gauge | Rate of recovered bytes | byte |
| ceph.recovery_keys_per_sec | gauge | Rate of recovered keys | item |
| ceph.recovery_objects_per_sec | gauge | Rate of recovered objects | item |
| ceph.total_objects | gauge | Object count from the underlying object store. [v<=3 only] | item |
| ceph.write_bytes | gauge | Per-pool write bytes | byte |
| ceph.write_bytes_sec | gauge | Bytes/second being written | byte |
| ceph.write_op_per_sec | gauge | Per-pool write operations/second | operation |

Note: If you are running Ceph Luminous or later, the ceph.osd.pct_used metric is not included.

Events

The Ceph check does not include any events.

Service Checks

ceph.overall_status

Returns OK if your Ceph cluster status is HEALTH_OK, WARNING if it’s HEALTH_WARN, CRITICAL otherwise.

Statuses: ok, warning, critical
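The mapping described for ceph.overall_status can be sketched as a small Python function. This is an illustrative sketch, not the check's source code; the function name overall_status is hypothetical, and it assumes the cluster reports its health as HEALTH_OK, HEALTH_WARN, or HEALTH_ERR.

```python
def overall_status(cluster_health: str) -> str:
    """Map a Ceph cluster health string to a service check status."""
    if cluster_health == "HEALTH_OK":
        return "ok"
    if cluster_health == "HEALTH_WARN":
        return "warning"
    # Anything else, including HEALTH_ERR, is treated as critical.
    return "critical"


if __name__ == "__main__":
    for health in ("HEALTH_OK", "HEALTH_WARN", "HEALTH_ERR"):
        print(health, "->", overall_status(health))
```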

ceph.osd_down

Returns OK if you have no down OSDs. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_orphan

Returns OK if you have no orphan OSDs. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_full

Returns OK if your OSDs are not full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_nearfull

Returns OK if your OSDs are not near full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pool_full

Returns OK if your pools have not reached their quota. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pool_near_full

Returns OK if your pools are not near reaching their quota. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_availability

Returns OK if there is full data availability. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_degraded

Returns OK if there is full data redundancy. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_degraded_full

Returns OK if there is enough space in the cluster for data redundancy. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_damaged

Returns OK if there are no inconsistencies after data scrubbing. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_not_scrubbed

Returns OK if the PGs were scrubbed recently. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_not_deep_scrubbed

Returns OK if the PGs were deep scrubbed recently. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.cache_pool_near_full

Returns OK if the cache pools are not near full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.too_few_pgs

Returns OK if the number of PGs is above the min threshold. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.too_many_pgs

Returns OK if the number of PGs is below the max threshold. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.object_unfound

Returns OK if all objects can be found. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.request_slow

Returns OK if requests are taking a normal time to process. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.request_stuck

Returns OK if requests are taking a normal time to process. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical
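All of the per-condition service checks above share one rule: OK when the condition is absent, WARNING when it is reported with severity HEALTH_WARN, and CRITICAL otherwise. The Python sketch below illustrates that rule. It assumes the health report is a mapping from a Ceph health code to an entry with a severity field, and that a service check such as ceph.osd_down corresponds to a code such as OSD_DOWN. The helper name and the sample data are hypothetical.

```python
from typing import Mapping


def health_check_status(checks: Mapping[str, Mapping[str, str]],
                        code: str) -> str:
    """Return the service check status for one Ceph health code."""
    entry = checks.get(code)
    if entry is None:
        # The condition is not reported, so the check passes.
        return "ok"
    if entry.get("severity") == "HEALTH_WARN":
        return "warning"
    # Any other severity is treated as critical.
    return "critical"


if __name__ == "__main__":
    # Hypothetical health report, for illustration only.
    sample_checks = {
        "OSD_DOWN": {"severity": "HEALTH_WARN"},
        "PG_DAMAGED": {"severity": "HEALTH_ERR"},
    }
    for code in ("OSD_DOWN", "PG_DAMAGED", "OSD_FULL"):
        print(code, "->", health_check_status(sample_checks, code))
```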

Troubleshooting

Need help? Contact Datadog support.