(LEGACY) Advanced Configurations

Observability Pipelines is not available on the US1-FED Datadog site.

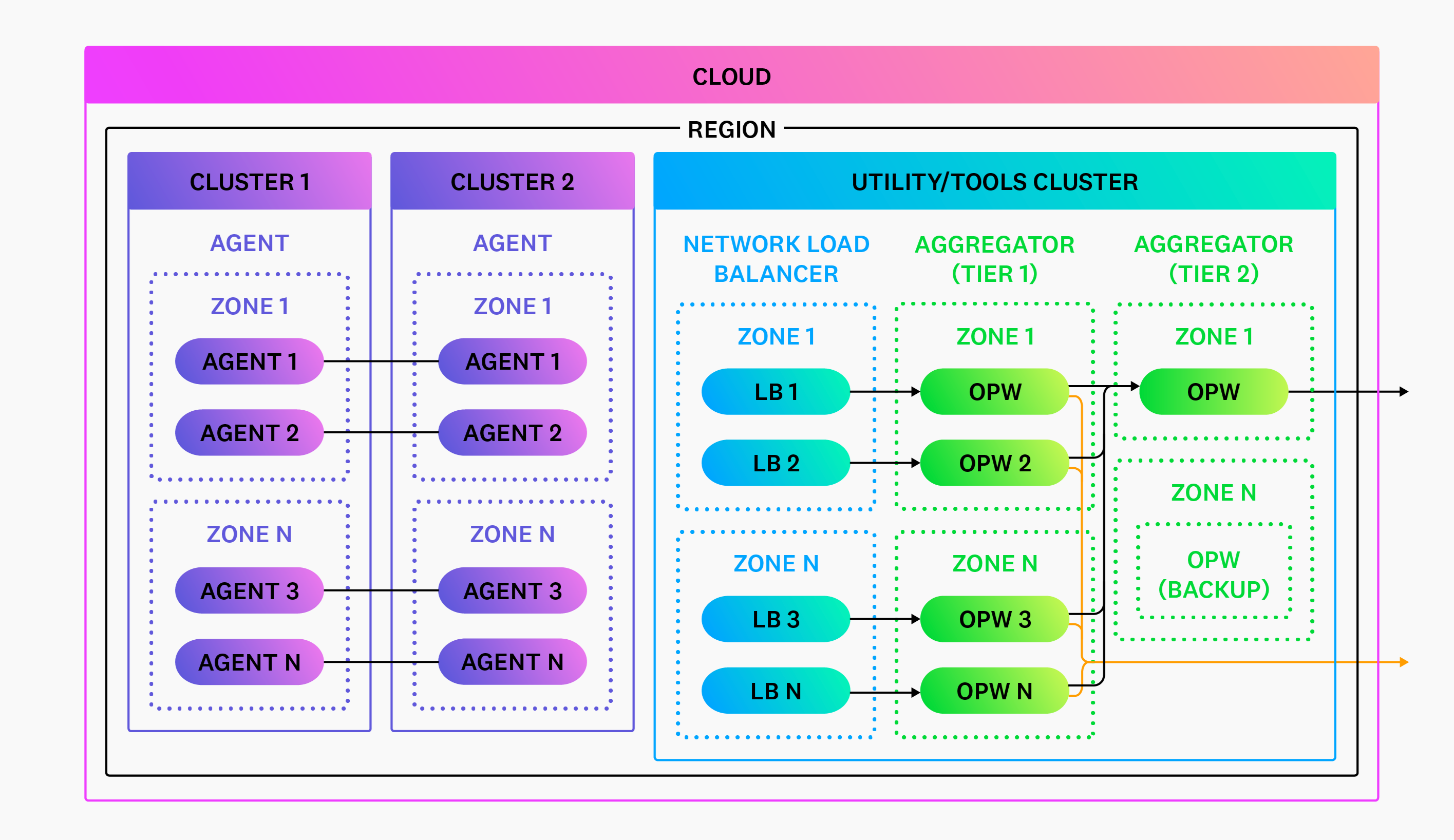

This guide is for large-scale production-level deployments.

Multiple aggregator deployments

As covered in Networking, Datadog recommends starting with one Observability Pipelines Worker aggregator per region. This keeps your initial deployment of Observability Pipelines Worker simple, but there are circumstances where starting with multiple deployments is ideal:

Prevent sending data over the public internet. If you have multiple clouds and regions, deploy the Observability Pipelines Worker aggregator in each of them to prevent sending large amounts of data over the internet. Your Observability Pipelines Worker aggregator should receive internal data and serve as the single point of egress for your network.

Independent management. You have teams that can operate and manage an Observability Pipelines Worker aggregator independently for their use case. For example, your Data Science team may be responsible for operating their own infrastructure and has the means to independently operate their own Observability Pipelines Worker aggregator.

Multiple cloud accounts

Many users have multiple cloud accounts with VPCs and clusters inside. In this case, Datadog still recommends deploying one Observability Pipelines Worker aggregator per region. Deploy Observability Pipelines Worker into your utility or tools cluster and configure all your cloud accounts to send data to this cluster. See Networking for more information.

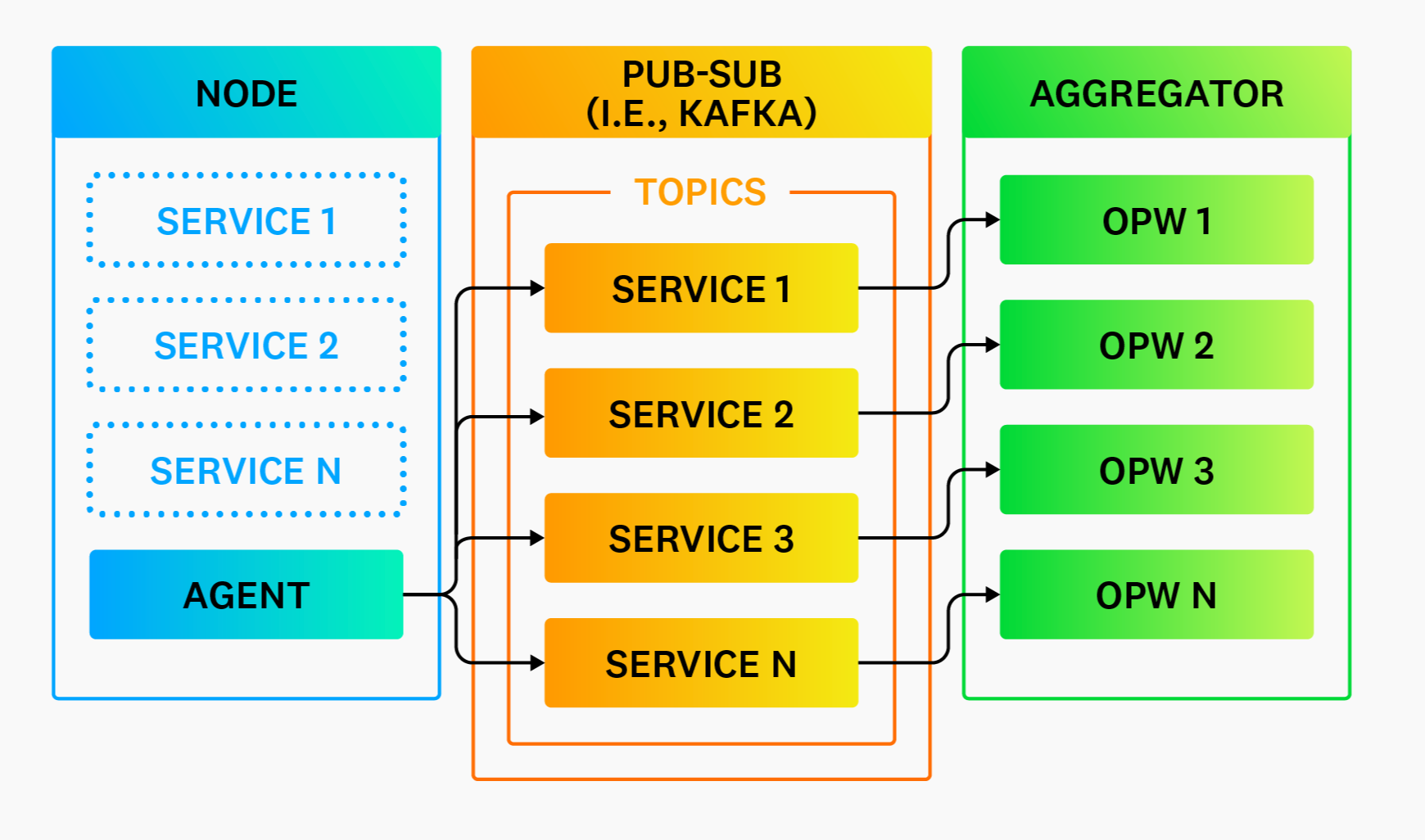

Pub-sub systems

A publish-subscribe (pub-sub) system such as Kafka is not required to make your architecture highly available or highly durable (see High Availability and Disaster Recovery), but such systems do offer the following advantages:

Improved reliability. Pub-sub systems are designed to be highly reliable and durable systems that change infrequently. They are especially reliable if you are using a managed option. The Observability Pipelines Worker is likely to change more often based on its purpose. Isolate Observability Pipelines Worker downtime behind a pub-sub system to increase availability as perceived by your clients and to make recovery simpler.

Load balancer not required. Pub-sub systems eliminate the need for a load balancer. Your pub-sub system handles the coordination of consumers, making it easy to scale Observability Pipelines Worker horizontally.

Pub-sub partitioning

Partitioning, or “topics” in Kafka terminology, refers to separating data in your pub-sub systems. You should partition along data origin lines, such as the service or host that generated the data.
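As a rough illustration of partitioning along data origin lines, the sketch below maps an origin name (a service or host) to a stable partition index. The function names, the `service` field, and the partition count are hypothetical and are not part of Observability Pipelines Worker or any pub-sub client. In practice, your pub-sub client usually does this for you when you set a message key.

```python
import hashlib


def partition_for(origin: str, num_partitions: int) -> int:
    """Map a data origin (service or host name) to a stable partition index."""
    # A cryptographic digest is used only because it is stable across processes,
    # unlike Python's built-in hash(), which is randomized per process for strings.
    digest = hashlib.sha256(origin.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def group_by_partition(events, num_partitions, origin_field="service"):
    """Group events so that every event from one origin lands in one partition."""
    partitions = {}
    for event in events:
        index = partition_for(event[origin_field], num_partitions)
        partitions.setdefault(index, []).append(event)
    return partitions


# Hypothetical sample events; the field names are assumptions for this sketch.
events = [
    {"service": "checkout", "message": "order placed"},
    {"service": "auth", "message": "login ok"},
    {"service": "checkout", "message": "payment captured"},
]
grouped = group_by_partition(events, num_partitions=8)
```

Keying on the origin keeps all data from one service or host together, so a consumer reading a partition sees that origin's events in order.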

Pub-sub configuration

When using a pub-sub system, Datadog recommends the following configuration changes for Observability Pipelines Worker:

- Enable end-to-end acknowledgments for all sinks. This setting ensures that the pub-sub checkpoint is not advanced until data is successfully written.

- Use memory buffers. There is no need to use Observability Pipelines Worker’s disk buffers when it sits behind a pub-sub system. Your pub-sub system is designed for long-term buffering with high durability. Observability Pipelines Worker should only be responsible for reading, processing, and routing the data (not durability).
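The interaction between these two recommendations can be sketched as a small simulation. This is not Observability Pipelines Worker code: `FakeTopic`, `process_batch`, and the sink callables are hypothetical stand-ins that only show why the checkpoint must trail successful writes when the buffer lives in memory.

```python
from collections import deque


class FakeTopic:
    """Hypothetical stand-in for one pub-sub partition with a committed checkpoint."""

    def __init__(self, records):
        self.records = list(records)
        self.committed = 0  # index of the next record delivered after a restart

    def read_from_checkpoint(self, max_records):
        return self.records[self.committed:self.committed + max_records]

    def commit(self, count):
        self.committed += count


def process_batch(topic, sink, batch_size=2):
    """Read a batch into memory, write it, and commit only if every write succeeded.

    `sink` is any callable that returns True when a record was written.
    """
    buffer = deque(topic.read_from_checkpoint(batch_size))  # memory buffer only
    delivered = 0
    while buffer:
        if not sink(buffer[0]):
            # Checkpoint is not advanced, so the whole batch is read again later.
            # Records already written in this batch may be delivered twice.
            return False
        buffer.popleft()
        delivered += 1
    topic.commit(delivered)
    return True
```

Because the pub-sub system still holds every uncommitted record, losing the in-memory buffer in a crash loses no data; the batch is read again from the last checkpoint.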

Global aggregation

This section provides recommendations for performing global calculations for legacy destinations. Modern destinations already support global calculations. For example, Datadog supports distributions (such as DDSketch) that handle global observations of your metrics data.

Global aggregation refers to the ability to aggregate data for an entire region, for example, computing global quantiles for CPU load averages. To achieve this, a single Observability Pipelines Worker instance must have access to every node’s CPU load average statistics. This is not possible with horizontal scaling; each individual Observability Pipelines Worker instance only has access to a slice of the overall data. Therefore, aggregation should be tiered.

In the above diagram, the tier two aggregators receive an aggregated sub-stream of the overall data from the tier one aggregators. This allows a single instance to get a global view without processing the entire stream or introducing a single point of failure.

Recommendations

- Limit global aggregation to tasks that can reduce data, such as computing global histograms. Never send all data to your global aggregators.

- Continue to use your local aggregators to process and deliver most data so that you do not introduce a single point of failure.
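The tiered approach can be illustrated with histograms, which shrink the data before it reaches the global tier. The sketch below is a simplified model, not Observability Pipelines Worker configuration: the bucket bounds and function names are assumptions chosen for the example, and the quantile is only as precise as the bucket width.

```python
from collections import Counter

# Hypothetical upper bounds for CPU load average buckets.
BUCKET_BOUNDS = [0.5, 1.0, 2.0, 4.0, 8.0]


def bucket_index(value):
    """Return the index of the first bucket whose upper bound covers the value."""
    for i, bound in enumerate(BUCKET_BOUNDS):
        if value <= bound:
            return i
    return len(BUCKET_BOUNDS)  # overflow bucket


def local_histogram(samples):
    """Tier one: reduce the raw samples this aggregator sees to bucket counts."""
    return Counter(bucket_index(v) for v in samples)


def merge_histograms(histograms):
    """Tier two: sum the bucket counts received from every tier one aggregator."""
    total = Counter()
    for histogram in histograms:
        total.update(histogram)
    return total


def approximate_quantile(histogram, q):
    """Return the upper bound of the bucket that contains quantile q."""
    total = sum(histogram.values())
    if total == 0:
        raise ValueError("histogram is empty")
    running = 0
    for i in range(len(BUCKET_BOUNDS) + 1):
        running += histogram.get(i, 0)
        if running >= q * total:
            return BUCKET_BOUNDS[i] if i < len(BUCKET_BOUNDS) else float("inf")
    return float("inf")


# Two tier one aggregators each see only a slice of the region's data.
tier_one_a = local_histogram([0.2, 0.7, 1.5])
tier_one_b = local_histogram([3.0, 0.9, 9.0])
global_view = merge_histograms([tier_one_a, tier_one_b])
median_bound = approximate_quantile(global_view, 0.5)
```

Each tier one aggregator forwards a handful of bucket counts rather than every sample, so the tier two instance gets a global view while handling only a small fraction of the stream.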