- Esenciales

- Empezando

- Datadog

- Sitio web de Datadog

- DevSecOps

- Serverless para Lambda AWS

- Agent

- Integraciones

- Contenedores

- Dashboards

- Monitores

- Logs

- Rastreo de APM

- Generador de perfiles

- Etiquetas (tags)

- API

- Catálogo de servicios

- Session Replay

- Continuous Testing

- Monitorización Synthetic

- Gestión de incidencias

- Monitorización de bases de datos

- Cloud Security Management

- Cloud SIEM

- Application Security Management

- Workflow Automation

- CI Visibility

- Test Visibility

- Intelligent Test Runner

- Análisis de código

- Centro de aprendizaje

- Compatibilidad

- Glosario

- Atributos estándar

- Guías

- Agent

- Uso básico del Agent

- Arquitectura

- IoT

- Plataformas compatibles

- Recopilación de logs

- Configuración

- Configuración remota

- Automatización de flotas

- Actualizar el Agent

- Solucionar problemas

- Detección de nombres de host en contenedores

- Modo de depuración

- Flare del Agent

- Estado del check del Agent

- Problemas de NTP

- Problemas de permisos

- Problemas de integraciones

- Problemas del sitio

- Problemas de Autodiscovery

- Problemas de contenedores de Windows

- Configuración del tiempo de ejecución del Agent

- Consumo elevado de memoria o CPU

- Guías

- Seguridad de datos

- Integraciones

- OpenTelemetry

- Desarrolladores

- Autorización

- DogStatsD

- Checks personalizados

- Integraciones

- Crear una integración basada en el Agent

- Crear una integración API

- Crear un pipeline de logs

- Referencia de activos de integración

- Crear una oferta de mercado

- Crear un cuadro

- Crear un dashboard de integración

- Crear un monitor recomendado

- Crear una regla de detección Cloud SIEM

- OAuth para integraciones

- Instalar la herramienta de desarrollo de integraciones del Agente

- Checks de servicio

- Complementos de IDE

- Comunidad

- Guías

- API

- Aplicación móvil de Datadog

- CoScreen

- Cloudcraft

- En la aplicación

- Dashboards

- Notebooks

- Editor DDSQL

- Hojas

- Monitores y alertas

- Infraestructura

- Métricas

- Watchdog

- Bits AI

- Catálogo de servicios

- Catálogo de APIs

- Error Tracking

- Gestión de servicios

- Objetivos de nivel de servicio (SLOs)

- Gestión de incidentes

- De guardia

- Gestión de eventos

- Gestión de casos

- Workflow Automation

- App Builder

- Infraestructura

- Universal Service Monitoring

- Contenedores

- Serverless

- Monitorización de red

- Coste de la nube

- Rendimiento de las aplicaciones

- APM

- Términos y conceptos de APM

- Instrumentación de aplicación

- Recopilación de métricas de APM

- Configuración de pipelines de trazas

- Correlacionar trazas (traces) y otros datos de telemetría

- Trace Explorer

- Observabilidad del servicio

- Instrumentación dinámica

- Error Tracking

- Seguridad de los datos

- Guías

- Solucionar problemas

- Continuous Profiler

- Database Monitoring

- Gastos generales de integración del Agent

- Arquitecturas de configuración

- Configuración de Postgres

- Configuración de MySQL

- Configuración de SQL Server

- Configuración de Oracle

- Configuración de MongoDB

- Conexión de DBM y trazas

- Datos recopilados

- Explorar hosts de bases de datos

- Explorar métricas de consultas

- Explorar ejemplos de consulta

- Solucionar problemas

- Guías

- Data Streams Monitoring

- Data Jobs Monitoring

- Experiencia digital

- Real User Monitoring

- Monitorización del navegador

- Configuración

- Configuración avanzada

- Datos recopilados

- Monitorización del rendimiento de páginas

- Monitorización de signos vitales de rendimiento

- Monitorización del rendimiento de recursos

- Recopilación de errores del navegador

- Rastrear las acciones de los usuarios

- Señales de frustración

- Error Tracking

- Solucionar problemas

- Monitorización de móviles y TV

- Plataforma

- Session Replay

- Exploración de datos de RUM

- Feature Flag Tracking

- Error Tracking

- Guías

- Seguridad de los datos

- Monitorización del navegador

- Análisis de productos

- Pruebas y monitorización de Synthetics

- Continuous Testing

- Entrega de software

- CI Visibility

- CD Visibility

- Test Visibility

- Configuración

- Tests en contenedores

- Búsqueda y gestión

- Explorador

- Monitores

- Flujos de trabajo de desarrolladores

- Cobertura de código

- Instrumentar tests de navegador con RUM

- Instrumentar tests de Swift con RUM

- Detección temprana de defectos

- Reintentos automáticos de tests

- Correlacionar logs y tests

- Guías

- Solucionar problemas

- Intelligent Test Runner

- Code Analysis

- Quality Gates

- Métricas de DORA

- Seguridad

- Información general de seguridad

- Cloud SIEM

- Cloud Security Management

- Application Security Management

- Observabilidad de la IA

- Log Management

- Observability Pipelines

- Gestión de logs

- Administración

- Gestión de cuentas

- Seguridad de los datos

- Sensitive Data Scanner

- Ayuda

(LEGACY) High Availability and Disaster Recovery

This page is not yet available in Spanish. We are working on its translation.

If you have any questions or feedback about our current translation project, feel free to reach out to us!

If you have any questions or feedback about our current translation project, feel free to reach out to us!

Observability Pipelines is not available on the US1-FED Datadog site.

This guide is for large-scale production-level deployments.

In the context of Observability Pipelines, high availability refers to the Observability Pipelines Worker remaining available if there are any system issues.

To achieve high availability:

- Deploy at least two Observability Pipelines Worker instances in each Availability Zone.

- Deploy Observability Pipelines Worker in at least two Availability Zones.

- Front your Observability Pipelines Worker instances with a load balancer that balances traffic across Observability Pipelines Worker instances. See Capacity Planning and Scaling for more information.

Mitigating failure scenarios

Handling Observability Pipelines Worker process issues

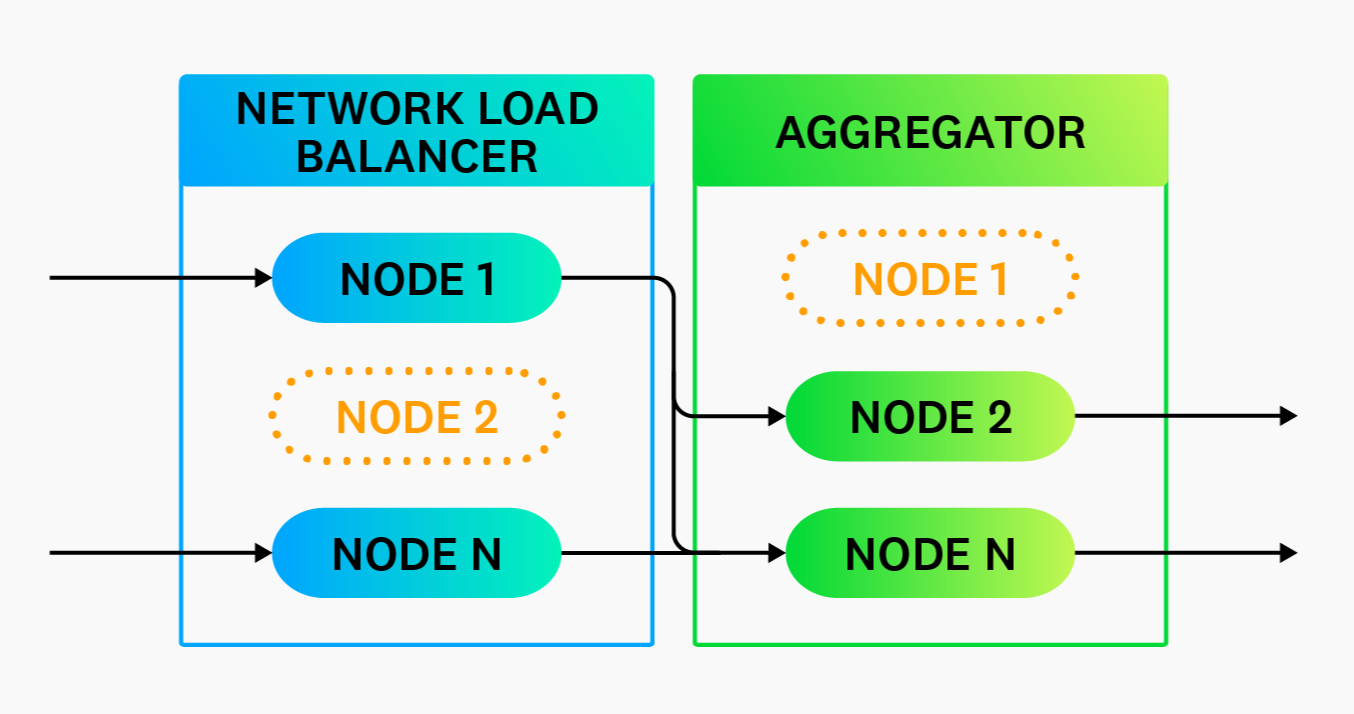

To mitigate a system process issue, distribute the Observability Pipelines Worker across multiple nodes and front them with a network load balancer that can redirect traffic to another Observability Pipelines Worker instance as needed. In addition, platform-level automated self-healing should eventually restart the process or replace the node.

Mitigating node failures

To mitigate node issues, distribute the Observability Pipelines Worker across multiple nodes and front them with a network load balancer that can redirect traffic to another Observability Pipelines Worker node. In addition, platform-level automated self-healing should eventually replace the node.

Handling availability zone failures

To mitigate issues with availability zones, deploy the Observability Pipelines Worker across multiple availability zones.

Mitigating region failures

Observability Pipelines Worker is designed to route internal observability data, and it should not failover to another region. Instead, Observability Pipelines Worker should be deployed in all of your regions. Therefore, if your entire network or region fails, Observability Pipelines Worker would fail with it. See Networking for more information.

Disaster recovery

Internal disaster recovery

Observability Pipelines Worker is an infrastructure-level tool designed to route internal observability data. It implements a shared-nothing architecture and does not manage state that should be replicated or transferred to a disaster recovery (DR) site. Therefore, if your entire region fails, Observability Pipelines Worker would fail with it. Therefore, you should install the Observability Pipelines Worker in your DR site as part of your broader DR plan.

External disaster recovery

If you’re using a managed destination, such as Datadog, Observability Pipelines Worker can facilitate automatic routing of data to your Datadog DR site using Observability Pipelines Worker’s circuit breaker feature.