
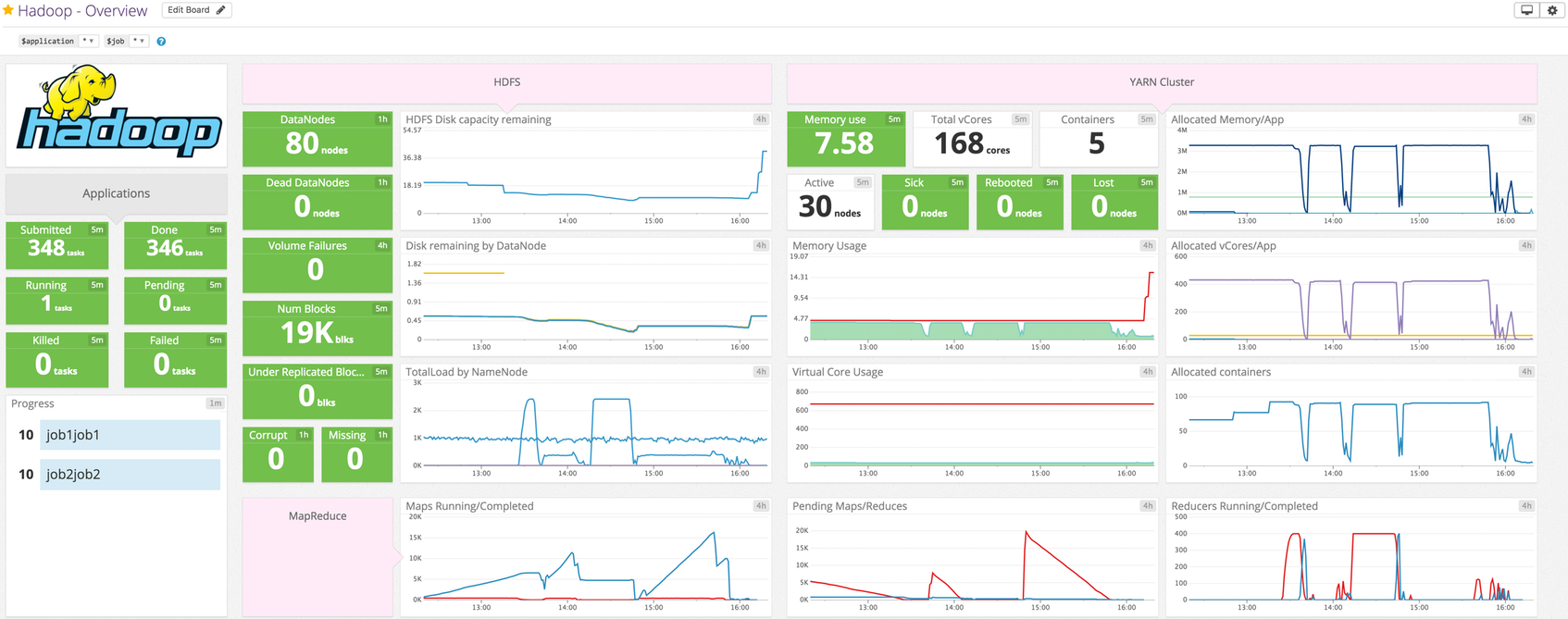

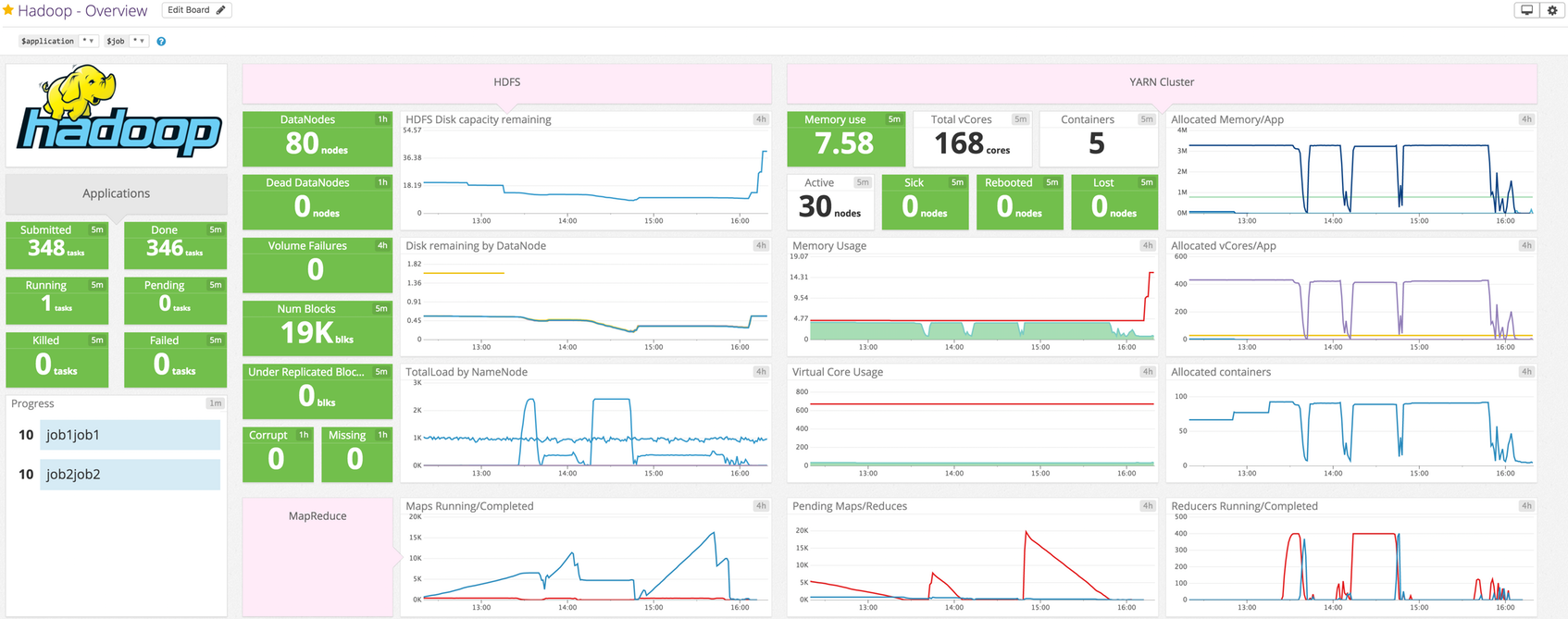

HDFS


HDFS DataNode Integration

Overview

Track disk utilization and failed volumes on each of your HDFS DataNodes. This Agent check collects metrics for these, as well as block- and cache-related metrics.

Use this check (hdfs_datanode) and its counterpart check (hdfs_namenode), not the older two-in-one check (hdfs); that check is deprecated.

Setup

Follow the instructions below to install and configure this check for an Agent running on a host. For containerized environments, see the Autodiscovery Integration Templates for guidance on applying these instructions.

Installation

The HDFS DataNode check is included in the Datadog Agent package, so you don’t need to install anything else on your DataNodes.

Configuration

Connect the Agent

Host

To configure this check for an Agent running on a host:

Edit the `hdfs_datanode.d/conf.yaml` file, in the `conf.d/` folder at the root of your Agent’s configuration directory. See the sample hdfs_datanode.d/conf.yaml for all available configuration options:

```yaml
init_config:

instances:
  ## @param hdfs_datanode_jmx_uri - string - required
  ## The HDFS DataNode check retrieves metrics from the HDFS DataNode's JMX
  ## interface via HTTP(S) (not a JMX remote connection). This check must be installed on a HDFS DataNode. The HDFS
  ## DataNode JMX URI is composed of the DataNode's hostname and port.
  ##
  ## The hostname and port can be found in the hdfs-site.xml conf file under
  ## the property dfs.datanode.http.address
  ## https://hadoop.apache.org/docs/r3.1.3/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml
  #
  - hdfs_datanode_jmx_uri: http://localhost:9864
```
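The check reads the JSON served by the DataNode’s `/jmx` HTTP servlet. The Python sketch below illustrates that flow. It is not the Datadog check’s code. It assumes two things: the disk and cache figures live in a bean whose name starts with `Hadoop:service=DataNode,name=FSDatasetState`, and attribute names such as `Capacity`, `DfsUsed`, and `Remaining` match your Hadoop version. The helper names and the attribute-to-metric mapping are hypothetical.

```python
import json
import urllib.request

# Hypothetical mapping from FSDatasetState bean attributes to the metric names
# in the "Data Collected" table. Verify the attribute names for your Hadoop version.
DATANODE_ATTRS = {
    "Capacity": "hdfs.datanode.dfs_capacity",
    "DfsUsed": "hdfs.datanode.dfs_used",
    "Remaining": "hdfs.datanode.dfs_remaining",
    "NumFailedVolumes": "hdfs.datanode.num_failed_volumes",
    "CacheCapacity": "hdfs.datanode.cache_capacity",
    "CacheUsed": "hdfs.datanode.cache_used",
}
BEAN_PREFIX = "Hadoop:service=DataNode,name=FSDatasetState"


def fetch_jmx(jmx_uri, timeout=10):
    """GET <jmx_uri>/jmx over HTTP(S) and return the decoded JSON document."""
    with urllib.request.urlopen(jmx_uri.rstrip("/") + "/jmx", timeout=timeout) as resp:
        return json.load(resp)


def extract_datanode_metrics(jmx_doc):
    """Map attributes of the FSDatasetState bean(s) to metric names.

    Some versions append a storage-ID suffix to the bean name, so match on the prefix.
    """
    metrics = {}
    for bean in jmx_doc.get("beans", []):
        if not bean.get("name", "").startswith(BEAN_PREFIX):
            continue
        for attr, metric in DATANODE_ATTRS.items():
            if attr in bean:
                metrics[metric] = bean[attr]
    return metrics


# Example (needs a reachable DataNode):
# print(extract_datanode_metrics(fetch_jmx("http://localhost:9864")))
```

The fetch and the extraction are kept separate, so you can test the mapping against a saved `/jmx` response without a live cluster.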

Containerized

For containerized environments, see the Autodiscovery Integration Templates for guidance on applying the parameters below.

| Parameter | Value |
|---|---|
| `<INTEGRATION_NAME>` | `hdfs_datanode` |
| `<INIT_CONFIG>` | blank or `{}` |
| `<INSTANCE_CONFIG>` | `{"hdfs_datanode_jmx_uri": "http://%%host%%:9864"}` |
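On Kubernetes, these three values are typically combined into a single Autodiscovery annotation on the DataNode pod. The Python sketch below builds that annotation. It assumes the `ad.datadoghq.com/<CONTAINER_NAME>.checks` annotation format used by Agent v7.36+, so confirm the key and layout in the Autodiscovery documentation for your Agent version. The function name and the container name `datanode` are hypothetical.

```python
import json


def ad_checks_annotation(container_name, integration_name, init_config, instance_config):
    """Return (key, value) for an Autodiscovery v2 checks annotation (assumed format).

    %%host%% is left untouched: the Agent resolves template variables at runtime.
    """
    key = f"ad.datadoghq.com/{container_name}.checks"
    value = json.dumps(
        {integration_name: {"init_config": init_config, "instances": [instance_config]}}
    )
    return key, value


key, value = ad_checks_annotation(
    "datanode",  # hypothetical container name in the pod spec
    "hdfs_datanode",
    {},
    {"hdfs_datanode_jmx_uri": "http://%%host%%:9864"},
)
print(f"{key}: '{value}'")
```

The printed line is meant to go under `metadata.annotations` in the pod template. Generating the JSON with `json.dumps` avoids hand-escaping mistakes in the quoted value.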

Log collection

Available for Agent >6.0

Collecting logs is disabled by default in the Datadog Agent. Enable it in the `datadog.yaml` file:

```yaml
logs_enabled: true
```

Add this configuration block to your `hdfs_datanode.d/conf.yaml` file to start collecting your DataNode logs:

```yaml
logs:
  - type: file
    path: /var/log/hadoop-hdfs/*.log
    source: hdfs_datanode
    service: <SERVICE_NAME>
```

Change the `path` and `service` parameter values to match your environment.
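If the wildcard in `path` matches nothing, no logs arrive and the problem is easy to miss. As a quick pre-check, this Python sketch lists the files the pattern currently matches. Python’s `glob` may not match exactly like the Agent does. The script also runs with your permissions rather than the Agent’s, so on Linux run it as the `dd-agent` user (for example with `sudo -u dd-agent`) to confirm read access as well. The function names are hypothetical.

```python
import glob
import os


def matching_log_files(pattern="/var/log/hadoop-hdfs/*.log"):
    """Return the regular files that `pattern` currently matches, sorted."""
    return sorted(p for p in glob.glob(pattern) if os.path.isfile(p))


def unreadable(paths):
    """Return the subset of `paths` the current user cannot read."""
    return [p for p in paths if not os.access(p, os.R_OK)]


if __name__ == "__main__":
    files = matching_log_files()
    if not files:
        print("No files match; adjust `path` in hdfs_datanode.d/conf.yaml")
    for path in files:
        print(path)
    for path in unreadable(files):
        print(f"not readable by this user: {path}")
```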

Validation

Run the Agent’s status subcommand and look for hdfs_datanode under the Checks section.
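To script this validation, you can search the status output for the check’s section. The parser below is a sketch that assumes the current text layout of `datadog-agent status`. In that layout, each check name is underlined with dashes and each instance line ends with a bracketed status such as `[OK]`. This layout is not a stable interface and may change between Agent versions. The function names are hypothetical.

```python
import re
import subprocess


def agent_status_text():
    """Run `datadog-agent status` (usually needs sudo) and return its stdout."""
    return subprocess.run(
        ["datadog-agent", "status"], capture_output=True, text=True, check=True
    ).stdout


def check_instance_statuses(status_text, check_name):
    """Return the bracketed status (e.g. "OK") of each instance of `check_name`."""
    lines = status_text.splitlines()
    statuses = []
    current = None
    for i, line in enumerate(lines):
        underline = lines[i + 1].strip() if i + 1 < len(lines) else ""
        if underline and set(underline) <= {"-", "="}:
            # A line underlined with dashes or equals signs starts a new section;
            # its first word is the section (or check) name.
            words = line.split()
            current = words[0] if words else None
            continue
        if current == check_name:
            match = re.search(r"Instance ID: .*\[(\w+)\]", line)
            if match:
                statuses.append(match.group(1))
    return statuses


# Example (on the Agent host):
# print(check_instance_statuses(agent_status_text(), "hdfs_datanode"))
```

An empty list means the check’s section was not found, which usually means the configuration file was not loaded.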

Data Collected

Metrics

| Metric | Type | Description | Unit |
|---|---|---|---|
| hdfs.datanode.cache_capacity | gauge | Cache capacity in bytes | byte |
| hdfs.datanode.cache_used | gauge | Cache used in bytes | byte |
| hdfs.datanode.dfs_capacity | gauge | Disk capacity in bytes | byte |
| hdfs.datanode.dfs_remaining | gauge | Remaining disk space in bytes | byte |
| hdfs.datanode.dfs_used | gauge | Disk usage in bytes | byte |
| hdfs.datanode.estimated_capacity_lost_total | gauge | Estimated capacity lost in bytes | byte |
| hdfs.datanode.last_volume_failure_date | gauge | Date and time of the last volume failure, in milliseconds since the epoch | millisecond |
| hdfs.datanode.num_blocks_cached | gauge | Number of blocks cached | block |
| hdfs.datanode.num_blocks_failed_to_cache | gauge | Number of blocks that failed to be cached | block |
| hdfs.datanode.num_blocks_failed_to_uncache | gauge | Number of blocks that failed to be removed from the cache | block |
| hdfs.datanode.num_failed_volumes | gauge | Number of failed volumes | |
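Disk utilization is not reported as a separate metric, but it follows from the byte gauges above. The Python sketch below shows the arithmetic for one DataNode, assuming you already have the three values. The function name and sample values are hypothetical. It treats the leftover bytes as non-DFS usage (other files on the same disks, reserved space), which is an approximation.

```python
def datanode_disk_summary(dfs_capacity, dfs_used, dfs_remaining):
    """Derive utilization figures from hdfs.datanode.dfs_* gauge values (all in bytes)."""
    if dfs_capacity <= 0:
        raise ValueError("dfs_capacity must be positive")
    return {
        "used_pct": 100.0 * dfs_used / dfs_capacity,
        "remaining_pct": 100.0 * dfs_remaining / dfs_capacity,
        # Bytes that are neither HDFS blocks nor free for HDFS (approximate non-DFS usage).
        "other_bytes": dfs_capacity - dfs_used - dfs_remaining,
    }


# Hypothetical values for one DataNode.
print(datanode_disk_summary(dfs_capacity=1000, dfs_used=250, dfs_remaining=700))
# {'used_pct': 25.0, 'remaining_pct': 70.0, 'other_bytes': 50}
```

In a Datadog dashboard, the same ratio can be expressed as a formula over hdfs.datanode.dfs_used and hdfs.datanode.dfs_capacity.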

Events

The HDFS DataNode check does not include any events.

Service Checks

hdfs.datanode.jmx.can_connect

Returns CRITICAL if the Agent cannot connect to the DataNode’s JMX interface for any reason. Returns OK otherwise.

Statuses: ok, critical
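When this service check reports CRITICAL, reproducing the connection from the Agent host helps narrow down the cause. The Python sketch below mirrors only the documented semantics: any failure to reach the JMX endpoint means CRITICAL, otherwise OK. It is not the Datadog check’s code. The `opener` parameter is a hypothetical hook that lets you substitute a fake connection in tests.

```python
import urllib.request

OK, CRITICAL = "OK", "CRITICAL"


def jmx_can_connect(jmx_uri, opener=urllib.request.urlopen, timeout=5):
    """Return CRITICAL if <jmx_uri>/jmx cannot be fetched for any reason, else OK."""
    url = jmx_uri.rstrip("/") + "/jmx"
    try:
        response = opener(url, timeout=timeout)
        response.close()
    except Exception:  # DNS failure, refused connection, timeout, HTTP error status, ...
        return CRITICAL
    return OK


# Example (on the DataNode host):
# print(jmx_can_connect("http://localhost:9864"))
```

Because `urlopen` raises on 4xx and 5xx responses, an endpoint that answers with an error status also counts as CRITICAL here.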

Troubleshooting

Need help? Contact Datadog support.

Further Reading

- Hadoop architectural overview

- How to monitor Hadoop metrics

- How to collect Hadoop metrics

- How to monitor Hadoop with Datadog

HDFS NameNode Integration

Overview

Monitor your primary and standby HDFS NameNodes to know when your cluster falls into a precarious state: when you’re down to one NameNode remaining, or when it’s time to add more capacity to the cluster. This Agent check collects metrics for remaining capacity, corrupt/missing blocks, dead DataNodes, filesystem load, under-replicated blocks, total volume failures (across all DataNodes), and many more.

Use this check (hdfs_namenode) and its counterpart check (hdfs_datanode), not the older two-in-one check (hdfs); that check is deprecated.

Setup

Follow the instructions below to install and configure this check for an Agent running on a host. For containerized environments, see the Autodiscovery Integration Templates for guidance on applying these instructions.

Installation

The HDFS NameNode check is included in the Datadog Agent package, so you don’t need to install anything else on your NameNodes.

Configuration

Connect the Agent

Host

To configure this check for an Agent running on a host:

Edit the `hdfs_namenode.d/conf.yaml` file, in the `conf.d/` folder at the root of your Agent’s configuration directory. See the sample hdfs_namenode.d/conf.yaml for all available configuration options:

```yaml
init_config:

instances:
  ## @param hdfs_namenode_jmx_uri - string - required
  ## The HDFS NameNode check retrieves metrics from the HDFS NameNode's JMX
  ## interface via HTTP(S) (not a JMX remote connection). This check must be installed on
  ## a HDFS NameNode. The HDFS NameNode JMX URI is composed of the NameNode's hostname and port.
  ##
  ## The hostname and port can be found in the hdfs-site.xml conf file under
  ## the property dfs.namenode.http-address
  ## https://hadoop.apache.org/docs/r3.1.3/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml
  #
  - hdfs_namenode_jmx_uri: http://localhost:9870
```
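By default, the NameNode’s `/jmx` servlet returns every registered bean, which makes for a long response. Hadoop’s JMX servlet also accepts a `qry` parameter that limits the output to matching beans, which is handy when checking values by hand. The sketch below builds such URLs. The bean names are assumptions based on Hadoop’s NameNode metrics: `FSNamesystemState` for capacity and DataNode counts, and `FSNamesystem` for missing and corrupt blocks. The helper name is hypothetical.

```python
from urllib.parse import urlencode

# Bean names assumed from Hadoop's NameNode metrics; verify them on your cluster.
NAMENODE_BEANS = (
    "Hadoop:service=NameNode,name=FSNamesystemState",
    "Hadoop:service=NameNode,name=FSNamesystem",
)


def jmx_query_url(jmx_uri, bean_name):
    """Build a /jmx URL that asks the servlet for a single bean via `qry`."""
    query = urlencode({"qry": bean_name})  # percent-encodes ':', '=' and ','
    return jmx_uri.rstrip("/") + "/jmx?" + query


for bean in NAMENODE_BEANS:
    print(jmx_query_url("http://localhost:9870", bean))
```

You can open the printed URLs with curl or a browser from any host that can reach the NameNode’s HTTP port.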

Containerized

For containerized environments, see the Autodiscovery Integration Templates for guidance on applying the parameters below.

| Parameter | Value |
|---|---|
| `<INTEGRATION_NAME>` | `hdfs_namenode` |
| `<INIT_CONFIG>` | blank or `{}` |
| `<INSTANCE_CONFIG>` | `{"hdfs_namenode_jmx_uri": "http://%%host%%:9870"}` |

Log collection

Available for Agent >6.0

Collecting logs is disabled by default in the Datadog Agent. Enable it in the `datadog.yaml` file:

```yaml
logs_enabled: true
```

Add this configuration block to your `hdfs_namenode.d/conf.yaml` file to start collecting your NameNode logs:

```yaml
logs:
  - type: file
    path: /var/log/hadoop-hdfs/*.log
    source: hdfs_namenode
    service: <SERVICE_NAME>
```

Change the `path` and `service` parameter values to match your environment.

Validation

Run the Agent’s status subcommand and look for hdfs_namenode under the Checks section.

Data Collected

Metrics

| Metric | Type | Description | Unit |
|---|---|---|---|
| hdfs.namenode.blocks_total | gauge | Total number of blocks | block |
| hdfs.namenode.capacity_remaining | gauge | Remaining disk space in bytes | byte |
| hdfs.namenode.capacity_total | gauge | Total disk capacity in bytes | byte |
| hdfs.namenode.capacity_used | gauge | Disk usage in bytes | byte |
| hdfs.namenode.corrupt_blocks | gauge | Number of corrupt blocks | block |
| hdfs.namenode.estimated_capacity_lost_total | gauge | Estimated capacity lost in bytes | byte |
| hdfs.namenode.files_total | gauge | Total number of files | file |
| hdfs.namenode.fs_lock_queue_length | gauge | Length of the FSNamesystem lock queue | |
| hdfs.namenode.max_objects | gauge | Maximum number of files HDFS supports | object |
| hdfs.namenode.missing_blocks | gauge | Number of missing blocks | block |
| hdfs.namenode.num_dead_data_nodes | gauge | Total number of dead DataNodes | node |
| hdfs.namenode.num_decom_dead_data_nodes | gauge | Number of decommissioned DataNodes that are dead | node |
| hdfs.namenode.num_decom_live_data_nodes | gauge | Number of decommissioned DataNodes that are live | node |
| hdfs.namenode.num_decommissioning_data_nodes | gauge | Number of DataNodes being decommissioned | node |
| hdfs.namenode.num_live_data_nodes | gauge | Total number of live DataNodes | node |
| hdfs.namenode.num_stale_data_nodes | gauge | Number of stale DataNodes | node |
| hdfs.namenode.num_stale_storages | gauge | Number of stale storages | |
| hdfs.namenode.pending_deletion_blocks | gauge | Number of blocks pending deletion | block |
| hdfs.namenode.pending_replication_blocks | gauge | Number of blocks pending replication | block |
| hdfs.namenode.scheduled_replication_blocks | gauge | Number of blocks scheduled for replication | block |
| hdfs.namenode.total_load | gauge | Total load on the file system | |
| hdfs.namenode.under_replicated_blocks | gauge | Number of under-replicated blocks | block |
| hdfs.namenode.volume_failures_total | gauge | Total number of volume failures across all DataNodes | |
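The precarious states described in the overview can be approximated from a handful of these gauges. The following Python sketch turns one snapshot of hdfs.namenode.* values into warnings. The 20% free-capacity threshold and the choice of metrics are illustrative assumptions, not Datadog recommendations, and the function name is hypothetical. In practice, you would express rules like these as Datadog monitors.

```python
# Gauges where any non-zero value deserves attention (an illustrative choice).
NONZERO_IS_BAD = (
    "hdfs.namenode.missing_blocks",
    "hdfs.namenode.corrupt_blocks",
    "hdfs.namenode.num_dead_data_nodes",
    "hdfs.namenode.volume_failures_total",
)


def namenode_warnings(metrics, min_remaining_pct=20.0):
    """Return warning strings for one snapshot of hdfs.namenode.* metric values."""
    warnings = []
    total = metrics.get("hdfs.namenode.capacity_total", 0)
    if total > 0:
        remaining_pct = 100.0 * metrics.get("hdfs.namenode.capacity_remaining", 0) / total
        if remaining_pct < min_remaining_pct:
            warnings.append(
                f"capacity remaining {remaining_pct:.1f}% is below {min_remaining_pct:.1f}%"
            )
    for name in NONZERO_IS_BAD:
        if metrics.get(name, 0) > 0:
            warnings.append(f"{name} = {metrics[name]}")
    return warnings


# Hypothetical snapshot: 15% free capacity and two missing blocks.
snapshot = {
    "hdfs.namenode.capacity_total": 1000,
    "hdfs.namenode.capacity_remaining": 150,
    "hdfs.namenode.missing_blocks": 2,
}
print(namenode_warnings(snapshot))
# ['capacity remaining 15.0% is below 20.0%', 'hdfs.namenode.missing_blocks = 2']
```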

Events

The HDFS NameNode check does not include any events.

Service Checks

hdfs.namenode.jmx.can_connect

Returns CRITICAL if the Agent cannot connect to the NameNode’s JMX interface for any reason. Returns OK otherwise.

Statuses: ok, critical

Troubleshooting

Need help? Contact Datadog support.